Image by editor

# Introduction

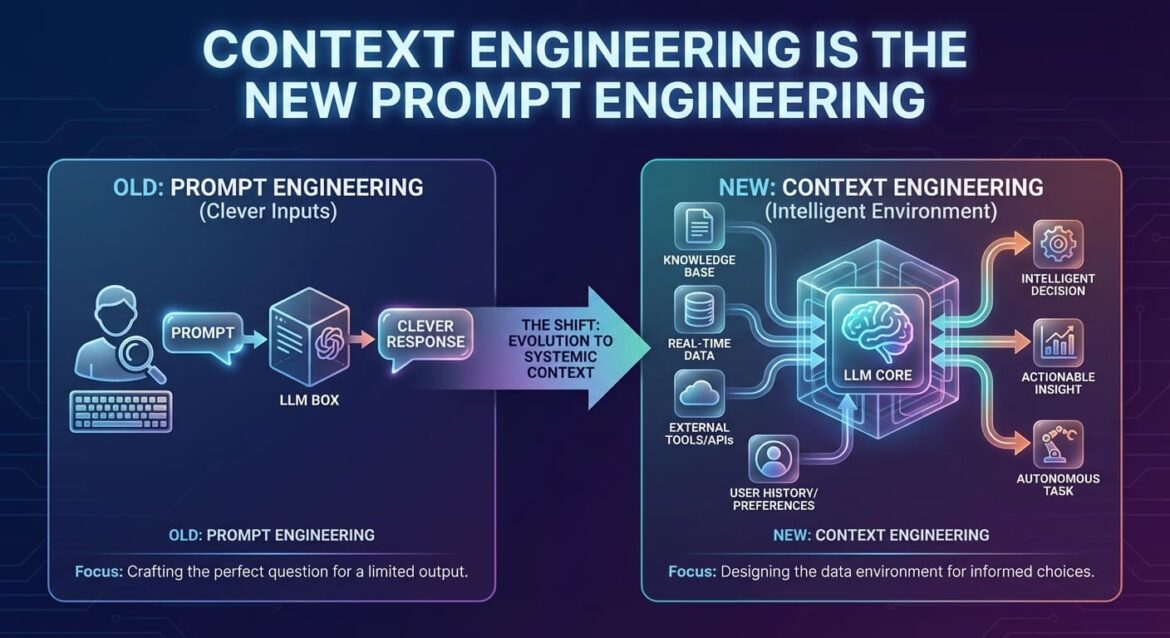

Everyone was obsessed with crafting the perfect prompt – until they realized that prompts weren’t the magic spells they thought they were. The real power lies in what exists around them: the data, metadata, memory, and narrative structure that give AI systems a sense of continuity.

Context engineering is replacing prompt engineering as the new frontier of control. It’s not about clever words anymore. It’s about designing an environment where AI can think with depth, consistency, and purpose.

The change is subtle but seismic: we’re moving from asking smart questions to creating smart worlds for models to live in.

# The short life of the prompt fad

When ChatGPT first launched, people believed that clever prompt wording could unlock unlimited creativity. Engineers and influencers flooded LinkedIn with “magical” templates, each claiming to hack the model’s brain. It was exciting at first, but short-lived: we realized that prompt engineering was never meant to scale. As use cases moved from one-off chats to enterprise workflows, cracks began to appear.

Prompts depend on linguistic precision, not logic. They are fragile. Change one word or token, and the system behaves differently. In small experiments, this is fine. In production? It is chaos.

Companies have learned that models forget, get lost, and misinterpret context unless you spoon-feed them every time. So the industry shifted. Instead of constantly rewriting prompts, engineers began to create frameworks that retain meaning through memory, metadata, and structure. Context engineering became the glue that holds that consistency together.

The end of the prompt fad didn’t kill creativity – it redefined it. Writing beautiful prompts gave way to designing flexible environments. Today’s smartest AI engineers don’t ask better questions; they create better conditions for answers to emerge.

# Context is the real interface

The intelligence of each model is bounded by its context window: the amount of text or data it can process at one time. That limitation gave rise to the discipline of context engineering. The goal is not to phrase the perfect request but to create a setting in which the model’s reasoning remains stable, accurate, and adaptive.

Well-constructed context behaves like invisible infrastructure. It holds the reasoning together, supplies background, and grounds the model’s output in verifiable data. Retrieval-augmented generation (RAG) is a prime example: rather than relying on memoryless prompts, models pull just-in-time context from curated knowledge bases. The result is continuity – AI that remembers what matters and discards what doesn’t.
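
To make the retrieval step concrete, here is a minimal sketch of the idea, not a production RAG system. It assumes a toy bag-of-words similarity in place of real embeddings, and the names `retrieve`, `build_prompt`, and `KNOWLEDGE_BASE` are hypothetical, chosen only for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words vector (a stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, knowledge_base: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, knowledge_base: list[str], k: int = 2) -> str:
    """Pre-load the retrieved context ahead of the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, knowledge_base, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical curated knowledge base, invented for this example.
KNOWLEDGE_BASE = [
    "Refunds are issued within 14 days of a return request.",
    "Support is available on weekdays from 9:00 to 17:00.",
    "Shipping to Europe takes five to seven business days.",
]

print(build_prompt("How long do refunds take?", KNOWLEDGE_BASE, k=1))
```

In a real system the word-count vectors would likely be replaced by learned embeddings and a vector index, but the shape of the pipeline (score, rank, pre-load) stays the same.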

In this paradigm, the context becomes the interface. It is how we communicate structure, not syntax. Instead of giving instructions directly to the model, we build systems that pre-load it with exactly the right background before each query. The future of AI reliability will depend not on fancy phrasing but on engineered context pipelines that consistently keep models grounded in relevant information.

# The architecture behind understanding

Context engineering works like urban planning for knowledge. It organizes data, memory, and logic so that the model can navigate complexity without getting lost. Where prompt engineering focused on linguistic flair, context engineering focuses on infrastructure: the embeddings, schemas, and retrieval logic that create a “mental map” for the model.

A well-engineered context is layered. The first layer structures the persistent identity: who the user is, what they want, and how the model should behave. The next layer incorporates relevant, time-sensitive knowledge obtained from external databases or application programming interfaces (APIs). Finally, a transient layer adapts in real time, updating based on the direction of the interaction. Together, these layers form the architecture of understanding.
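
One way to picture the three layers is as a small data structure that is rendered into a single context block before each query. This is a sketch under stated assumptions: the class name `LayeredContext`, its fields, and the bracketed section labels are all hypothetical and do not correspond to any particular framework.

```python
from dataclasses import dataclass, field


@dataclass
class LayeredContext:
    # Persistent layer: who the user is and how the model should behave.
    identity: str
    # Knowledge layer: time-sensitive facts fetched from databases or APIs.
    knowledge: list[str] = field(default_factory=list)
    # Transient layer: the most recent turns of the current interaction.
    transient: list[str] = field(default_factory=list)

    def record_turn(self, turn: str, max_turns: int = 3) -> None:
        """Append a turn and keep only the latest max_turns entries."""
        self.transient.append(turn)
        self.transient = self.transient[-max_turns:]

    def render(self, query: str) -> str:
        """Assemble the layers, most stable first, followed by the query."""
        sections = [
            "[Identity]\n" + self.identity,
            "[Knowledge]\n" + "\n".join(self.knowledge),
            "[Conversation]\n" + "\n".join(self.transient),
            "[Query]\n" + query,
        ]
        return "\n\n".join(sections)


ctx = LayeredContext(identity="The user is a data analyst. Answer concisely.")
ctx.knowledge.append("Q3 revenue figures were updated this morning.")
ctx.record_turn("User: Summarize last quarter.")
print(ctx.render("What changed since the previous summary?"))
```

Ordering the layers from most stable to most volatile is a design choice in this sketch, not a rule; it simply keeps the parts that rarely change in a predictable position.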

It is no longer wordplay; it is information choreography. Engineers are learning to balance brevity against context saturation, deciding how much information to surface without overwhelming the model. The difference between AI that hallucinates and AI that reasons clearly often comes down to a single design choice: how its context is created and maintained.
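
The brevity-versus-saturation trade-off can be sketched as a budgeting problem: given scored snippets and a size limit, keep the most relevant ones that fit. The example below is illustrative only. It assumes relevance scores are computed elsewhere, approximates token counts by whitespace-separated words, and uses the hypothetical names `estimate_tokens` and `fit_to_budget`.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate: one token per whitespace-separated word (an assumption)."""
    return len(text.split())


def fit_to_budget(snippets: list[tuple[float, str]], budget: int) -> list[str]:
    """Greedily keep the highest-scoring snippets that fit within the budget."""
    chosen: list[str] = []
    used = 0
    for score, text in sorted(snippets, key=lambda s: s[0], reverse=True):
        cost = estimate_tokens(text)
        if used + cost <= budget:
            chosen.append(text)
            used += cost
    return chosen


# Hypothetical (relevance score, snippet) pairs.
candidates = [
    (0.9, "Customer is on the annual plan."),
    (0.4, "Customer once asked about a feature that was later discontinued."),
    (0.7, "Last ticket concerned a billing error."),
]
print(fit_to_budget(candidates, budget=12))
```

A greedy pass like this is the simplest possible policy; a real tokenizer and a smarter packing strategy would change the numbers but not the underlying decision of what to leave out.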

# From commanding to collaborating with models

Prompting was a command-based relationship: humans told AI what to do. Context engineering turns that into collaboration. The goal is no longer to control every response, but to co-design the framework in which those responses unfold. It’s a dance between structure and autonomy.

When context systems integrate memory, feedback, and long-term intent, the model begins to function less like a chatbot and more like a collaborator. Imagine an AI that remembers past edits, understands your stylistic patterns, and adjusts its logic accordingly. That’s collaboration through context. Each interaction builds on the last, creating a shared mental workspace.

This collaborative layer completely changes the way we think about prompting. Instead of phrasing commands, we define relationships. Context engineering gives AI consistency, empathy, and purpose – qualities that were impossible to achieve through one-off linguistic commands.

# Memory as the new prompt layer

The introduction of memory marks the real end of prompt engineering. Static prompts expire after a single exchange; memory turns AI interactions into evolving stories. Through vector databases and retrieval systems, models can now retain lessons, decisions, and mistakes, and then use them to refine future reasoning.

This does not mean infinite memory. Smart context engineers curate selective recall. They design mechanisms that decide what to keep, compress, or forget.
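
A keep, compress, or forget policy can be sketched in a few lines. The version below is a simplification under stated assumptions: importance scores are taken as given, "compression" is plain truncation standing in for a real summarizer, and the names `Memory`, `compress`, and `curate` and the two thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    importance: float  # 0.0 to 1.0, assumed to be scored upstream


def compress(text: str, max_words: int = 6) -> str:
    """Truncate to max_words as a placeholder for real summarization."""
    words = text.split()
    if len(words) <= max_words:
        return text
    return " ".join(words[:max_words]) + " ..."


def curate(memories: list[Memory], keep_at: float = 0.7, compress_at: float = 0.3) -> list[Memory]:
    """Keep important memories whole, shorten middling ones, drop the rest."""
    curated: list[Memory] = []
    for m in memories:
        if m.importance >= keep_at:
            curated.append(m)
        elif m.importance >= compress_at:
            curated.append(Memory(compress(m.text), m.importance))
        # Anything below compress_at is forgotten.
    return curated


history = [
    Memory("User prefers British spelling in all drafts", 0.9),
    Memory("User asked about the weather in Lisbon during a long tangent last week", 0.4),
    Memory("User said hello", 0.1),
]
for m in curate(history):
    print(m.text)
```

The thresholds are arbitrary here; in practice they would be tuned against how much context the model can usefully absorb.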

The art, as in human experience, lies in balancing novelty with relevance. A model that remembers everything is noisy; one that remembers strategically is wise.

# The rise of contextual design

Context engineering is rapidly spreading beyond research laboratories. In customer support, AI systems reference prior tickets to maintain empathy. In analytics, data models learn to recall previous summaries for consistency. In creative fields, tools like image generators now take advantage of layered context to deliver work that feels intentionally human.

Contextual design introduces a new feedback loop: context informs behavior, and behavior reshapes the context. It is a dynamic cycle that drives adaptability. The system evolves with every input. This shift demands new design thinking: AI products should be treated as living ecosystems, not static tools. Engineers are becoming curators of continuity.

Soon, every serious AI workflow will rely on engineered context layers. Those who ignore this change will find their output weak and inconsistent. Those who embrace it will create systems that are smarter, more aligned, and more resilient over time.

# Conclusion

Prompt engineering taught us how to talk to machines. Context engineering teaches us to build the worlds they think in. The frontier of AI design now lies in memory, persistence, and adaptive structure. Every powerful system of the next decade will be built not on clever words but on coherent context.

The era of prompts is ending. The era of environments has begun. People who learn to engineer context will not only get better outputs – they will build models that actually understand. That is not automation. It is co-intelligence.

Nahla Davis is a software developer and technical writer. Before turning full-time to technical writing, Davis managed, among other interesting things, to serve as a lead programmer at an Inc. 5,000 experiential branding organization whose clients include Samsung, Time Warner, Netflix, and Sony.