NVIDIA today announced a significant expansion of its strategic collaboration with Mistral AI. The partnership coincides with the release of the new Mistral 3 frontier open model family, a moment in which hardware acceleration and open-source model architectures have combined to redefine performance benchmarks.

The collaboration delivers a major leap in inference speed: the new models run up to 10x faster on NVIDIA GB200 NVL72 systems than on the previous-generation H200 systems. This breakthrough unlocks greater efficiency for enterprise-grade AI, promising to ease the latency and cost barriers that have historically hindered the large-scale deployment of reasoning models.

A generational leap: 10x faster on Blackwell

As enterprise demands shift from simple chatbots to high-reasoning, long-context agents, inference efficiency has become a critical hurdle. The collaboration between NVIDIA and Mistral AI addresses this problem by optimizing the Mistral 3 family specifically for the Blackwell architecture.

Where production AI systems must deliver both strong user experience (UX) and cost-efficient scale, the NVIDIA GB200 NVL72 delivers up to 10x the performance of the previous-generation H200. This is not just an increase in raw speed; it also translates to significantly higher energy efficiency. The system exceeds 5,000,000 tokens per second per megawatt (MW) at a user interactivity rate of 40 tokens per second.

For power-constrained data centers, this efficiency gain is just as important as the performance boost. The generational leap ensures low per-token costs while maintaining the high throughput required for real-time applications.
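
The efficiency figure can be unpacked with simple arithmetic. The sketch below assumes that the quoted 5,000,000 tokens per second per megawatt is an aggregate rate and that every user streams at a steady 40 tokens per second; the function names are illustrative only. Because the article says the system "exceeds" this rate, the results are a lower bound on users and an upper bound on energy per token.

```python
# Back-of-the-envelope arithmetic on the figures quoted above.
# Assumption: 5,000,000 tokens/s/MW is an aggregate rate and each user
# streams at a steady 40 tokens/s.

TOKENS_PER_SEC_PER_MW = 5_000_000
TOKENS_PER_SEC_PER_USER = 40
WATTS_PER_MW = 1_000_000


def concurrent_users_per_mw(throughput: float, per_user_rate: float) -> float:
    """Number of simultaneous streams one megawatt can sustain."""
    return throughput / per_user_rate


def joules_per_token(throughput_per_mw: float) -> float:
    """Energy spent per generated token (1 W = 1 J/s)."""
    return WATTS_PER_MW / throughput_per_mw


users = concurrent_users_per_mw(TOKENS_PER_SEC_PER_MW, TOKENS_PER_SEC_PER_USER)
energy = joules_per_token(TOKENS_PER_SEC_PER_MW)
print(f"{users:,.0f} concurrent users per MW")
print(f"{energy:.2f} J per token")
```

Under these assumptions, one megawatt serves about 125,000 concurrent streams at roughly 0.2 joules per token.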

A new Mistral 3 family

The engine powering this performance is the recently released Mistral 3 family. This suite of models delivers industry-leading accuracy, efficiency, and optimization capabilities, covering the spectrum from large-scale data center workloads to edge device inference.

Mistral Large 3: Flagship MoE

At the top of the hierarchy sits Mistral Large 3, a state-of-the-art sparse, multimodal, and multilingual mixture-of-experts (MoE) model.

- Total parameters: 675 billion

- Active parameters: 41 billion

- Context Window: 256K tokens

Trained on NVIDIA Hopper GPUs, Mistral Large 3 is designed to handle complex reasoning tasks, offering parity with top-tier closed models while retaining the flexibility of open weights.
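
To make the sparsity concrete, the following sketch computes the share of parameters that is active per token and a rough weight-only memory footprint at a few bit widths. The bit widths are illustrative assumptions, and the estimate ignores scaling factors, the KV cache, activations, and any components kept at higher precision.

```python
# Rough sizing from the parameter counts listed above.
# Assumption: footprint counts weights only (no scales, KV cache, activations).

TOTAL_PARAMS = 675e9   # total parameters
ACTIVE_PARAMS = 41e9   # parameters active per token

# Illustrative storage widths, in bits per weight.
BITS_PER_WEIGHT = {"BF16": 16, "FP8": 8, "4-bit": 4}


def active_fraction(total: float, active: float) -> float:
    """Share of the model's parameters used for each token."""
    return active / total


def weight_gigabytes(params: float, bits: int) -> float:
    """Weight-only footprint in GB (1 GB = 1e9 bytes)."""
    return params * bits / 8 / 1e9


frac = active_fraction(TOTAL_PARAMS, ACTIVE_PARAMS)
footprints = {name: weight_gigabytes(TOTAL_PARAMS, bits)
              for name, bits in BITS_PER_WEIGHT.items()}

print(f"Active per token: {frac:.1%}")
for name, gb in footprints.items():
    print(f"{name:>5}: {gb:,.1f} GB")
```

Only about 6% of the weights participate in each token, which is why a sparse MoE can be far cheaper to run per token than a dense model of the same total size, even though all weights must still be held in memory.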

Ministral 3: Dense Power at the Edge

Complementing the large model is the Ministral 3 series, a suite of small, dense, high-performance models designed for speed and versatility.

- Sizes: 3B, 8B, and 14B parameters.

- Variants: Base, Instruct, and Reasoning for each size (nine models total).

- Context Window: 256K tokens across the board.

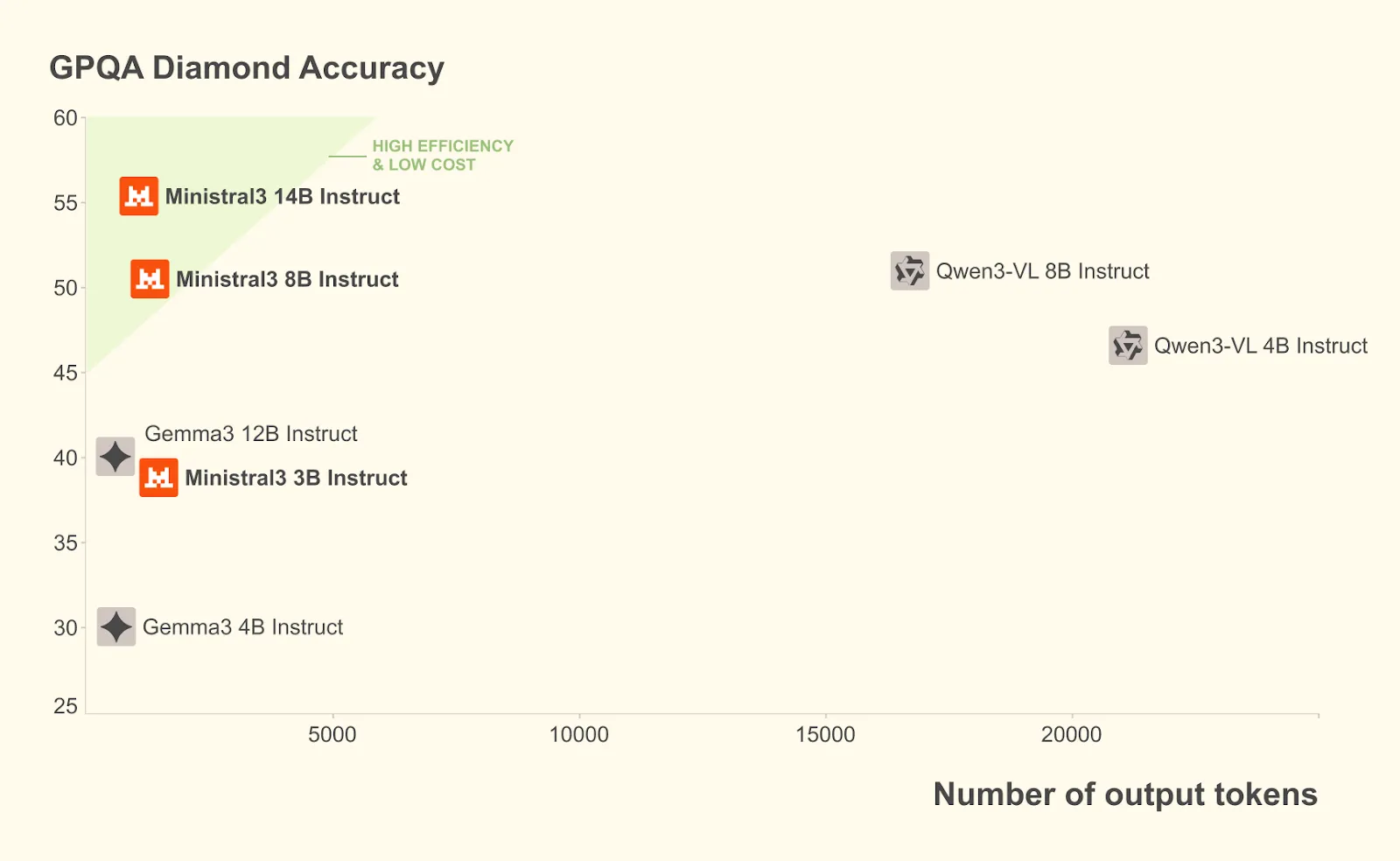

The Ministral 3 series excels on the GPQA Diamond accuracy benchmark, using 100 fewer tokens while delivering high accuracy.

The engineering behind the speed: a comprehensive optimization stack

The “10x” performance claim is driven by an extensive stack of optimizations co-developed by Mistral and NVIDIA engineers. The teams took an “extreme co-design” approach, merging hardware capabilities with model architecture adjustments.

TensorRT-LLM Wide Expert Parallelism (Wide-EP)

To fully exploit the scale of the GB200 NVL72, NVIDIA employs Wide Expert Parallelism within TensorRT-LLM. This technology provides optimized MoE GroupGEMM kernels, expert distribution, and load balancing.

Importantly, Wide-EP exploits the coherent memory domain and the NVLink fabric of the NVL72. It is highly resilient to architectural variations across large MoE models. For example, Mistral Large 3 uses approximately 128 experts per layer, about half as many as comparable models such as DeepSeek-R1. Despite this difference, Wide-EP enables the model to realize the high-bandwidth, low-latency, non-blocking benefits of the NVLink fabric, ensuring that the model's sheer size does not result in communication bottlenecks.
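
The idea of expert distribution with load balancing can be illustrated with a toy placement routine. This is not TensorRT-LLM's algorithm or API: it is a minimal greedy sketch, assuming 128 experts in one layer, the 72 GPUs of an NVL72 rack, and synthetic per-expert token loads drawn from a skewed distribution.

```python
import heapq
import random


def place_experts(expert_loads, num_gpus):
    """Greedy placement: the heaviest remaining expert goes to the
    currently least-loaded GPU (longest-processing-time heuristic)."""
    heap = [(0.0, gpu) for gpu in range(num_gpus)]  # (load, gpu id)
    heapq.heapify(heap)
    placement = {gpu: [] for gpu in range(num_gpus)}
    by_load = sorted(enumerate(expert_loads), key=lambda kv: kv[1], reverse=True)
    for expert, load in by_load:
        gpu_load, gpu = heapq.heappop(heap)
        placement[gpu].append(expert)
        heapq.heappush(heap, (gpu_load + load, gpu))
    gpu_loads = [sum(expert_loads[e] for e in placement[g])
                 for g in range(num_gpus)]
    return placement, gpu_loads


random.seed(0)
NUM_EXPERTS = 128  # approximate per-layer expert count from the article
NUM_GPUS = 72      # GPUs in one GB200 NVL72 rack

# Synthetic, skewed token counts per expert (hypothetical data).
loads = [random.paretovariate(2.0) for _ in range(NUM_EXPERTS)]
placement, gpu_loads = place_experts(loads, NUM_GPUS)

mean_load = sum(gpu_loads) / NUM_GPUS
print(f"max/mean GPU load: {max(gpu_loads) / mean_load:.2f}")
```

A ratio close to 1.0 means no single GPU becomes the straggler for the layer. Production systems also have to account for communication cost and shifting routing patterns, which this sketch leaves out.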

Native NVFP4 quantization

One of the most significant technical advancements in this release is support for NVFP4, the native quantization format of the Blackwell architecture.

For Mistral Large 3, developers can deploy a compute-optimized NVFP4 checkpoint that has been quantized offline using the open-source llm-compressor library.

This approach reduces computation and memory costs while closely preserving accuracy. It takes advantage of NVFP4's high-precision FP8 scaling factors and fine-grained block scaling to control quantization error. The recipe specifically targets the MoE weights while keeping other components at their original precision, allowing the model to be deployed seamlessly onto the GB200 NVL72 with minimal accuracy loss.
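
Fine-grained block scaling can be sketched in a few lines. The example below is a simplified illustration, not the llm-compressor recipe: it assumes 16-element blocks and the 4-bit E2M1 value grid, and it keeps each block scale as an ordinary Python float rather than rounding it to FP8 as the real format does.

```python
import math

# Magnitudes representable by a 4-bit E2M1 float (assumed grid).
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16  # assumed block size, not stated in the article


def quantize_block(block):
    """Return (scale, codes): one scale per block, one grid value per weight."""
    amax = max(abs(x) for x in block)
    # Map the largest magnitude in the block onto the top of the grid.
    scale = amax / FP4_GRID[-1] if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = min(FP4_GRID, key=lambda g: abs(abs(x) / scale - g))
        codes.append(-mag if x < 0 else mag)
    return scale, codes


def fake_quantize(values, block_size=BLOCK_SIZE):
    """Quantize then dequantize, block by block, to expose the rounding error."""
    out = []
    for i in range(0, len(values), block_size):
        scale, codes = quantize_block(values[i:i + block_size])
        out.extend(scale * c for c in codes)
    return out


# Two blocks with very different ranges (synthetic data).
weights = [math.sin(i) * (0.1 if i < 16 else 10.0) for i in range(32)]
restored = fake_quantize(weights)

for b in range(2):
    lo, hi = b * BLOCK_SIZE, (b + 1) * BLOCK_SIZE
    err = max(abs(w - r) for w, r in zip(weights[lo:hi], restored[lo:hi]))
    print(f"block {b}: max abs error = {err:.4f}")
```

Because each block carries its own scale, the small-magnitude block is not forced onto the coarse grid that the large-magnitude block needs, which is what keeps quantization error under control.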

Disaggregated Serving with NVIDIA Dynamo

Mistral Large 3 uses NVIDIA Dynamo, a low-latency distributed inference framework, to disaggregate the prefill and decode phases of inference.

In a traditional setup, the prefill phase (processing the input prompt) and the decode phase (generating the output) compete for resources. By rate-matching and disaggregating these phases, Dynamo significantly increases performance for long-context workloads such as 8K input/1K output configurations. This ensures high throughput even when using the model's large 256K context window.
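
Rate matching amounts to sizing the decode pool so that it drains requests as fast as the prefill pool produces them. The sketch below is not Dynamo's API; it is a minimal calculation for the 8K input/1K output case, and the per-worker throughput numbers are hypothetical placeholders.

```python
import math


def requests_per_second(tokens_per_second: float, tokens_per_request: int) -> float:
    """Convert a token throughput into a request throughput."""
    return tokens_per_second / tokens_per_request


def decode_workers_needed(prefill_workers: int,
                          prefill_tps: float,
                          decode_tps: float,
                          input_len: int,
                          output_len: int) -> int:
    """Smallest decode pool that keeps up with the prefill pool."""
    prefill_rps = prefill_workers * requests_per_second(prefill_tps, input_len)
    decode_rps_per_worker = requests_per_second(decode_tps, output_len)
    return math.ceil(prefill_rps / decode_rps_per_worker)


# Hypothetical per-worker throughputs, chosen only for illustration.
PREFILL_TPS = 40_000   # prompt tokens processed per second per prefill worker
DECODE_TPS = 2_000     # output tokens generated per second per decode worker

workers = decode_workers_needed(
    prefill_workers=4,
    prefill_tps=PREFILL_TPS,
    decode_tps=DECODE_TPS,
    input_len=8192,
    output_len=1024,
)
print(f"decode workers to match 4 prefill workers: {workers}")
```

With these placeholder numbers, four prefill workers need ten decode workers. In a colocated setup the two phases share the same GPUs, so this ratio cannot be tuned independently.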

From Cloud to Edge: Ministral 3 Performance

Optimization efforts extend beyond giant data centers. Recognizing the growing need for local AI, the Ministral 3 series is engineered for edge deployment, offering flexibility for a variety of needs.

RTX and Jetson Acceleration

The dense Ministral models are optimized for platforms such as NVIDIA GeForce RTX AI PCs and NVIDIA Jetson robotics modules.

- RTX 5090: The Ministral-3B variants can reach inference speeds of 385 tokens per second on the NVIDIA RTX 5090 GPU. This brings workstation-class AI performance to local PCs, enabling faster iteration and greater data privacy.

- Jetson Thor: For robotics and edge AI, developers can use the vLLM container on NVIDIA Jetson Thor. The Ministral-3-3B-Instruct model achieves 52 tokens per second at single concurrency, scaling up to 273 tokens per second at a concurrency of 8.
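
The Jetson Thor numbers can be turned into a scaling estimate. This sketch assumes that the 273 tokens per second at a concurrency of 8 is an aggregate across all eight streams, which the article does not state explicitly.

```python
# Scaling arithmetic on the Jetson Thor figures quoted above.
# Assumption: 273 tokens/s is the aggregate rate across 8 concurrent streams.

SINGLE_STREAM_TPS = 52
AGGREGATE_TPS_AT_8 = 273
CONCURRENCY = 8

per_stream_tps = AGGREGATE_TPS_AT_8 / CONCURRENCY      # rate each user sees
speedup = AGGREGATE_TPS_AT_8 / SINGLE_STREAM_TPS        # total throughput gain
scaling_efficiency = speedup / CONCURRENCY              # 1.0 would be linear

print(f"per-stream rate at concurrency 8: {per_stream_tps:.1f} tokens/s")
print(f"aggregate speedup over one stream: {speedup:.2f}x")
print(f"scaling efficiency: {scaling_efficiency:.0%}")
```

Under that assumption, batching eight requests raises total throughput by about 5.25x while each individual stream slows to roughly 34 tokens per second.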

Comprehensive framework support

NVIDIA has collaborated with the open-source community to ensure that these models are usable everywhere.

- Llama.cpp and Ollama: NVIDIA collaborated with these popular frameworks to ensure fast iteration and low latency for local development.

- SGLang: NVIDIA collaborated with SGLang to create an implementation of Mistral Large 3 that supports both disaggregation and speculative decoding.

- vLLM: NVIDIA worked with vLLM to expand support for kernel integrations, including speculative decoding (EAGLE), Blackwell support, and expanded parallelism.

Production ready with NVIDIA NIM

To streamline enterprise adoption, the new models will be available through NVIDIA NIM microservices.

Mistral Large 3 and Ministral-14B-Instruct are currently available through the NVIDIA API catalog and preview API. Soon, enterprise developers will be able to use downloadable NVIDIA NIM microservices, which provide a containerized, production-ready solution for deploying the Mistral 3 family with minimal setup on any GPU-accelerated infrastructure.

This availability ensures that the “10x” performance advantage of the GB200 NVL72 can be realized in production environments without complex custom engineering, democratizing access to frontier-class intelligence.

Conclusion: A New Standard for Open Intelligence

The release of the NVIDIA-accelerated Mistral 3 open model family represents a major leap forward for AI in the open-source community. By offering frontier-level performance under an open-source license, and backing it with a robust hardware optimization stack, Mistral and NVIDIA are meeting developers where they are.

From the massive scale of the GB200 NVL72 using Wide-EP and NVFP4 to the edge-friendly density of Ministral on an RTX 5090, this partnership provides a scalable, efficient path for artificial intelligence. With upcoming optimizations such as multitoken prediction (MTP) and speculative decoding with EAGLE-3 expected to push performance even further, the Mistral 3 family is set to become a foundational element of the next generation of AI applications.

Available for testing!

If you are a developer looking to benchmark these performance gains, you can download the Mistral 3 models directly from Hugging Face, or test the deployment-free hosted versions at build.nvidia.com/mistralai to evaluate latency and throughput for your specific use case.

The models can be viewed on Hugging Face. Further details are available on the corporate blog and the technical/developer blog.

Thanks to the NVIDIA AI team for the thought leadership/resources for this article. The NVIDIA AI team has endorsed this content/article.

Jean-Marc is a successful AI business executive. He leads and accelerates the development of AI-driven solutions and started a computer vision company in 2006. He is a recognized speaker at AI conferences and holds an MBA from Stanford.