Data collaboration is the backbone of modern AI innovation, especially when organizations work with external partners to unlock new insights. However, data privacy and intellectual property protection remain major challenges: partners need to collaborate without exposing sensitive data.

To bridge this gap, customers across all industries are using Databricks Clean Rooms to run shared analytics on sensitive data and enable privacy-first collaboration.

We have compiled the 10 most frequently asked questions about clean rooms below. These explain what clean rooms are, how they protect data and IP, how they work in the cloud and across platforms, and what it takes to get started. Let’s jump in.

1. What is a “Data Clean Room”?

A data clean room is a secure environment where you and your partners can work together on sensitive data to extract useful insights without sharing the underlying raw data.

In Databricks, you create a clean room, add the assets you want to use, and run only approved notebooks in an isolated, secure, and governed environment.

2. What are some example use cases of clean rooms?

Clean rooms are useful when multiple parties need to analyze sensitive data without sharing their raw data. This is often due to privacy rules, contracts, or intellectual property protection.

They are used in many industries including advertising, healthcare, finance, government, transportation, and data monetization.

Some examples include:

Advertising and marketing: Identity solutions, campaign planning and measurement, data monetization, and brand collaboration for retail media, all without exposing PII.

- Partners such as Epsilon, The Trade Desk, Acxiom, LiveRamp, and Deloitte use Databricks Clean Room for identity solutions.

Financial services: Banks, insurers, and credit card companies combine data for better operations, fraud detection, and analysis.

- Example: Mastercard uses clean rooms to match and analyze PII data to detect fraud; Yours Securely matches borrower data with lenders to find qualified borrowers.

Clean rooms protect customer data while allowing collaboration and data enrichment.

3. What types of data assets can I share in a clean room?

You can share a wide range of Unity Catalog-managed assets in Databricks Clean Rooms:

- Tables (managed, external, and foreign): Structured data such as transactions, events, or customer profiles.

- Views: Filtered or aggregated subsets of your tables.

- Volumes: Files like images, audio, documents, or private code libraries.

- Notebooks: SQL or Python notebooks that define the analysis you want to run.

Here’s what it looks like in practice:

- A retailer, a CPG brand, and a market research firm share anonymized views to jointly analyze campaign reach, including hashed customer IDs, aggregate sales metrics, and regional demographics.

- A streaming platform and an advertising agency share campaign impression tables and a notebook that calculates cross-platform audience metrics.

- A bank and a fintech partner share volumes containing risk and fraud ML models and use a notebook to jointly score the models while keeping individual records private.
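As a rough illustration of the first example, the sketch below shows how one party might prepare hashed IDs and regional aggregates before exposing them as a view. It is plain Python, not Databricks code: the column names, the shared salt, and the helpers `hash_id` and `regional_sales` are all hypothetical and stand in for whatever logic your view would actually contain.

```python
import hashlib
from collections import defaultdict

# Hypothetical salt agreed on by the collaborators (an assumption for this
# sketch); every party must use the same one so hashed IDs can be matched.
SHARED_SALT = "example-salt"


def hash_id(customer_id: str, salt: str = SHARED_SALT) -> str:
    """Return the SHA-256 hex digest of the salted customer ID."""
    return hashlib.sha256((salt + customer_id).encode("utf-8")).hexdigest()


def regional_sales(rows):
    """Aggregate raw sales rows into per-region totals and distinct customers.

    `rows` is an iterable of dicts with the hypothetical keys
    'customer_id', 'region', and 'amount'.
    """
    totals = defaultdict(float)
    customers = defaultdict(set)
    for row in rows:
        totals[row["region"]] += row["amount"]
        customers[row["region"]].add(hash_id(row["customer_id"]))
    # Only aggregates leave this function; no raw or hashed IDs are returned.
    return {
        region: {"total_sales": totals[region], "customers": len(customers[region])}
        for region in sorted(totals)
    }


raw_sales = [
    {"customer_id": "c-001", "region": "EMEA", "amount": 20.0},
    {"customer_id": "c-002", "region": "EMEA", "amount": 5.0},
    {"customer_id": "c-001", "region": "EMEA", "amount": 10.0},
    {"customer_id": "c-003", "region": "APAC", "amount": 7.5},
]

summary = regional_sales(raw_sales)
```

In a real clean room, the equivalent logic would more likely live in a SQL view over a Unity Catalog table; the point here is only that the shared asset carries hashed keys and aggregates rather than raw identifiers.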

4. How does it compare to Delta Sharing? Why would I use a clean room instead?

Think of it this way: Delta Sharing is the right choice when one party needs read-only access to the data in their own environment and it is acceptable for them to see the underlying records.

Clean Rooms adds a secure, controlled space for multi-party analysis when data must remain private. Partners can join data assets, run mutually agreed-upon code, and return only outputs that all parties agree on. This is useful when you need to meet strict privacy guarantees or support regulated workflows. In fact, data shared in a clean room still uses the Delta Sharing protocol behind the scenes.

For example, a retailer can use Delta Sharing to give a supplier read-only access to a sales table so the supplier can see how products are selling. The same pair would use a clean room when they need to join richer, more sensitive data (like customer traits or detailed lists) from both parties, run approved notebooks, and share only aggregate outputs like demand forecasts or top-risk items.

5. How are sensitive data and IP kept secure in a clean room?

Clean rooms are designed so that your partners can never see your raw data or IP. Your data stays in your own Unity Catalog, and you share only specific assets into the clean room via Delta Sharing, with access controlled by approved notebooks.

Clean rooms enforce these protections in several ways:

- Collaborators only see the schema (column names and types), not the actual row-level data.

- Only notebooks that you and your partners approve can run, and they run on serverless compute in an isolated environment.

- Notebooks write to temporary output tables, so you control exactly what comes out of the clean room.

- Outbound network traffic is restricted through serverless egress control (SEC).

- To protect IP or proprietary code, you can package your logic as a private library, store it in a Unity Catalog volume, and reference it in a clean room notebook without revealing your source code.

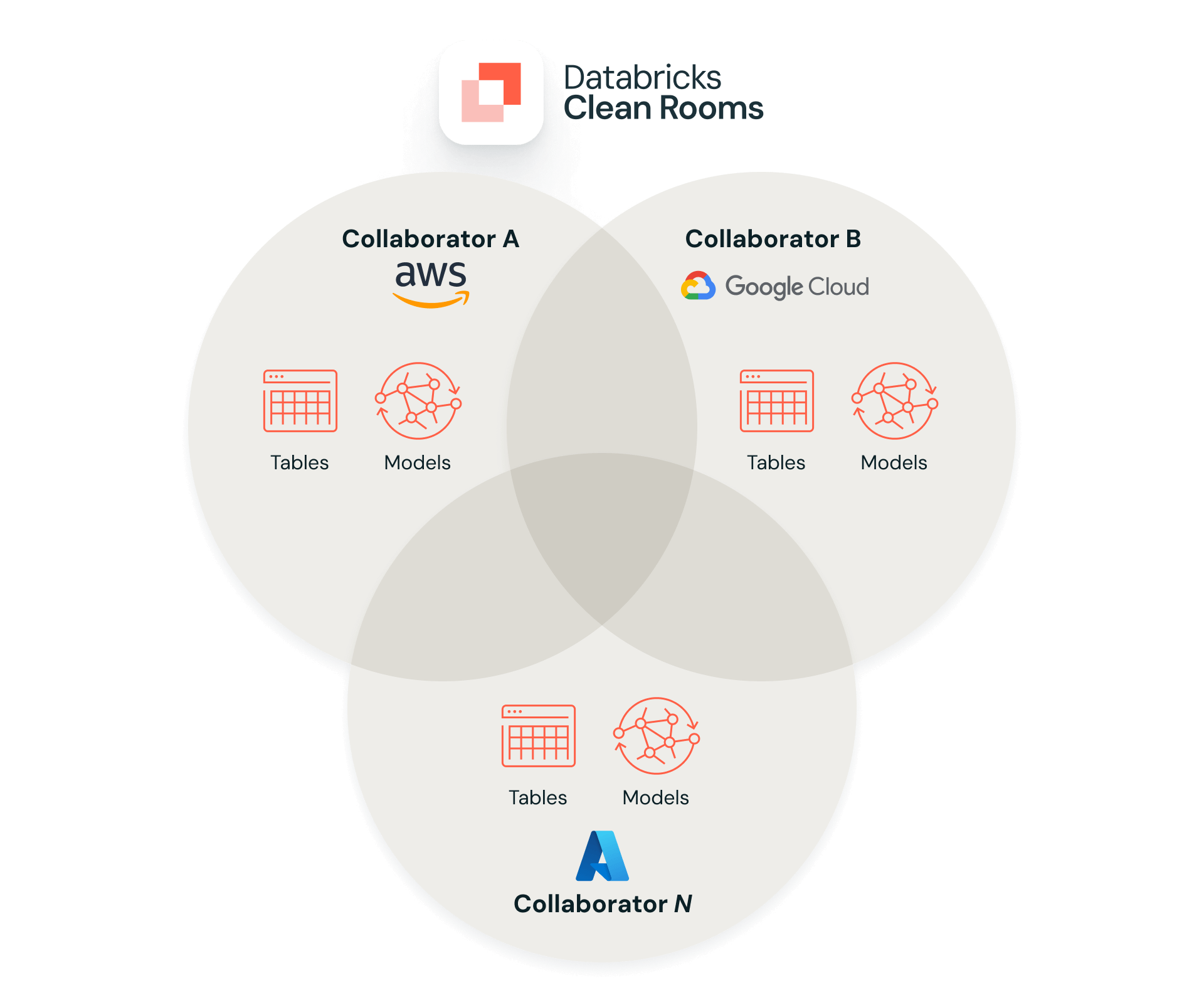

6. Can collaborators on different clouds join the same clean room?

Yes. Databricks Clean Rooms is designed for multicloud and cross-region collaboration, as long as each participant has a Unity Catalog-enabled workspace and Delta Sharing enabled on their metastore. This means an organization using Databricks on Azure can collaborate in a clean room with partners on AWS or GCP.

7. Can I bring data from Snowflake, BigQuery, or other platforms into a clean room?

Yes, absolutely. Lakehouse Federation exposes external systems like Snowflake, BigQuery, and traditional warehouses as foreign catalogs in Unity Catalog (UC).

Here’s how it works at a high level: You use Lakehouse Federation to create connections and foreign catalogs that expose external data sources in Unity Catalog, without having to copy all that data into Databricks. Once those external tables are available in Unity Catalog, you can share them in a clean room like any other Unity Catalog-managed table or view.

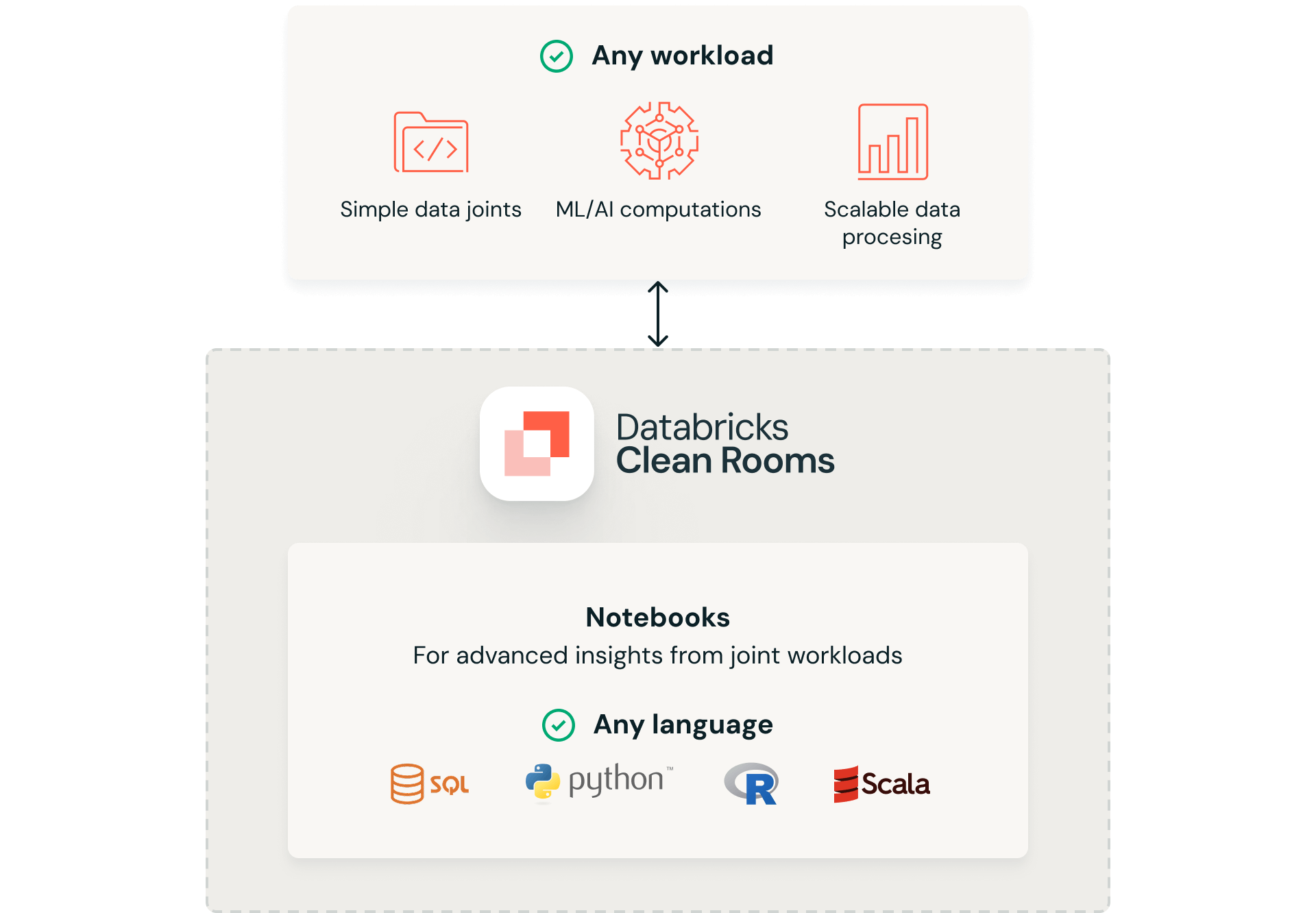

8. How do I run custom analyses on combined data?

Inside a clean room, you do almost everything through a notebook. You add a SQL or Python notebook that contains the code for the analysis you want, your partners review and approve the notebook, and then it can run.

Simple case: You might have a SQL notebook that calculates the overlapping hashed IDs between a retailer’s purchases and a media partner’s impressions, and then reports reach, frequency, and conversions.

More advanced: You use a Python notebook to add features from both sides, train or score a model on the combined data, and write predictions to an output table. The approved runner sees the output, but no one sees the other party’s raw records.
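To make the simple case more concrete, here is a minimal Python sketch of an overlap calculation on hashed IDs. The function name `campaign_overlap`, the sample IDs, and the metric definitions (reach as distinct exposed IDs, frequency as impressions per reached ID, conversions as exposed IDs that also purchased) are assumptions for illustration; your approved notebook would define these according to what the parties agree on.

```python
from collections import Counter


def campaign_overlap(impression_ids, purchaser_ids):
    """Compute reach, average frequency, and conversions from hashed IDs.

    impression_ids: list with one hashed ID per ad impression (repeats allowed).
    purchaser_ids: iterable of hashed IDs for customers who made a purchase.
    """
    impressions_per_id = Counter(impression_ids)
    reached = set(impressions_per_id)
    converted = reached & set(purchaser_ids)
    reach = len(reached)
    # Return aggregates only, so no individual ID appears in the output.
    return {
        "reach": reach,
        "avg_frequency": len(impression_ids) / reach if reach else 0.0,
        "conversions": len(converted),
        "conversion_rate": len(converted) / reach if reach else 0.0,
    }


# Hypothetical hashed IDs from the media partner and the retailer.
impressions = ["h1", "h1", "h2", "h3", "h3", "h3"]
purchasers = ["h2", "h3", "h9"]

metrics = campaign_overlap(impressions, purchasers)
```

Because the function returns only counts and ratios, the output table it would feed contains no row-level records from either party.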

9. How does multi-party collaboration work?

In Databricks Clean Rooms, you can have up to 10 organizations (you and 9 partners) working together in a secure environment, even if you’re on different clouds or data platforms. Each organization keeps its data in its own Unity Catalog and shares only the specific tables, views, or files it wants to use in the clean room.

Once everyone is involved, each party can propose SQL or Python notebooks, and those notebooks require approval before running so all parties are comfortable with the logic.
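The approval step can be pictured with a toy model like the one below. This is not the Databricks API: the `ProposedNotebook` class, its methods, and the rule that every collaborator must approve before a run are assumptions made purely to illustrate the idea of gating execution on approval.

```python
from dataclasses import dataclass, field


@dataclass
class ProposedNotebook:
    """Toy stand-in for a notebook proposed in a clean room (hypothetical)."""

    name: str
    collaborators: frozenset
    approvals: set = field(default_factory=set)

    def approve(self, party: str) -> None:
        """Record an approval, rejecting parties outside the clean room."""
        if party not in self.collaborators:
            raise ValueError(f"{party} is not a collaborator in this clean room")
        self.approvals.add(party)

    def can_run(self) -> bool:
        """Assumed rule: runnable only once every collaborator has approved."""
        return self.approvals == set(self.collaborators)


nb = ProposedNotebook("audience_overlap", frozenset({"retailer", "media_partner"}))

nb.approve("retailer")
ready_after_one = nb.can_run()   # one approval is still missing

nb.approve("media_partner")
ready_after_all = nb.can_run()   # every collaborator has approved
```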

10. So, it all sounds good. How do I get started?

Here’s a simple way to get started:

- Check that Unity Catalog, Delta Sharing, and serverless compute are enabled in your workspace.

- Create a Clean Room object in your Unity Catalog metastore and invite your partners with their sharing identifiers.

- Each party adds the data assets and notebooks they want to collaborate on.

- Once everyone approves the notebook, run your analysis and review the output in your metastore.

Watch this video to learn more about building clean rooms and getting started.