Google has published T5Gemma 2, an open family of encoder-decoder transformer checkpoints built by adapting Gemma 3 pre-trained weights into an encoder-decoder layout, then continuing pre-training with the UL2 objective. The release is pre-trained only, intended to give developers a base to post-train for specific tasks, and Google clearly notes that it is not releasing post-trained or IT checkpoints for this drop.

T5Gemma 2 is positioned as an encoder-decoder counterpart to Gemma 3 that keeps the same low-level building blocks, then adds 2 structural changes aimed at small-model efficiency. The models inherit the Gemma 3 features that matter most for deployment: multimodality, long context up to 128K tokens, and broad multilingual coverage, with the blog citing more than 140 languages.

What did Google actually release?

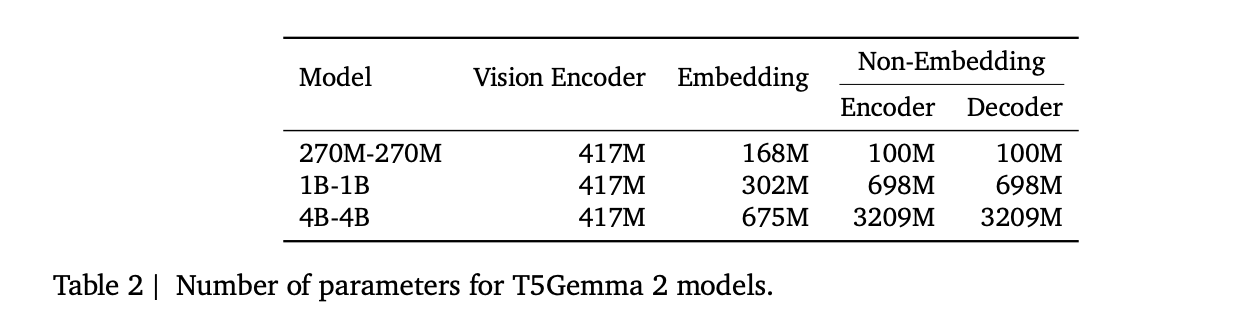

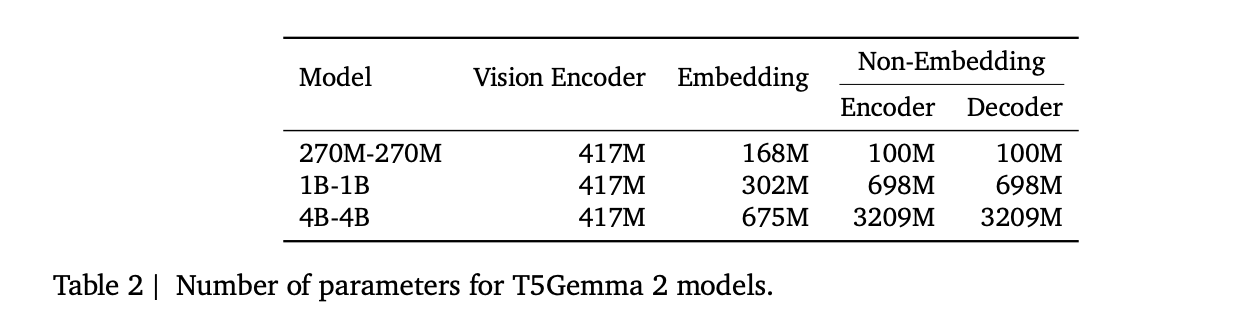

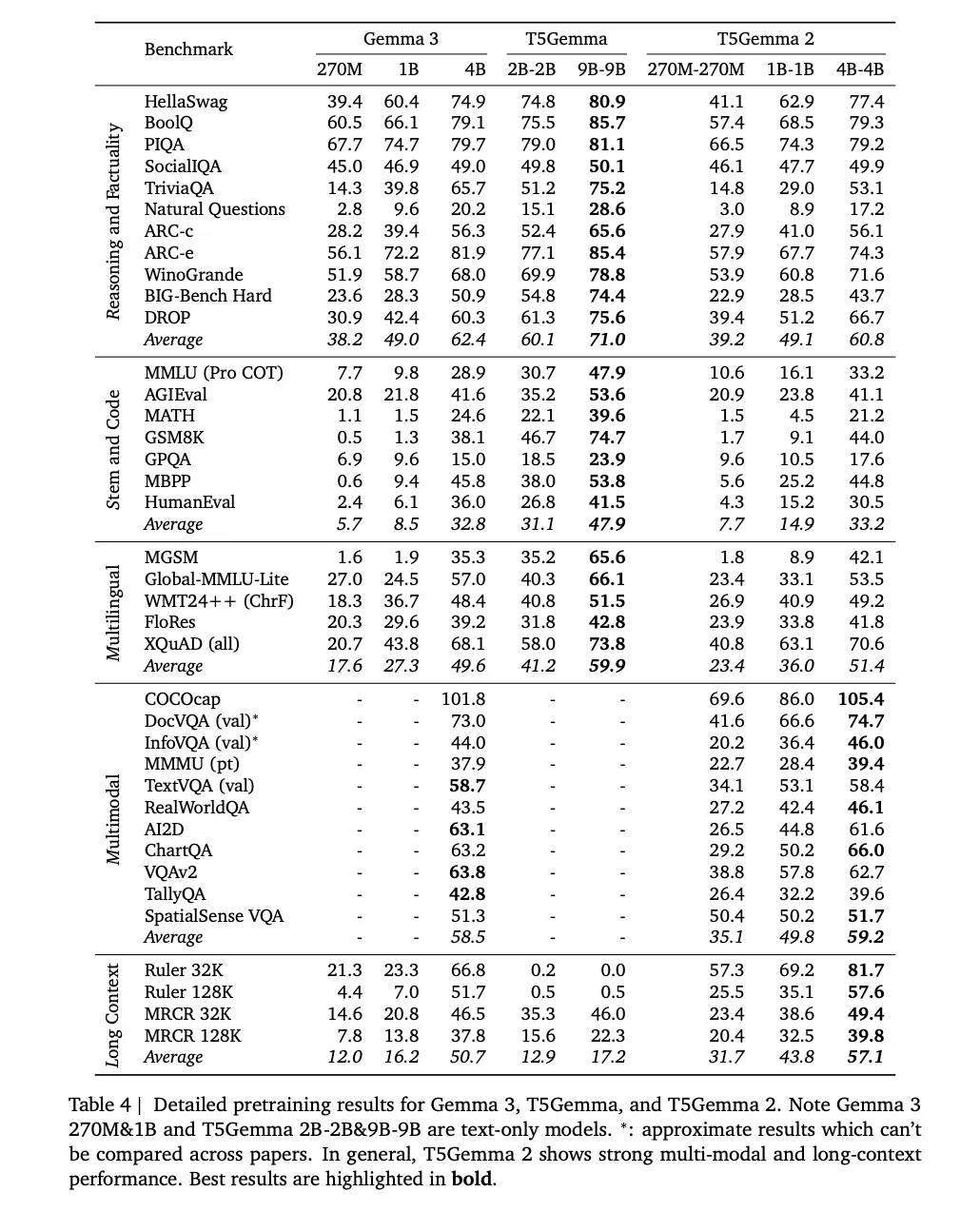

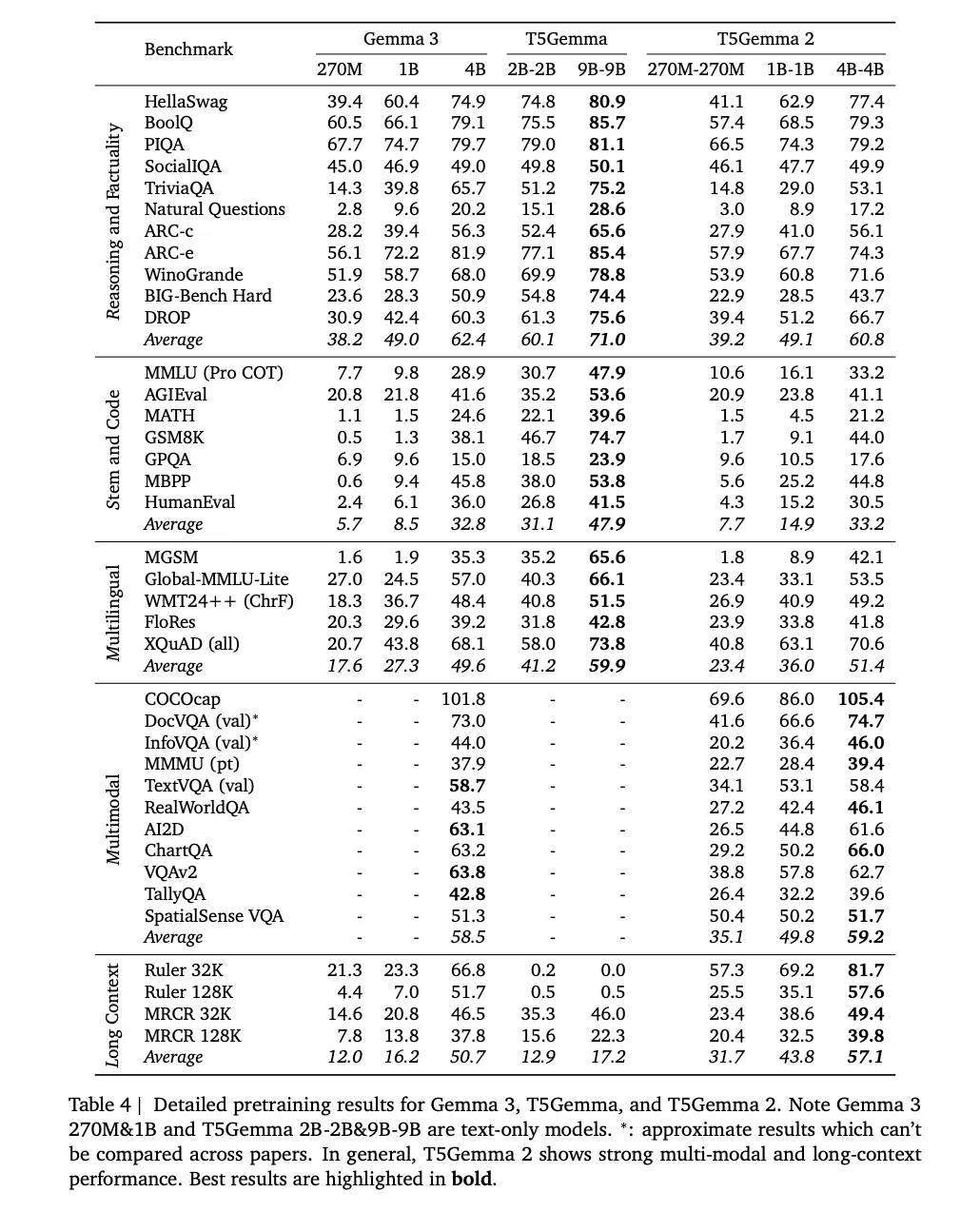

The release includes 3 pre-trained sizes, 270M-270M, 1B-1B, and 4B-4B, where the notation means that the encoder and decoder are the same size. The research team reports approximate totals, excluding the vision encoder, of about 370M, 1.7B, and 7B parameters. The multimodal accounting lists a 417M parameter vision encoder, alongside encoder and decoder parameters broken down into embedding and non-embedding components.
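As a rough way to read these numbers, the sketch below adds the 417M vision encoder to each reported total and estimates weight-only memory in bfloat16 (2 bytes per parameter). The constant and function names are hypothetical, and the inputs are the approximate totals quoted above, so treat the result as a back-of-the-envelope figure rather than a measured footprint.

```python
# Approximate parameter totals quoted in the article (excluding the vision encoder).
TEXT_PARAMS = {"270M-270M": 370e6, "1B-1B": 1.7e9, "4B-4B": 7e9}
VISION_ENCODER_PARAMS = 417e6  # vision encoder size quoted in the article
BYTES_PER_PARAM_BF16 = 2       # bfloat16 stores each weight in 2 bytes


def weight_footprint_gb(text_params: float, include_vision: bool = True) -> float:
    """Weight-only memory in GB (10^9 bytes); ignores activations and KV cache."""
    total = text_params + (VISION_ENCODER_PARAMS if include_vision else 0.0)
    return total * BYTES_PER_PARAM_BF16 / 1e9


for name, params in TEXT_PARAMS.items():
    print(f"{name}: ~{weight_footprint_gb(params):.2f} GB of weights in bfloat16")
```

Activations, the KV cache, and any optimizer state used during post-training come on top of this.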

Encoder-decoder adaptation without training from scratch

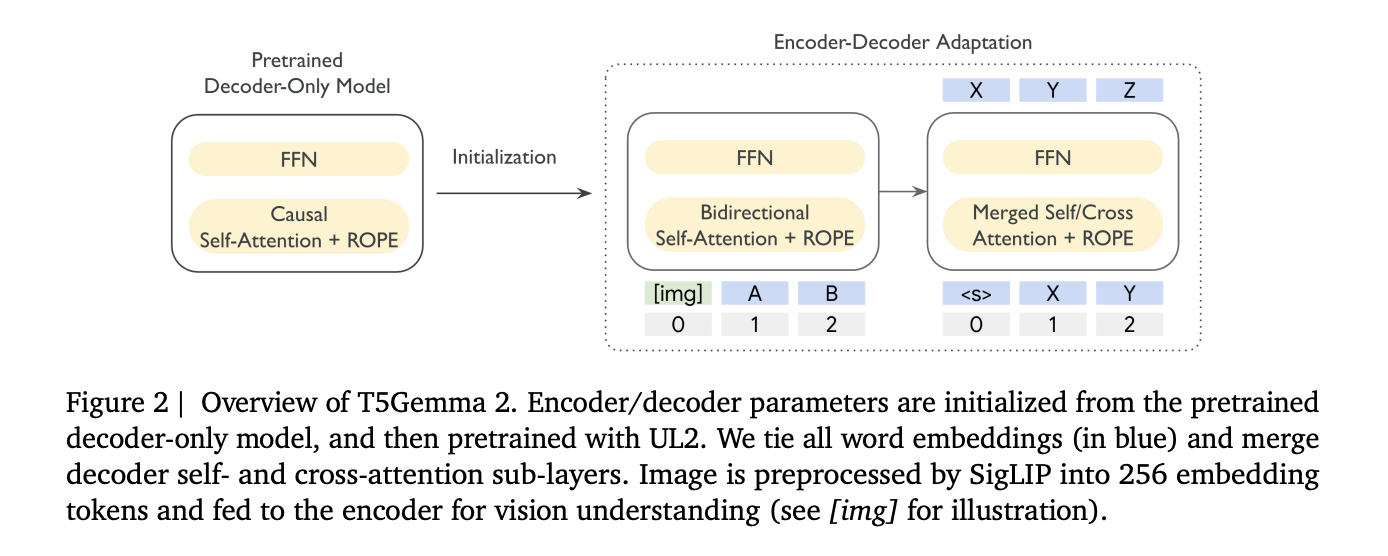

T5Gemma 2 follows the same adaptation idea introduced in T5Gemma: initialize an encoder-decoder model from a decoder-only checkpoint, then adapt it with UL2. In the figure above, the research team initializes both the encoder and decoder parameters from the pre-trained decoder-only model, then pre-trains with UL2, with images first converted into 256 tokens by SigLIP.

This matters because the encoder-decoder layout splits the workload: the encoder can read the full input bidirectionally, while the decoder focuses on autoregressive generation. The research team argues that this separation can help on long context tasks, where the model must retrieve relevant evidence from a large input before generating.
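To make the UL2-style objective concrete, here is a toy sketch of two denoising formats that UL2 mixes: span corruption and a prefix-LM split. It only illustrates how an encoder input and a decoder target are paired. The function names, the hand-picked spans, and the T5-style `<extra_id_N>` sentinel names are assumptions, not details taken from the T5Gemma 2 release.

```python
from typing import List, Tuple


def span_corrupt(tokens: List[str], spans: List[Tuple[int, int]]) -> Tuple[List[str], List[str]]:
    """Replace each [start, end) span with a sentinel; the target spells the spans out."""
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for i, (start, end) in enumerate(sorted(spans)):
        sentinel = f"<extra_id_{i}>"  # T5-style sentinel name, an assumption here
        inputs.extend(tokens[cursor:start])
        inputs.append(sentinel)
        targets.append(sentinel)
        targets.extend(tokens[start:end])
        cursor = end
    inputs.extend(tokens[cursor:])
    return inputs, targets


def prefix_lm(tokens: List[str], split: int) -> Tuple[List[str], List[str]]:
    """Sequential denoising: the encoder sees a prefix, the decoder writes the rest."""
    return tokens[:split], tokens[split:]


words = "the encoder reads the full input before the decoder writes".split()
enc_in, dec_out = span_corrupt(words, [(1, 3), (6, 7)])
print(enc_in)   # encoder input with sentinels in place of the masked spans
print(dec_out)  # decoder target: each sentinel followed by the tokens it hides
```

In real training the spans are sampled at random with different lengths and corruption rates per denoiser; fixed spans are used here only to keep the example readable.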

Two efficiency changes that are easy to miss but affect smaller models

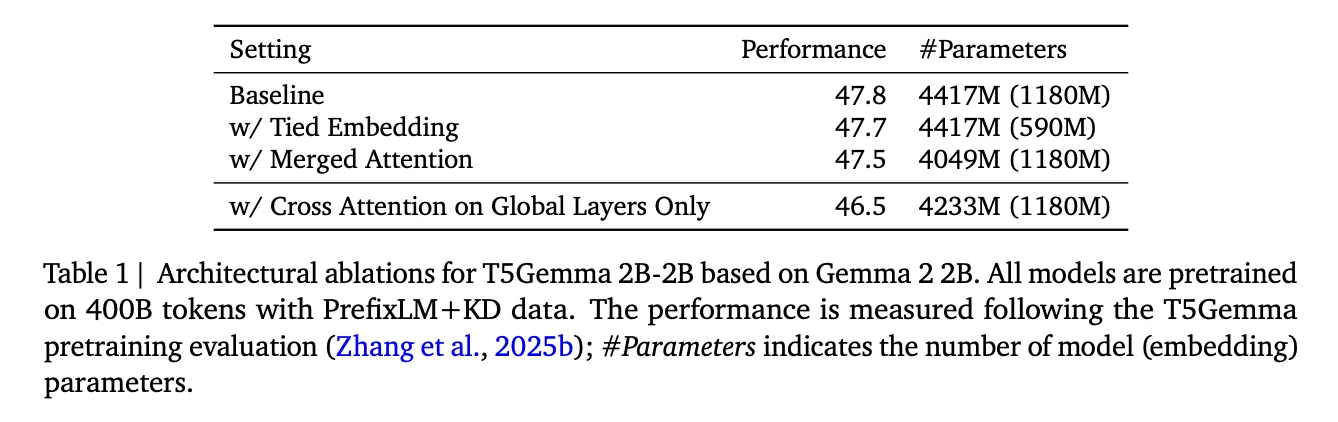

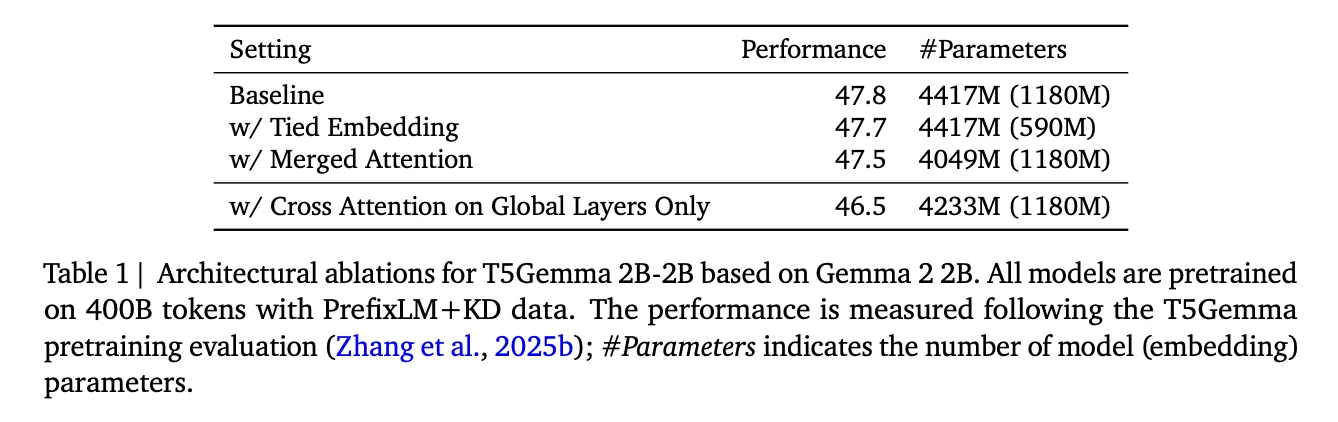

First, T5Gemma 2 uses tied word embeddings across the encoder input embedding, the decoder input embedding, and the decoder output or softmax embedding. This reduces parameter redundancy, and the team points to an ablation showing little quality change while cutting embedding parameters.
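The saving from tying is simple arithmetic, sketched below against a hypothetical fully untied baseline with three separate tables. The vocabulary size and hidden width are illustrative assumptions, not figures from the article, and the function name is made up for this example.

```python
def embedding_params(vocab_size: int, d_model: int, tied: bool) -> int:
    """Count parameters held in the three embedding roles named in the article."""
    one_table = vocab_size * d_model
    # Roles: encoder input, decoder input, decoder output (softmax) embedding.
    return one_table if tied else 3 * one_table


VOCAB_SIZE = 262_144  # assumed vocabulary size, for illustration only
D_MODEL = 640         # assumed hidden width, for illustration only

untied = embedding_params(VOCAB_SIZE, D_MODEL, tied=False)
tied = embedding_params(VOCAB_SIZE, D_MODEL, tied=True)
print(f"untied: {untied / 1e6:.1f}M, tied: {tied / 1e6:.1f}M, saved: {(untied - tied) / 1e6:.1f}M")
```

With a large vocabulary and a narrow hidden size, embedding tables can rival the rest of the network, which is why this change matters most at the small end of the family.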

Second, it introduces merged attention in the decoder. Instead of separate self-attention and cross-attention sublayers, the decoder performs a single attention operation in which K and V are formed by concatenating the encoder outputs and the decoder states, and masking preserves causal visibility for decoder tokens. This ties into easier initialization, because it narrows the differences between the adapted decoder and the original Gemma-style decoder stack, and the team reports parameter savings with a small average quality drop in their ablations.
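A minimal single-head sketch of the merged attention idea follows, assuming the mask lets every decoder query see all encoder positions plus decoder positions up to its own. Learned Q/K/V projections, multiple heads, and positional encodings are left out, and all function names are hypothetical, so this shows the masking and concatenation pattern rather than the actual implementation.

```python
import math
from typing import List

Vector = List[float]


def merged_attention_mask(num_enc: int, num_dec: int) -> List[List[bool]]:
    """mask[t][j] is True when decoder query t may attend to key j.

    Keys are laid out as [encoder outputs | decoder states].
    """
    mask = []
    for t in range(num_dec):
        enc_part = [True] * num_enc                  # all encoder positions visible
        dec_part = [j <= t for j in range(num_dec)]  # causal over decoder positions
        mask.append(enc_part + dec_part)
    return mask


def merged_attention(q: List[Vector], enc: List[Vector], dec: List[Vector]) -> List[Vector]:
    """One softmax over concatenated keys/values instead of two sublayers."""
    keys = enc + dec    # learned K/V projections are omitted for brevity,
    values = enc + dec  # so keys and values are the raw concatenated states
    mask = merged_attention_mask(len(enc), len(dec))
    scale = 1.0 / math.sqrt(len(q[0]))
    outputs = []
    for t, query in enumerate(q):
        scores = [
            scale * sum(a * b for a, b in zip(query, k)) if mask[t][j] else float("-inf")
            for j, k in enumerate(keys)
        ]
        peak = max(scores)
        weights = [math.exp(s - peak) for s in scores]  # masked keys get weight 0.0
        total = sum(weights)
        outputs.append([
            sum(w / total * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs


enc_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
dec_states = [[0.5, 0.5], [1.0, -1.0]]
out = merged_attention(dec_states, enc_states, dec_states)
print(merged_attention_mask(3, 2))
```

Because the decoder block keeps a single attention module, its shape stays close to a decoder-only layer, which is the property the article links to easier initialization from Gemma 3 weights.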

Multimodality, image understanding is on the encoder side, not the decoder side

T5Gemma 2 is multimodal by reusing Gemma 3's vision encoder and keeping it frozen during training. Vision tokens are always fed to the encoder, and encoder tokens have full visibility to each other in self-attention. This is a practical encoder-decoder design: the encoder fuses image tokens with text tokens into contextualized representations, and the decoder then attends to those representations while generating text.

On the tooling side, T5Gemma 2 is placed under an image-to-text pipeline, which matches the research design: image in, text prompt in, text out. The pipeline example is the fastest way to validate the end-to-end multimodal path, including dtype options like bfloat16 and automatic device mapping.

128K long context, what makes it possible

Google researchers attribute the 128K context window to Gemma 3's alternating local and global attention mechanism. The Gemma 3 team describes a repeating 5 to 1 pattern, 5 local sliding window attention layers followed by 1 global attention layer, with a local window size of 1024. This design reduces KV cache growth relative to making every layer global, which is one reason long context becomes feasible at smaller footprints.
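The effect on cache size can be estimated by counting how many token positions each layer has to keep. The sketch below assumes a local layer caches at most its window of 1024 positions while a global layer caches the whole sequence. The layer count is a hypothetical value chosen for illustration, and per-layer head counts and dimensions are ignored.

```python
def cached_tokens(num_layers: int, seq_len: int, window: int = 1024,
                  local_per_global: int = 5) -> int:
    """Total token positions held in the KV cache, summed over layers."""
    period = local_per_global + 1
    total = 0
    for layer in range(num_layers):
        is_global = (layer + 1) % period == 0  # every 6th layer is global
        total += seq_len if is_global else min(seq_len, window)
    return total


NUM_LAYERS = 18       # hypothetical depth, chosen only for illustration
SEQ_LEN = 128 * 1024  # 128K-token context

interleaved = cached_tokens(NUM_LAYERS, SEQ_LEN)
all_global = NUM_LAYERS * SEQ_LEN
print(f"interleaved / all-global = {interleaved / all_global:.3f}")
```

At short sequence lengths the two layouts cost the same; the gap only opens once the sequence is longer than the local window.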

The T5Gemma 2 research team also mentions adopting positional interpolation methods for long context. They pre-train on sequences of up to 16K input tokens paired with 16K target output tokens, then evaluate long context performance up to 128K on benchmarks including RULER and MRCR. The detailed pre-training results table includes 32K and 128K evaluations, showing the long context gains they claim over Gemma 3 at the same scale.
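The article does not say which interpolation variant is used, so the sketch below shows only the generic linear form: positions beyond the trained length are rescaled into the trained range before rotary (RoPE) angles are computed. The use of RoPE, the base of 10,000, the head dimension, and the function names are all assumptions for illustration.

```python
import math
from typing import List


def rope_angles(position: float, head_dim: int, base: float = 10_000.0) -> List[float]:
    """Rotation angles RoPE assigns to one position (one angle per dimension pair)."""
    return [position / base ** (2 * i / head_dim) for i in range(head_dim // 2)]


def interpolate_position(position: int, trained_len: int, target_len: int) -> float:
    """Linearly squeeze positions from [0, target_len) into [0, trained_len)."""
    scale = min(1.0, trained_len / target_len)
    return position * scale


TRAINED_LEN = 16 * 1024   # 16K, the pre-training input length from the article
TARGET_LEN = 128 * 1024   # 128K, the evaluation length from the article

pos = interpolate_position(TARGET_LEN - 1, TRAINED_LEN, TARGET_LEN)
angles = rope_angles(pos, head_dim=8)
print(f"position {TARGET_LEN - 1} is treated as {pos:.3f}")
```

The trade-off is that nearby tokens end up closer together in position space, which is why interpolation is typically paired with some training or evaluation at the longer lengths.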

Training setup and what “pre-trained only” means for users

The research team says the models were pre-trained on 2T tokens and describes a training setup that includes a batch size of 4.2M tokens, cosine learning rate decay with 100 warmup steps, global gradient clipping at 1.0, and checkpoint averaging over the last 5 checkpoints.
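The three ingredients named above can be sketched in a few lines of plain Python. The token budget, batch size, warmup length, and clip threshold come from the article; the peak learning rate, the linear warmup shape, the decay-to-zero floor, and all function names are assumptions, since the article does not specify them.

```python
import math
from typing import Dict, List

TOTAL_TOKENS = 2e12       # 2T tokens (from the article)
TOKENS_PER_BATCH = 4.2e6  # 4.2M tokens per batch (from the article)
WARMUP_STEPS = 100        # from the article
CLIP_NORM = 1.0           # from the article
PEAK_LR = 1e-3            # hypothetical; the article gives no learning rate
TOTAL_STEPS = round(TOTAL_TOKENS / TOKENS_PER_BATCH)  # implied step count


def learning_rate(step: int) -> float:
    """Linear warmup for WARMUP_STEPS, then cosine decay to zero."""
    if step < WARMUP_STEPS:
        return PEAK_LR * (step + 1) / WARMUP_STEPS
    progress = (step - WARMUP_STEPS) / max(1, TOTAL_STEPS - WARMUP_STEPS)
    return 0.5 * PEAK_LR * (1.0 + math.cos(math.pi * min(1.0, progress)))


def clip_by_global_norm(grads: List[float], max_norm: float = CLIP_NORM) -> List[float]:
    """Rescale all gradients together when their joint L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grads]


def average_checkpoints(checkpoints: List[Dict[str, float]]) -> Dict[str, float]:
    """Element-wise mean of parameter values across checkpoints."""
    return {k: sum(c[k] for c in checkpoints) / len(checkpoints) for k in checkpoints[0]}


print(f"implied optimizer steps: {TOTAL_STEPS}")
```

Dividing the token budget by the batch size implies roughly 476K optimizer steps, so a 100-step warmup is a very small fraction of the run.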

Key takeaways

- T5Gemma 2 is an encoder-decoder family adapted from Gemma 3 and continued with UL2. It reuses Gemma 3 pre-trained weights, then applies the same UL2-based adaptation recipe used in T5Gemma.

- Google released only pre-trained checkpoints. No post-trained or instruction-tuned variants are included in this drop, so downstream use requires your own post-training and evaluation.

- Multimodal input is handled by a SigLIP vision encoder that outputs 256 image tokens and stays frozen. Those vision tokens go to the encoder, and the decoder produces text.

- Two parameter efficiency changes are central. Tied word embeddings share the encoder, decoder, and output embeddings, and merged attention unifies the decoder's self-attention and cross-attention into a single module.

- Long context up to 128K is enabled by Gemma 3's interleaved attention design. The pattern is 5 local sliding window layers followed by 1 global layer, repeated, with a window size of 1024, and T5Gemma 2 inherits this mechanism.

