NVIDIA has released the Nemotron 3 family of open models as part of a full stack for agentic AI, including model weights, datasets, and reinforcement learning tools. The family has three sizes, Nano, Super and Ultra, and targets multi-agent systems that require long context logic with tight control on estimation costs. Nano has about 30 billion parameters with about 3 billion active per token, Super has about 100 billion parameters with about 10 billion active per token, and Ultra has about 500 billion parameters with 50 billion active per token.

Ideal Family and Target Workload

Nemotron 3 is presented as an efficient open model family for agentic applications. line contains Nano, Super and Ultra modelsEach is tuned for different workload profiles.

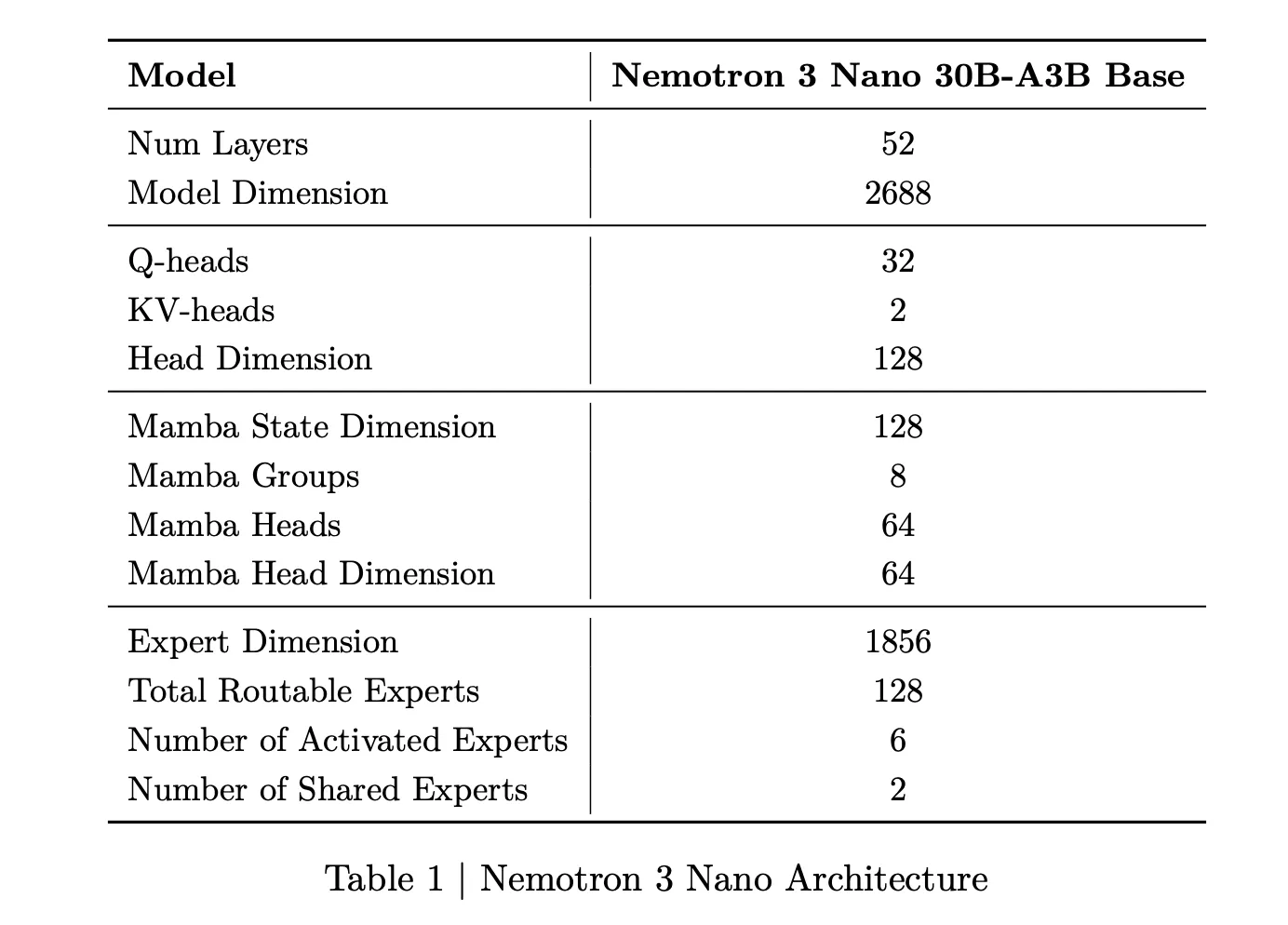

nemotron 3 nano The mixture of experts is a hybrid Mamba Transformer language model with approximately 31.6 billion parameters. Only about 3.2 billion parameters are active per forward pass, or 3.6 billion including embeddings. This allows sparse activation models to maintain high representativeness while keeping computation low.

nemotron 3 super It has approximately 100 billion parameters and up to 10 billion actives per token. The Nemotron 3 Ultra scales this design to approximately 500 billion parameters with up to 50 billion actives per token. Super targets high accuracy logic for large multi agent applications, while Ultra is intended for complex research and planning workflows.

nemotron 3 nano Now available on Hugging Face and as an Nvidia NIM microservice with open weights and recipes. Super and Ultra are scheduled for the first half of 2026.

The NVIDIA Nemotron 3 Nano delivers approximately 4x higher token throughput than the Nemotron 2 Nano and significantly reduces logic token usage, supporting native context lengths of up to 1 million tokens. This combination is for multi-agent systems that work on large scopes such as large documents and large code bases.

Hybrid Mamba Transformer MoE Architecture

The core design of the Nemotron 3 is a blend of the experts’ Hybrid Mamba Transformer architecture. The model combines Mamba sequence blocks, attention blocks, and sparse expert blocks inside a single stack.

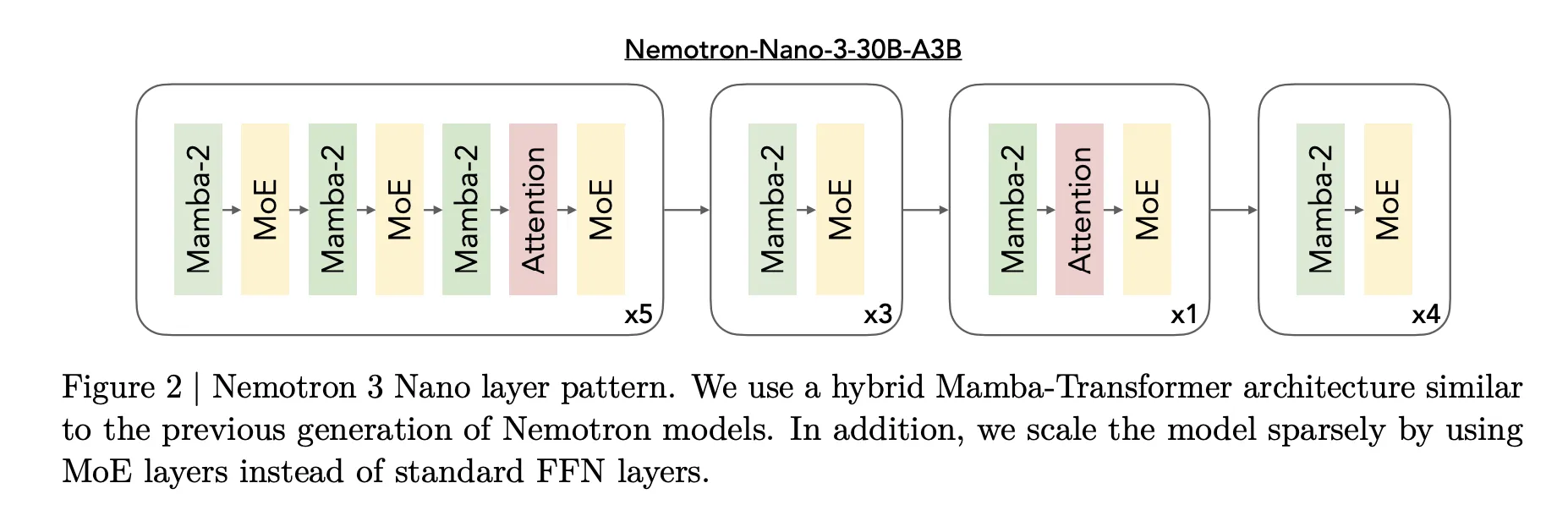

For the Nemotron 3 Nano, the research team describes a pattern that interconnects the Mamba 2 block, the Attention block, and the MOE block. The standard feedforward layers of previous Nemotron generations have been replaced by MoE layers. A learned router selects a smaller subset of experts per token, for example 6 out of 128 routable experts for Nano, which keeps the active parameter count closer to 3.2 billion whereas the full model has 31.6 billion parameters.

Mamba 2 handles long-range sequence modeling with state space style updates, attention layers provide direct token to token interactions for structure sensitive tasks, and MOE provides parameter scaling without proportional compute scaling. The important thing is that most of the layers are either fast sequences or sparse expert calculations, and full attention is used only where it makes the most sense for the logic.

For Nemotron 3 Super and Ultra, NVIDIA has added LatentMoE. Tokens are projected into a lower dimensional latent space, experts operate in that latent space, then the output is projected back. This design allows many times more experts for the same communication and computation cost, supporting greater specialization across tasks and languages.

Super and Ultra also include multi token predictions. Multiple output heads share a common trunk and predict multiple future tokens in a single pass. This improves optimization during training, and predictably enables execution with fewer full forward passes, such as speculative decoding.

Training data, exact format and reference window

Nemotron 3 has been trained on large-scale text and code data. The research team reports pretraining on approximately 25 trillion tokens, with over 3 trillion new unique tokens in the Nemotron 2 generation. Nemotron 3 Nano uses special datasets for scientific and reasoning content in addition to Nemotron Common Crawl v2 Point 1, Nemotron CC Code and Nemotron Pretraining Code v2.

Super and Ultra are trained mostly in NVFP4, a 4 bit floating point format optimized for NVIDIA accelerators. Matrix multiple operations run in NVFP4 while accumulation uses higher precision. This reduces memory pressure and improves throughput while keeping accuracy close to standard formats.

All Nemotron 3 models support reference windows up to 1 million tokens. The architecture and training pipeline are tuned to this length for long horizon logic, which is necessary for multi agent environments that traverse large traces and shared working memory between agents.

key takeaways

- Nemotron 3 is a three-tier open model family for agentic AI: Nemotron 3 comes in Nano, Super and Ultra variants. Nano has about 30 billion parameters with about 3 billion active per token, Super has about 100 billion parameters with about 10 billion active per token, and Ultra has about 500 billion parameters with 50 billion active per token. The family targets multi-agent applications that require efficient long-term context reasoning.

- Hybrid Mamba Transformer MoE with 1 million token reference: The Nemotron 3 models use a hybrid Mamba 2 Plus Transformer architecture with a sparse mix of experts and support a 1 million token reference window. This design provides long context management with high throughput, where only a small subset of experts per token are active and attention is used where it is most useful for the logic.

- Secret MOE and Multi Token Prediction in Super and Ultra: Super and Ultra variants add latent MOE where expert computation occurs in less latent space, which reduces communication costs and allows more experts, and multi-token prediction heads that generate multiple future tokens per forward pass. These changes improve quality and enable speculative style speedups for a series of long text and idea workloads.

- Massive training data for efficiency and NVFP4 precision: With over 3 trillion new tokens compared to the previous generation, Nemotron 3 is pre-trained on approximately 25 trillion tokens, and Super and Ultra are trained primarily in NVFP4, a 4-bit floating point format for NVIDIA GPUs. This combination improves throughput and reduces memory usage while keeping accuracy close to standard precision.

check it out paper, technical blog And Model load on HF, Feel free to check us out GitHub page for tutorials, code, and notebooksAlso, feel free to follow us Twitter And don’t forget to join us 100k+ ml subreddit and subscribe our newsletter,

Asif Razzaq Marktechpost Media Inc. Is the CEO of. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. Their most recent endeavor is the launch of MarketTechPost, an Artificial Intelligence media platform, known for its in-depth coverage of Machine Learning and Deep Learning news that is technically robust and easily understood by a wide audience. The platform boasts of over 2 million monthly views, which shows its popularity among the audience.