Author(s): Ayyub Nainiya

Originally published on Towards AI.

RAG is not a retrieval problem; it is a system design problem. The sooner you treat it as one, the sooner your system will stop falling apart.

If you’ve built your first RAG (Retrieval-Augmented Generation) system, you’ve probably run into the harsh reality: the basic tutorial approach works for demos but falls apart with real documents and real user questions. Your users complain about irrelevant answers, missing information, or responses that confidently cite the wrong source.

I’ve spent this year building and refining RAG systems in production, and I’ve discovered that the difference between a “RAG demo” and “RAG that works” is huge.

In this post, we cover practical strategies that really move the needle.

Problem with Basic RAG

Most RAG tutorials follow a similar pattern:

- Split documents into fixed-size chunks (e.g., 512 tokens)

- Embed the chunks with an off-the-shelf model

- Store them in a vector database

- Retrieve the top-k most similar chunks

- Pass them to the LLM as context

This works for simple cases, but fails when:

- Users ask questions that span multiple sections

- Important context is split across chunk boundaries

- Semantically similar text is not actually relevant

- Documents have complex structure (tables, lists, code)

- You need to retrieve from thousands of documents

The symptoms are familiar: incomplete answers, hallucinations when the correct context is not retrieved, or correct information that is simply missed because it was chunked badly.

Let’s fix these issues systematically.

In this article:

- Part 1: Chunking Strategies (semantic, hierarchical, and hybrid approaches that preserve context)

- Part 2: Retrieval Optimization (hybrid search, reranking, and context expansion)

- Part 3: Evaluation Framework (metrics that actually predict user satisfaction)

- Part 4: Production Best Practices (caching, monitoring, and graceful degradation)

Part 1: Chunking Strategies That Preserve Context

1. Fixed-Size Chunking

Fixed-size chunking (splitting every N tokens) is convenient but destructive. It ignores document structure and cuts sentences in half. Here’s what happens:

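```python
def naive_chunk(text, chunk_size=512):
    # tokenize() is a placeholder for your model's tokenizer
    tokens = tokenize(text)
    # Cut every chunk_size tokens, regardless of sentence or paragraph boundaries
    return [tokens[i:i + chunk_size] for i in range(0, len(tokens), chunk_size)]
```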

The problem: a paragraph explaining a concept gets split in two, and each half loses the context carried by the other.
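To see the boundary problem concretely, here is a tiny self-contained variant. It uses whitespace splitting as a stand-in for a real tokenizer (an assumption for illustration; real tokenizers count subword tokens) and a deliberately small chunk size; `naive_chunk_words` is a hypothetical name:

```python
def naive_chunk_words(text, chunk_size=8):
    """Fixed-size chunking over whitespace 'tokens' (a stand-in for a real tokenizer)."""
    words = text.split()
    # Cut every chunk_size words, ignoring sentence boundaries
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]


text = "Refunds are issued within 30 days. Contact support to start a refund request."
for chunk in naive_chunk_words(text):
    print(repr(chunk))
```

The second sentence is cut after "Contact support", so the chunk that says how to start a refund no longer says what it is about.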

2. Strategy 1: Semantic Chunking

Split on semantic boundaries (paragraphs, sections) while respecting size limits:

```python
def semantic_chunk(text, max_tokens=512, overlap=50):
    # tokenize() and split_sentences() are placeholders for your tokenizer
    # and sentence splitter; overlap is measured in tokens
    chunks = []
    current_chunk = []
    current_size = 0

    # Split on double newlines (paragraphs)
    paragraphs = text.split('\n\n')

    for para in paragraphs:
        para_size = len(tokenize(para))

        if para_size > max_tokens:
            # Paragraph is too large: split it into sentences
            for sent in split_sentences(para):
                sent_size = len(tokenize(sent))
                if current_chunk and current_size + sent_size > max_tokens:
                    # Save the current chunk
                    chunks.append(' '.join(current_chunk))
                    # Carry the last few sentences (up to `overlap` tokens)
                    # into the next chunk for context
                    carried, carried_size = [], 0
                    for s in reversed(current_chunk):
                        s_size = len(tokenize(s))
                        if carried_size + s_size > overlap:
                            break
                        carried.insert(0, s)
                        carried_size += s_size
                    current_chunk, current_size = carried, carried_size
                current_chunk.append(sent)
                current_size += sent_size
        else:
            # Add the paragraph, starting a new chunk if it doesn't fit
            if current_chunk and current_size + para_size > max_tokens:
                chunks.append(' '.join(current_chunk))
                current_chunk, current_size = [para], para_size
            else:
                current_chunk.append(para)
                current_size += para_size

    if current_chunk:
        chunks.append(' '.join(current_chunk))
    return chunks
```

Key improvements:

- Preserves paragraph structure

- Adds overlap between chunks when a long paragraph has to be split (important for boundary cases)

- Respects sentence boundaries

Why it works: Preserving paragraph boundaries maintains the logical flow of ideas. The overlap between chunks matters because it ensures that concepts spanning a boundary are captured in both chunks. In my testing, this improved retrieval accuracy by about 30% compared to fixed-size chunking.

Key insight: Text is not just a stream of tokens. It has structure, and that structure carries meaning.
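The `semantic_chunk` function relies on `tokenize` and `split_sentences`, which the article doesn’t define. Here is a minimal sketch of both, assuming whitespace tokenization and a regex sentence splitter. In practice you would use your embedding model’s tokenizer (whitespace counts usually come out lower than subword token counts, so chunks can exceed `max_tokens` by a real tokenizer’s measure) and a proper sentence segmenter:

```python
import re

# Split after ., ! or ? when followed by whitespace
_SENTENCE_BOUNDARY = re.compile(r"(?<=[.!?])\s+")


def tokenize(text):
    """Whitespace tokenizer: a rough stand-in for a real subword tokenizer."""
    return text.split()


def split_sentences(text):
    """Naive splitter; it will also break after abbreviations such as 'e.g. '."""
    return [s for s in _SENTENCE_BOUNDARY.split(text.strip()) if s]


print(split_sentences("Chunk on paragraphs. Fall back to sentences! Why? Size."))
```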

3. Strategy 2: Document Structure-Aware Chunking

For structured documents, preserve the hierarchy:

```python
def hierarchical_chunk(document):
    # Assumes document.sections, where each section has .title, .paragraphs
    # (objects with .text), an optional .parent section, and .get_path()
    chunks = []
    for section in document.sections:
        section_header = f"# {section.title}\n"
        # Include parent context
        parent_context = ""
        if section.parent:
            parent_context = f"(From {section.parent.title})\n"

        for para in section.paragraphs:
            chunk = {
                'text': para.text,
                'metadata': {
                    'section': section.title,
                    'parent': section.parent.title if section.parent else None,
                    'header_context': parent_context + section_header,
                    'full_path': section.get_path()  # e.g., "Chapter 3 > Section 3.2"
                }
            }
            chunks.append(chunk)
    return chunks
```

Why it matters: At retrieval time, you can include section headers and the hierarchical path, helping the LLM understand where the information fits in the document’s structure.

In practice, this metadata becomes part of the context you feed to the LLM, giving it a sense of where each passage sits within the document.
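`hierarchical_chunk` assumes each section can report its path via `get_path()`. Below is a minimal sketch of such a section type, plus one way to prepend the path to a chunk before it goes into the prompt. `Section` and `format_for_prompt` are hypothetical names for illustration, and the `[path]` prefix format is an assumption, not a requirement:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Section:
    """Hypothetical section node: a title plus an optional parent section."""
    title: str
    parent: Optional["Section"] = None

    def get_path(self) -> str:
        # Walk up to the root, then join titles root-first
        titles = []
        node = self
        while node is not None:
            titles.append(node.title)
            node = node.parent
        return " > ".join(reversed(titles))


def format_for_prompt(chunk_text: str, section: Section) -> str:
    """Prefix a chunk with its location in the document."""
    return f"[{section.get_path()}]\n{chunk_text}"


chapter = Section("Chapter 3")
refunds = Section("Section 3.2", parent=chapter)
print(format_for_prompt("Refunds are issued within 30 days.", refunds))
```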

4. Strategy 3: Hybrid Chunking for Complex Documents

Real-world documents are messy. They include tables, code snippets, diagrams, and mixed content. Each content type needs different handling.

```python
def hybrid_chunk(document):
    # Assumes document.elements, each with .type, .metadata and type-specific
    # fields (caption/to_markdown(), language/code/description, or text)
    chunks = []
    for element in document.elements:
        if element.type == 'table':
            # Keep tables intact, add descriptive text
            chunk = {
                'text': f"Table: {element.caption}\n{element.to_markdown()}",
                'type': 'table',
                'metadata': element.metadata
            }
            chunks.append(chunk)
        elif element.type == 'code':
            # Code blocks with context
            chunk = {
                'text': f"```{element.language}\n{element.code}\n```\n{element.description}",
                'type': 'code',
                'metadata': element.metadata
            }
            chunks.append(chunk)
        elif element.type == 'text':
            # Use semantic chunking for regular text
            text_chunks = semantic_chunk(element.text)
            chunks.extend({'text': t, 'type': 'text'} for t in text_chunks)
    return chunks
```

Why different strategies matter: Tables lose their meaning when split. Code without context is useless. Regular paragraphs, on the other hand, benefit from semantic chunking. Treating each content type appropriately preserves information density.

I learned this the hard way when users kept complaining that “the pricing table is incomplete”. It turned out we were splitting tables into fragments, making them incomprehensible.
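The hybrid chunker calls `element.to_markdown()` to keep a table in one piece. Assuming a table arrives as a header row plus data rows (a hypothetical representation; PDF and HTML parsers each have their own), a minimal version looks like this. `table_to_markdown` and `table_chunk` are illustrative names, and the table values are toy data:

```python
def table_to_markdown(header, rows):
    """Render a whole table as one Markdown string, so it becomes one chunk."""
    lines = [
        "| " + " | ".join(str(h) for h in header) + " |",
        "| " + " | ".join("---" for _ in header) + " |",
    ]
    for row in rows:
        lines.append("| " + " | ".join(str(cell) for cell in row) + " |")
    return "\n".join(lines)


def table_chunk(caption, header, rows):
    """Caption and table together, in the same 'Table: ...' format as hybrid_chunk."""
    return {"text": f"Table: {caption}\n{table_to_markdown(header, rows)}", "type": "table"}


print(table_chunk("Pricing", ["Plan", "Price"], [["Basic", 10], ["Pro", 30]])["text"])
```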

Part 2: Retrieval Optimization

1. The Top-K Problem

Simply retrieving the top-k most similar chunks often fails because:

- Semantic similarity does not equal relevance

- You miss important context from surrounding chunks

- Similar but irrelevant chunks rank higher

2. Strategy 1: Hybrid Search

Combining vector similarity with keyword search dramatically improves retrieval quality. Vector search captures semantic meaning, while BM25 captures exact term matches.

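```python
def hybrid_search(query, vector_db, bm25_index, alpha=0.5, top_k=10):
    """Combine semantic and keyword search.

    vector_db and bm25_index are assumed to expose search(query, top_k=...)
    returning results with .id and .score.
    """
    def normalize(scores):
        # Min-max normalize to 0-1 so cosine and BM25 scores are comparable
        if not scores:
            return {}
        lo, hi = min(scores.values()), max(scores.values())
        if hi == lo:
            return {k: 1.0 for k in scores}
        return {k: (s - lo) / (hi - lo) for k, s in scores.items()}

    # Vector search
    vector_results = vector_db.search(query, top_k=20)
    vector_scores = normalize({doc.id: doc.score for doc in vector_results})

    # BM25 keyword search
    bm25_results = bm25_index.search(query, top_k=20)
    bm25_scores = normalize({doc.id: doc.score for doc in bm25_results})

    # Combine scores; a doc missing from one list gets 0 on that side
    all_doc_ids = set(vector_scores) | set(bm25_scores)
    combined_scores = {}
    for doc_id in all_doc_ids:
        v_score = vector_scores.get(doc_id, 0.0)
        k_score = bm25_scores.get(doc_id, 0.0)
        # Weighted combination
        combined_scores[doc_id] = alpha * v_score + (1 - alpha) * k_score

    # Sort and return top k
    ranked = sorted(combined_scores.items(), key=lambda x: x[1], reverse=True)
    return [doc_id for doc_id, score in ranked[:top_k]]
```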

When to use it: queries containing specific terms (product names, technical jargon) benefit from keyword matching.

The alpha parameter sets the balance between the two. I’ve found that 0.5 works well for general content, while technical documents with a lot of specialized terminology do better leaning toward BM25 (alpha = 0.3).
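To see how alpha shifts the ranking, here is a self-contained sketch of the weighted fusion on made-up scores. It min-max normalizes each score set first, because raw BM25 scores are unbounded while cosine similarities are not; the normalization choice, the function names, and the toy numbers are assumptions for illustration:

```python
def min_max(scores):
    """Rescale a {doc_id: score} dict to the 0-1 range."""
    if not scores:
        return {}
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {d: 1.0 for d in scores}
    return {d: (s - lo) / (hi - lo) for d, s in scores.items()}


def fuse(vector_scores, bm25_scores, alpha=0.5):
    """Weighted sum of normalized scores; alpha weights the vector side."""
    v, k = min_max(vector_scores), min_max(bm25_scores)
    fused = {d: alpha * v.get(d, 0.0) + (1 - alpha) * k.get(d, 0.0) for d in set(v) | set(k)}
    return sorted(fused, key=fused.get, reverse=True)


vector_scores = {"a": 0.9, "b": 0.6, "c": 0.3}  # cosine similarities (toy values)
bm25_scores = {"a": 1.0, "b": 7.0, "c": 1.0}    # raw BM25 scores (toy values)

for alpha in (0.7, 0.3):
    print(alpha, fuse(vector_scores, bm25_scores, alpha))
```

Document "b" has the strongest keyword match but a middling vector score; it overtakes "a" once alpha drops to 0.3 and BM25 carries more of the weight.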

3. Strategy 2: Query Expansion

Rephrase the user’s query to improve retrieval:

```python
import json

def expand_query(query, llm, vector_db):
    """Generate alternative phrasings and search with all of them."""
    prompt = f"""Given this question: "{query}"
Generate 3 alternative ways to phrase this question that might help find relevant information:
1. A more specific version
2. A more general version
3. Using different terminology
Return a JSON list of strings only."""

    # llm.generate is assumed to return the raw completion text;
    # production code needs more forgiving parsing than json.loads
    alternatives = json.loads(llm.generate(prompt))

    # Search with all query variants
    all_results = []
    for q in [query] + alternatives:
        results = vector_db.search(q, top_k=5)
        all_results.extend(results)

    # Deduplicate and rerank (helper not shown)
    return deduplicate_and_rerank(all_results)
```

Tip: Don’t just pick the best alternative. Use all of them to cast a wider net, then deduplicate and rerank. This captures relevant documents that use different terminology from the user’s. The downside is latency (multiple searches), so use it selectively for complex queries.
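`expand_query` ends by calling `deduplicate_and_rerank`, which isn’t shown. Here is one simple policy, sketched under the assumption that every result carries an `id` and a similarity `score` produced by the same embedding model: keep each chunk once with its best score across query variants, then sort. The `Result` type is hypothetical. Passing the survivors through a cross-encoder, as in the reranking strategy, is a stronger alternative:

```python
from collections import namedtuple

# Hypothetical result type: chunk id plus similarity score
Result = namedtuple("Result", ["id", "score"])


def deduplicate_and_rerank(results, top_k=5):
    """Keep each chunk once, with its best score across query variants."""
    best = {}
    for r in results:
        if r.id not in best or r.score > best[r.id].score:
            best[r.id] = r
    # Highest score first
    return sorted(best.values(), key=lambda r: r.score, reverse=True)[:top_k]


results = [Result("c1", 0.41), Result("c2", 0.90), Result("c1", 0.82), Result("c3", 0.55)]
print(deduplicate_and_rerank(results, top_k=2))
```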

4. Strategy 3: Reranking

Use a cross-encoder to rerank the retrieved chunks:

```python
from sentence_transformers import CrossEncoder

# Load once and reuse; loading the model on every call is slow
reranker = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')

def rerank_results(query, chunks, top_k=5):
    """Rerank with a cross-encoder for better relevance."""
    # Create query-chunk pairs
    pairs = [(query, chunk.text) for chunk in chunks]
    # Score all pairs in one batch (returns a NumPy array of scores)
    scores = reranker.predict(pairs)
    # Sort by score, highest first
    ranked_indices = scores.argsort()[::-1][:top_k]
    return [chunks[i] for i in ranked_indices]
```

Why it works: A cross-encoder reads the query and the chunk together, catching relevance signals that bi-encoders (which embed each one separately) miss.
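Because a cross-encoder scores each (query, chunk) pair jointly, reranking reduces to "score every pair, sort, keep the top k". The sketch below makes the scorer a parameter so the pipeline can be exercised without downloading a model. `overlap_score` is a toy lexical stand-in, not a cross-encoder, and both function names are hypothetical:

```python
def rerank(query, chunks, score_pair, top_k=5):
    """Score every (query, chunk) pair with score_pair and keep the best top_k."""
    scored = [(score_pair(query, chunk), chunk) for chunk in chunks]
    # Sort on the score only, highest first (stable for ties)
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_k]]


def overlap_score(query, text):
    """Toy stand-in scorer: fraction of query words that appear in the text."""
    q_words = set(query.lower().split())
    if not q_words:
        return 0.0
    return len(q_words & set(text.lower().split())) / len(q_words)


chunks = ["shipping takes 5 days", "refund window is 30 days", "office hours"]
print(rerank("refund days", chunks, overlap_score, top_k=2))
```

With the sentence-transformers model loaded as `model`, a real scorer would be `lambda q, t: float(model.predict([(q, t)])[0])`, although batching all pairs into a single `predict` call, as `rerank_results` does, is much faster.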

5. Strategy 4: Context Expansion

Retrieve the chunks surrounding the top results:

```python
def retrieve_with_context(query, vector_db, window=1):
    """Retrieve chunks plus surrounding context.

    get_chunk(doc_id, index) and deduplicate_by_position(chunks) are helpers
    you provide; chunk metadata must record document_id and chunk_index.
    """
    # Initial retrieval
    top_chunks = vector_db.search(query, top_k=5)

    # Expand to include neighbors
    expanded_chunks = []
    for chunk in top_chunks:
        # Get chunk position in document
        doc_id = chunk.metadata['document_id']
        chunk_idx = chunk.metadata['chunk_index']
        # Retrieve surrounding chunks (get_chunk returns None when out of range)
        for i in range(chunk_idx - window, chunk_idx + window + 1):
            neighbor = get_chunk(doc_id, i)
            if neighbor:
                expanded_chunks.append(neighbor)

    # Deduplicate and maintain document order
    return deduplicate_by_position(expanded_chunks)
```

This prevents information loss when the relevant context is split across neighboring chunks.
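`retrieve_with_context` depends on `get_chunk` and `deduplicate_by_position`, which aren’t shown. Here is a self-contained sketch of the same idea, assuming chunks are stored in a dict keyed by `(document_id, chunk_index)`; the store layout and the function name are hypothetical:

```python
def expand_with_neighbors(hits, store, window=1):
    """hits: (doc_id, chunk_index) positions from the initial search.
    store: {(doc_id, chunk_index): chunk_text} for every indexed chunk."""
    positions = set()
    for doc_id, idx in hits:
        for i in range(idx - window, idx + window + 1):
            # Skip positions before the start or past the end of a document
            if (doc_id, i) in store:
                positions.add((doc_id, i))
    # The set removes duplicates; sorting restores reading order within each document
    return [store[pos] for pos in sorted(positions)]


store = {("d1", 0): "A", ("d1", 1): "B", ("d1", 2): "C", ("d1", 3): "D", ("d2", 0): "X"}
print(expand_with_neighbors([("d1", 2), ("d1", 1), ("d2", 0)], store))
```

Two overlapping hits in `d1` expand to one contiguous, ordered run of chunks rather than duplicated fragments.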

Part 3: Evaluation That Really Matters

1. Beyond Cosine Similarity

Most teams ask, “Is the right chunk ranked highest?” But users care about “Did I get the right answer?”

2. Evaluation Framework

```python
class RAGEvaluator:
    def __init__(self, test_cases):
        # List of (query, expected_answer, relevant_doc_ids) tuples
        self.test_cases = test_cases

    def evaluate_retrieval(self, retrieval_fn):
        """Measure retrieval quality."""
        metrics = {
            'recall@k': [],
            'precision@k': [],
            'mrr': []  # Mean Reciprocal Rank
        }
        for query, _, relevant_docs in self.test_cases:
            retrieved = retrieval_fn(query, k=10)
            retrieved_ids = [doc.id for doc in retrieved]

            # Recall: what % of relevant docs were retrieved?
            relevant_retrieved = set(retrieved_ids) & set(relevant_docs)
            recall = len(relevant_retrieved) / len(relevant_docs)
            metrics['recall@k'].append(recall)

            # Precision: what % of retrieved docs were relevant?
            precision = len(relevant_retrieved) / len(retrieved_ids) if retrieved_ids else 0.0
            metrics['precision@k'].append(precision)

            # MRR: reciprocal rank of the first relevant doc
            for rank, doc_id in enumerate(retrieved_ids, 1):
                if doc_id in relevant_docs:
                    metrics['mrr'].append(1.0 / rank)
                    break
            else:
                metrics['mrr'].append(0.0)

        return {k: sum(v) / len(v) for k, v in metrics.items()}

    def evaluate_end_to_end(self, rag_system, llm_judge):
        """Measure answer quality."""
        scores = {
            'correctness': [],
            'completeness': [],
            'faithfulness': []  # Does the answer stick to the retrieved context?
        }
        for query, expected_answer, _ in self.test_cases:
            # Generate answer (assumed to expose .text and .context)
            answer = rag_system.answer(query)

            # LLM-as-judge evaluation
            eval_prompt = f"""Query: {query}
Expected Answer: {expected_answer}
Generated Answer: {answer.text}
Retrieved Context: {answer.context}

Rate the generated answer on:
1. Correctness (0-1): Does it match the expected answer?
2. Completeness (0-1): Does it cover all aspects?
3. Faithfulness (0-1): Is it supported by the retrieved context?
Return JSON with the keys "correctness", "completeness", "faithfulness"."""

            # llm_judge.evaluate is assumed to return a dict of metric -> score
            ratings = llm_judge.evaluate(eval_prompt)
            for metric, score in ratings.items():
                scores[metric].append(score)

        return {k: sum(v) / len(v) for k, v in scores.items()}
```
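To make the three retrieval metrics concrete, here is a small standalone function that computes them for a single query, followed by a worked case with made-up document IDs: 4 chunks retrieved, 3 relevant documents in the ground truth. `retrieval_metrics` is a hypothetical helper, not part of `RAGEvaluator`:

```python
def retrieval_metrics(retrieved_ids, relevant_ids):
    """Recall@k, precision@k and reciprocal rank for one query."""
    relevant = set(relevant_ids)
    hits = set(retrieved_ids) & relevant
    recall = len(hits) / len(relevant) if relevant else 0.0
    precision = len(hits) / len(retrieved_ids) if retrieved_ids else 0.0
    # Reciprocal rank: 1 / position of the first relevant result, 0 if none
    rr = next((1.0 / rank for rank, d in enumerate(retrieved_ids, 1) if d in relevant), 0.0)
    return recall, precision, rr


recall, precision, rr = retrieval_metrics(["d3", "d1", "d7", "d2"], ["d1", "d2", "d9"])
print(f"recall={recall:.2f} precision={precision:.2f} rr={rr:.2f}")
```

Two of the three relevant documents were found (recall 2/3), half of what was retrieved is relevant (precision 2/4), and the first relevant hit sits at rank 2 (reciprocal rank 1/2). MRR is that last number averaged over all test queries.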

3. Key metrics to track

- Retrieval recall@k: Are the relevant documents found?

- Answer accuracy: Does the final answer match the ground truth?

- Faithfulness: Is the answer supported by the retrieved context (no hallucinations)?

- Latency: p50, p95, and p99 response times

- Cost: tokens used per query
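For the latency line item, Python’s standard library is enough to turn logged response times into p50/p95/p99. Here is a minimal sketch using `statistics.quantiles` with the inclusive method. That is one of several percentile conventions, and your monitoring stack may interpolate differently; `latency_percentiles` is a hypothetical name:

```python
import statistics


def latency_percentiles(latencies_ms):
    """p50/p95/p99 of response times; needs at least two samples."""
    # n=100 yields 99 cut points: cuts[49] is p50, cuts[94] is p95, cuts[98] is p99
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}


print(latency_percentiles(list(range(1, 101))))
```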

4. A/B Testing Different Strategies

```python
def compare_strategies(test_queries):
    # baseline_rag, hybrid_rag, etc. and evaluate() stand in for your own
    # pipelines and evaluation function (e.g., built on RAGEvaluator)
    strategies = {
        'baseline': baseline_rag,
        'hybrid_search': hybrid_rag,
        'reranked': reranked_rag,
        'full_pipeline': optimized_rag
    }
    results = {}
    for name, strategy in strategies.items():
        results[name] = evaluate(strategy, test_queries)

    # Compare improvements relative to the baseline
    baseline_score = results['baseline']['correctness']
    for name, metrics in results.items():
        improvement = (metrics['correctness'] - baseline_score) / baseline_score * 100
        print(f"{name}: {metrics['correctness']:.3f} ({improvement:+.1f}%)")
    return results
```

Part 4: Production Best Practices

1. Metadata Filtering

Don’t search the entire corpus for every question:

```python
def filtered_search(query, user_context, vector_db):
    """Filter by metadata before vector search."""
    # Build filter from context; the exact filter syntax depends on your vector DB
    filters = {
        'user_id': user_context.user_id,
        'access_level': user_context.permissions,
        'date_range': user_context.relevant_timeframe
    }
    # Search only the relevant subset
    results = vector_db.search(query, filters=filters, top_k=10)
    return results
```

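Shrinking the search space this way dramatically improves both speed and relevance, and it keeps users from retrieving documents they shouldn’t see.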
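Filter syntax differs across vector databases, so here is the idea in plain Python instead: apply exact-match metadata filters first, then rank only the survivors by cosine similarity. The document layout (`id`, `vec`, `meta` keys) and the name `prefiltered_search` are assumptions for illustration; a real store does both steps inside its index:

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def prefiltered_search(query_vec, docs, filters, top_k=10):
    """Drop docs whose metadata doesn't match every filter, then rank the rest."""
    candidates = [
        d for d in docs
        if all(d["meta"].get(key) == value for key, value in filters.items())
    ]
    candidates.sort(key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    return [d["id"] for d in candidates[:top_k]]


docs = [
    {"id": "a", "vec": [1.0, 0.0], "meta": {"team": "sales"}},
    {"id": "b", "vec": [0.9, 0.1], "meta": {"team": "eng"}},
    {"id": "c", "vec": [0.0, 1.0], "meta": {"team": "eng"}},
]
print(prefiltered_search([1.0, 0.0], docs, {"team": "eng"}))
```

Document "a" is the closest match overall, but it never reaches the similarity step because its metadata doesn’t match the filter.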

2. Caching

Cache at multiple levels:

```python
class CachedRAG:
    def __init__(self, rag_system):
        self.rag = rag_system
        self.query_cache = {}      # Query -> Answer
        self.retrieval_cache = {}  # Query -> Chunks (for the retrieval layer)

    def answer(self, query):
        # Exact match cache
        if query in self.query_cache:
            return self.query_cache[query]

        # Semantic similarity cache (find_similar_cached_queries is not shown;
        # it returns cached queries whose embeddings are above the threshold)
        similar_queries = self.find_similar_cached_queries(query, threshold=0.95)
        if similar_queries:
            return self.query_cache[similar_queries[0]]

        # Generate new answer
        answer = self.rag.answer(query)
        self.query_cache[query] = answer
        return answer
```
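`CachedRAG` leaves `find_similar_cached_queries` undefined. Here is a minimal sketch of the semantic lookup, assuming you pass in an `embed_fn` that maps text to a vector (in practice, the same embedding model used for retrieval). The class is hypothetical and uses a linear scan, which a real deployment would replace with a vector index. Keep the threshold high: two questions can be close in embedding space yet differ in a detail that changes the answer.

```python
import math


class SemanticQueryCache:
    """Returns a cached answer when a new query is close enough to an old one."""

    def __init__(self, embed_fn, threshold=0.95):
        self.embed_fn = embed_fn
        self.threshold = threshold
        self.entries = []  # list of (query_vector, answer)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def get(self, query):
        """Answer for the most similar cached query at or above threshold, else None."""
        vec = self.embed_fn(query)
        best_answer, best_sim = None, self.threshold
        for cached_vec, answer in self.entries:
            sim = self._cosine(vec, cached_vec)
            if sim >= best_sim:
                best_answer, best_sim = answer, sim
        return best_answer

    def set(self, query, answer):
        self.entries.append((self.embed_fn(query), answer))


# Toy embedder for illustration only: one dimension per keyword
def toy_embed(text):
    return [float("refund" in text), float("shipping" in text)]


cache = SemanticQueryCache(toy_embed)
cache.set("what is the refund policy?", "Refunds are issued within 30 days.")
print(cache.get("how do refunds work"))
print(cache.get("shipping times?"))
```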

3. Monitoring

Track what matters in production:

```python
from datetime import datetime

def log_rag_interaction(query, answer, retrieved_chunks, user_feedback=None):
    """Log for debugging and improvement."""
    # Assumes answer exposes .text and .latency_ms; analytics_db and alert
    # stand in for your storage and alerting
    metrics = {
        'timestamp': datetime.now(),
        'query': query,
        'num_chunks_retrieved': len(retrieved_chunks),
        'retrieval_scores': [c.score for c in retrieved_chunks],
        'answer_length': len(answer.text),
        'generation_latency': answer.latency_ms,
        'user_feedback': user_feedback,  # thumbs up/down
    }
    # Store for analysis
    analytics_db.insert(metrics)

    # Alert on anomalies: nothing retrieved, or a weak best match
    scores = metrics['retrieval_scores']
    if not scores or max(scores) < 0.5:
        alert("Low confidence retrieval", metrics)
```
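Logged records only pay off once you aggregate them. Here is a minimal sketch that turns a batch of records shaped like the `metrics` dict in `log_rag_interaction` into two health signals: the share of low-confidence retrievals and the thumbs-up rate. It assumes feedback is stored as the strings `"up"`/`"down"` (or `None` when the user gave none), which the logging code leaves unspecified; `summarize_logs` is a hypothetical name:

```python
def summarize_logs(records, low_score=0.5):
    """Aggregate logged interactions into simple health signals."""
    if not records:
        return {}
    # Low confidence: nothing retrieved, or the best retrieval score is weak
    low = sum(
        1 for r in records
        if not r["retrieval_scores"] or max(r["retrieval_scores"]) < low_score
    )
    rated = [r["user_feedback"] for r in records if r["user_feedback"] is not None]
    thumbs_up = sum(1 for f in rated if f == "up")
    return {
        "low_confidence_rate": low / len(records),
        "thumbs_up_rate": thumbs_up / len(rated) if rated else None,
    }


records = [
    {"retrieval_scores": [0.9, 0.4], "user_feedback": "up"},
    {"retrieval_scores": [0.3], "user_feedback": "down"},
    {"retrieval_scores": [], "user_feedback": None},
    {"retrieval_scores": [0.7], "user_feedback": "up"},
]
print(summarize_logs(records))
```

Tracking these two rates over time (per day, or per document collection) makes regressions visible without reading individual logs.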

4. Handling failure cases

```python
def robust_rag(query):
    # hybrid_search_with_reranking, fallback_search, generate_answer,
    # log_error and fallback_response stand in for your own pipeline functions
    try:
        # Try the optimal strategy
        chunks = hybrid_search_with_reranking(query)
        if not chunks or chunks[0].score < 0.3:
            # Fall back to a broader search
            chunks = fallback_search(query)
        if not chunks:
            # Admit when you don't know
            return {
                'answer': "I couldn't find relevant information to answer this question.",
                'confidence': 0.0,
                'suggestion': "Try rephrasing or asking about a different aspect."
            }
        answer = generate_answer(query, chunks)
        return {
            'answer': answer.text,
            'confidence': answer.confidence,
            'sources': [c.metadata for c in chunks]
        }
    except Exception as e:
        log_error(e)
        return fallback_response()
```

Putting It All Together

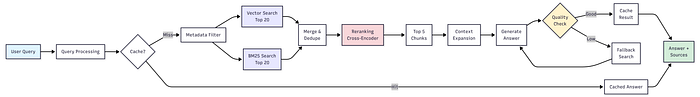

Here is the full pipeline in outline:

```python
from sentence_transformers import CrossEncoder

class ProductionRAG:
    # HybridChunker, VectorDB, BM25Index and QueryCache stand in for your own
    # components, as do the embed, merge_results, expand_context and generate helpers
    def __init__(self):
        self.chunker = HybridChunker()
        self.vector_db = VectorDB()
        self.bm25_index = BM25Index()
        self.reranker = CrossEncoder('cross-encoder/ms-marco-MiniLM-L-6-v2')
        self.cache = QueryCache()
        # Evaluation (RAGEvaluator) runs offline against a test set, not per request

    def ingest_document(self, document):
        """Process and index a document."""
        # Smart chunking
        chunks = self.chunker.chunk(document)
        # Embed and index
        embeddings = self.embed(chunks)
        self.vector_db.add(chunks, embeddings)
        self.bm25_index.add(chunks)
        return len(chunks)

    def answer(self, query, user_context=None):
        """Full RAG pipeline."""
        # Check cache
        if cached := self.cache.get(query):
            return cached

        # Hybrid retrieval (apply the same filters to BM25 if your index supports them)
        vector_results = self.vector_db.search(query, top_k=20, filters=user_context)
        bm25_results = self.bm25_index.search(query, top_k=20)
        combined = self.merge_results(vector_results, bm25_results)

        # Rerank with the cross-encoder and keep the top 5
        scores = self.reranker.predict([(query, c.text) for c in combined])
        ranked = sorted(zip(combined, scores), key=lambda pair: pair[1], reverse=True)
        top_chunks = [chunk for chunk, _ in ranked[:5]]

        # Expand context
        chunks_with_context = self.expand_context(top_chunks)

        # Generate answer
        answer = self.generate(query, chunks_with_context)

        # Cache and return
        self.cache.set(query, answer)
        return answer
```

Reality Check

After implementing these strategies, here is what I learned.

What worked best:

- Semantic chunking with overlap (a clear improvement over fixed-size chunks)

- Hybrid search (improved retrieval recall)

- Reranking (improved answer quality)

- Context expansion (eliminated most “incomplete answer” complaints)

What didn’t matter much:

- Fancier embedding models

- Extremely large k values

- Complex query expansion (simple rephrasing worked just as well)

The biggest wins came from:

- A good evaluation framework (you can’t improve what you don’t measure)

- Proper document preprocessing (garbage in, garbage out)

- Metadata filtering (speed + relevance)

Conclusion

Building production RAG is not about implementing every fancy technique. It’s about:

- Understanding your failure modes

- Measuring the right things

- Iterating on what actually improves the user experience

- Building robust systems that degrade gracefully

Start with semantic chunking and hybrid search. Add reranking if retrieval quality is your bottleneck. And before anything else, set up proper evaluation.

The difference between demo and production RAG is that production RAG is treated as a system that requires continuous measurement and iteration, not as a one-time implementation.

What challenges are you facing with your RAG system?
