Author(s): Shalabh Jain

Originally published on Towards AI.

Generative AI has been the talk of the town in recent years. This article is part of a series that provides a comprehensive overview of the key components used in generative AI RAG applications.

Let me first set the context on what a RAG application is.

RAG stands for retrieval-augmented generation. A RAG application is a type of AI system that combines information retrieval with large language models (LLMs) to provide more accurate, up-to-date, and reliable responses.

This solves fundamental problems with LLMs: they are trained on static data, they do not have access to private and proprietary documents, and they may hallucinate answers. A RAG application addresses these problems more cost-effectively than fine-tuning or re-training an LLM.

How the RAG app works

A RAG application consists of two different pipelines:

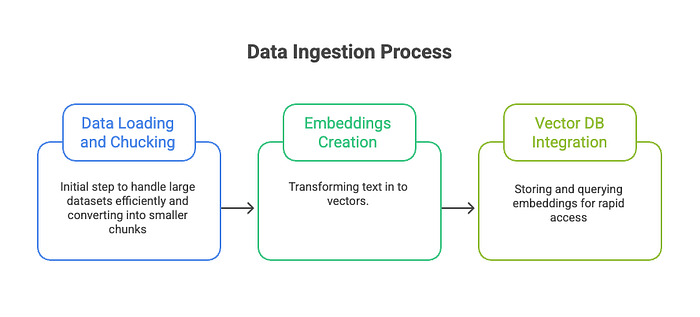

- Data ingestion

- Retrieval and augmented generation

In this article we will focus on the available embeddings and related tooling as well as the different scenarios of their use.

First of all, I will give some definitions –

- Embedding model – A machine learning model that converts data (such as text, images, audio, or code) into numerical vectors (called embeddings) in a high-dimensional space where semantic meaning is preserved.

- Embeddings – Lists of numbers that represent the meaning of a piece of data. Similar meanings produce vectors that are close together, and different meanings produce vectors that are far apart.

- Dimensionality of an embedding model – The number of numerical values (attributes) in the vector that represents a piece of data. For example, a 1536-dimensional embedding means that each input is represented by 1536 numbers. More dimensions mean greater capacity to capture meaning, but higher dimensions generally result in higher storage and compute costs.

- Sparse vectors vs. dense vectors – Sparse vectors contain mostly zero values, which makes them efficient for keyword matching (e.g. keyword search) because only the non-zero attributes are stored. Dense vectors contain mostly non-zero values, which capture complex semantic meaning but require more computation.
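To make these definitions concrete, here is a minimal sketch in plain Python. The vectors are made-up toy values (real embeddings come from a model and have hundreds or thousands of dimensions), and the helper names `cosine_similarity` and `sparse_dot` are mine, not part of any library.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def sparse_dot(a, b):
    """Dot product of two sparse vectors stored as {index: value} dicts."""
    # Iterate over the smaller dict; only indices present in both contribute.
    if len(a) > len(b):
        a, b = b, a
    return sum(value * b[index] for index, value in a.items() if index in b)

# Toy 4-dimensional dense embeddings (assumed values, not from a real model).
cat = [0.9, 0.8, 0.1, 0.0]
kitten = [0.85, 0.75, 0.2, 0.05]
invoice = [0.0, 0.1, 0.9, 0.8]

sim_close = cosine_similarity(cat, kitten)    # similar meaning
sim_far = cosine_similarity(cat, invoice)     # unrelated meaning

# Toy sparse vectors over a large vocabulary: only non-zero term weights are stored.
doc_terms = {17: 2.0, 4021: 1.0, 31337: 3.0}
query_terms = {4021: 1.0, 31337: 1.0}
keyword_score = sparse_dot(doc_terms, query_terms)

print(f"cat vs kitten:  {sim_close:.3f}")
print(f"cat vs invoice: {sim_far:.3f}")
print(f"keyword score:  {keyword_score}")
```

The dense vectors need every position filled in, while the sparse ones only list the few terms that actually occur, which is why keyword-style matching stays cheap even with a very large vocabulary.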

Embedding use cases –

- Search (where results are sorted by relevance to the query string)

- Clustering (where text strings are grouped based on similarity)

- Recommendations (where items containing a related text string are recommended)

- Anomaly detection (where outliers with low relatedness are identified)

- Diversity measure (where similarity distribution is analyzed)

- Classification (where text strings are classified by their most similar labels)
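Two of these use cases, search and classification, can be sketched in a few lines once embeddings exist. The example below assumes hypothetical 3-dimensional embeddings with made-up values; the document IDs, labels, and function names are illustrative only.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def rank_by_relevance(query_vec, doc_vecs):
    """Search: return (doc_id, score) pairs sorted from most to least similar."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

def classify(text_vec, label_vecs):
    """Classification: pick the label whose embedding is most similar to the text."""
    return max(label_vecs, key=lambda label: cosine_similarity(text_vec, label_vecs[label]))

# Hypothetical embeddings (assumed values, not produced by a real model).
documents = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.2, 0.9, 0.1],
    "api-reference": [0.0, 0.1, 0.9],
}
labels = {
    "billing": [1.0, 0.0, 0.0],
    "logistics": [0.0, 1.0, 0.0],
    "technical": [0.0, 0.0, 1.0],
}
query = [0.8, 0.3, 0.0]  # stand-in for the embedding of a refund question

ranking = rank_by_relevance(query, documents)
predicted_label = classify(query, labels)

for doc_id, score in ranking:
    print(f"{doc_id}: {score:.3f}")
print("label:", predicted_label)
```

Clustering, recommendations, and anomaly detection build on the same similarity scores; they differ mainly in what is done with the scores afterwards.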

Popular embedding models –

1. OpenAI models –

- text-embedding-3-small – Newer model, 1536 default dimensions. The dimension count can be reduced to balance cost when the highest performance is not required.

- text-embedding-3-large – Newer model, 3072 default dimensions (can be reduced), high semantic accuracy, higher cost per token.

- text-embedding-ada-002 – Older model, fixed 1536 dimensions, set for deprecation.

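All of these models are API based and priced per token. Cosine similarity is the recommended distance function. Because OpenAI embeddings are normalized to length 1, cosine similarity can be computed slightly faster using just a dot product, and it produces the same ranking as Euclidean distance.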
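For vectors normalized to unit length, cosine similarity equals the dot product, and ranking by cosine similarity matches ranking by Euclidean distance, since ‖a − b‖² = 2 − 2(a · b). The sketch below checks this on made-up vectors, assuming the embeddings have been normalized to length 1; the vector values and names are illustrative.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    """Scale a vector to unit (L2) length."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Toy vectors (assumed values), normalized the way unit-length embeddings would be.
query = normalize([0.3, 0.7, 0.2])
docs = {
    "a": normalize([0.2, 0.8, 0.1]),
    "b": normalize([0.9, 0.1, 0.3]),
    "c": normalize([0.4, 0.5, 0.6]),
}

# Higher is better for dot product and cosine; lower is better for distance.
by_dot = sorted(docs, key=lambda d: dot(query, docs[d]), reverse=True)
by_cosine = sorted(docs, key=lambda d: cosine_similarity(query, docs[d]), reverse=True)
by_euclidean = sorted(docs, key=lambda d: euclidean_distance(query, docs[d]))

print(by_dot, by_cosine, by_euclidean)
```

In practice this means that with unit-length embeddings, the choice among these three metrics in a vector store affects speed more than result order.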

2. Google Cloud models –

- gemini-embedding-001 – Newer model that builds on the capabilities of the previous models. Supports dense vectors with up to 3072 output dimensions and a maximum sequence length of 2048 tokens.

- text-embedding-005 – Specialized in English and code tasks. Supports up to 768 output dimensions and a maximum sequence length of 2048 tokens.

- text-multilingual-embedding-002 – Specialized in multilingual tasks. Supports up to 768 output dimensions and a maximum sequence length of 2048 tokens.

Using the output_dimensionality parameter, the user can control the size of the output embedding vector. Selecting a smaller output dimensionality can save storage space and increase computational efficiency for downstream applications, while incurring little loss in quality. Google models are also API based.
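To give a feel for what a smaller output vector means, here is a sketch of one common client-side way to shorten an embedding: keep the leading values and rescale to unit length. This is an illustration only. Whether a given API does exactly this internally is an assumption on my part, the approach only makes sense for models trained to support shortened embeddings, and `shorten_embedding` is a hypothetical helper name.

```python
import math

def shorten_embedding(vector, output_dim):
    """Keep the first `output_dim` values and rescale the result to unit length."""
    if not 0 < output_dim <= len(vector):
        raise ValueError("output_dim must be between 1 and len(vector)")
    truncated = vector[:output_dim]
    norm = math.sqrt(sum(x * x for x in truncated))
    if norm == 0:
        return truncated  # an all-zero vector cannot be rescaled
    return [x / norm for x in truncated]

# Toy 8-dimensional vector (assumed values, not from a real model).
full = [0.6, 0.4, 0.5, 0.3, 0.2, 0.2, 0.1, 0.2]
short = shorten_embedding(full, 4)

print(len(full), "->", len(short))
print([round(x, 3) for x in short])
```

Re-normalizing after truncation keeps similarity scores on a comparable scale, which matters when the vector store assumes unit-length vectors.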

3. Amazon Titan models –

- amazon.titan-embed-text-v2:0 – Default dimensions are 1024, but 512 and 256 are also supported. Maximum supported tokens is 8,192.

- Titan Embeddings G1 Text (amazon.titan-embed-text-v1) – Fixed 1536 dimensions. Maximum supported tokens is 8,192.

4. Sentence-Transformer (aka SBERT) models –

- all-MiniLM-L6-v2 – A compact all-round model designed for many use cases. 384 dimensions, 256 max tokens.

- all-mpnet-base-v2 – A larger all-round model for many use cases. 768 dimensions, 384 max tokens.
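The max-token limits listed above (for example, 256 tokens for all-MiniLM-L6-v2) matter during data ingestion: text beyond the limit is typically cut off, so longer documents are usually split into chunks first. Below is a rough sketch of such a splitter. It counts whitespace-separated words as a stand-in for tokens, which is an assumption; real tokenizers usually produce more tokens than words, so the budget should be set conservatively. The function name and parameters are hypothetical.

```python
def chunk_by_token_budget(text, max_tokens, overlap=0):
    """Split text into chunks of at most `max_tokens` words, with optional overlap.

    Words are used as an approximation of tokens (assumption, see lead-in).
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    words = text.split()
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_tokens]))
        if start + max_tokens >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

# Synthetic 600-word document for demonstration.
document = " ".join(f"w{i}" for i in range(600))
chunks = chunk_by_token_budget(document, max_tokens=256, overlap=32)

for i, chunk in enumerate(chunks):
    print(i, len(chunk.split()), "words")
```

The overlap keeps some shared context between neighbouring chunks, so a sentence that straddles a boundary is still represented in at least one embedding.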

Usage scenarios –

For organizations building enterprise-grade RAG applications where accuracy and semantic depth are critical, models like OpenAI’s text-embedding-3-large or Google’s gemini-embedding-001 are strong options. These models provide high-dimensional embeddings and excellent semantic fidelity, making them suitable for question answering, knowledge assistants, and compliance or policy-driven use cases. However, their higher cost means that they are best used when retrieval quality directly impacts business results.

In scenarios where cost efficiency and scalability are more important than maximum semantic precision, such as when indexing millions of documents, models like OpenAI’s text-embedding-3-small or Amazon Titan Embeddings present a workable compromise. These models offer adjustable dimensions, allowing teams to reduce storage and compute costs while maintaining good retrieval performance. They are particularly effective for large-scale document search and recommendation systems.
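A quick back-of-the-envelope calculation shows why dimensionality matters at this scale. The sketch below estimates raw vector storage only, assuming each value is stored as a 4-byte float32 and ignoring index overhead and metadata; the chunk count of 10 million is an arbitrary example, and `index_size_gib` is a hypothetical helper.

```python
def index_size_gib(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for the vectors alone, in GiB (no index overhead or metadata)."""
    return num_vectors * dimensions * bytes_per_value / 2**30

num_chunks = 10_000_000  # assumed corpus size after chunking

for dims in (3072, 1536, 1024, 512, 256):
    print(f"{dims:>5} dims: {index_size_gib(num_chunks, dims):7.1f} GiB")
```

Storage scales linearly with dimensions, so halving the output dimensionality halves the raw footprint. Whether the quality trade-off is acceptable still has to be measured on your own retrieval task.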

For teams already invested in a specific cloud ecosystem, it is generally recommended to use the native embedding model provided by that platform. On Google Cloud, Gemini and text-embedding models integrate seamlessly with Vertex AI Vector Search. On AWS, Titan embeddings work well with Bedrock and OpenSearch. This alignment simplifies deployment, monitoring, and scaling while reducing operational overhead.

In regulated environments, offline deployments, or cases where complete control over data and infrastructure is required, open-source Sentence-Transformer models such as all-mpnet-base-v2 or all-MiniLM-L6-v2 are excellent options. While these models may not always match the top-tier semantic performance of proprietary APIs, they provide robust results at zero per-token cost and are ideal for experimentation, internal tools, and privacy-sensitive workloads.

Overall, the choice of an embedding model should be based on retrieval quality requirements, cost constraints, cloud alignment, and operational control, rather than on dimensionality alone. Selecting the right embedding model is a fundamental decision that directly affects the effectiveness, reliability, and scalability of a RAG application.
