Can a fully sovereign open reasoning model match state-of-the-art systems when every part of its training pipeline is transparent? Researchers at Mohammed Bin Zayed University of Artificial Intelligence (MBZUAI) have released K2 Think V2, a fully sovereign reasoning model designed to test how far an open, fully documented, and reproducible stack can push long-horizon reasoning in math, code, and science. K2 Think V2 takes the 70-billion-parameter K2 V2 Instruct model as its base and applies a carefully engineered reinforcement learning recipe to turn it into a high-precision reasoning model whose weights and data are both fully open.

K2 V2: From Base Model to Reasoning Expert

K2 V2 is a dense decoder-only transformer with 80 layers, a hidden size of 8192, and 64 attention heads, using grouped query attention and rotary position embeddings. It is trained on approximately 12 trillion tokens drawn from the TxT360 corpus and related curated datasets covering web text, mathematics, code, multilingual data, and scientific literature.
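To see why grouped query attention matters for a model meant to run at very long context, here is a rough KV-cache size estimate using the dimensions above. The head dimension follows from 8192 / 64 = 128. The number of key/value heads is not stated in this article, so the value of 8 below is a hypothetical assumption, as is 2-byte (bf16) storage; the numbers are illustrative, not reported figures.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Bytes needed to cache keys and values for a single sequence.

    The leading factor of 2 covers keys plus values; every layer stores one
    (num_kv_heads x head_dim) vector per token for each of them.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


NUM_LAYERS = 80                            # from the article
HIDDEN_SIZE = 8192                         # from the article
NUM_QUERY_HEADS = 64                       # from the article
HEAD_DIM = HIDDEN_SIZE // NUM_QUERY_HEADS  # 128
NUM_KV_HEADS_GQA = 8                       # hypothetical: not stated in the article
CONTEXT = 512 * 1024                       # 512k-token mid-training context

GIB = 1024 ** 3
gqa = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS_GQA, HEAD_DIM, CONTEXT)
mha = kv_cache_bytes(NUM_LAYERS, NUM_QUERY_HEADS, HEAD_DIM, CONTEXT)
print(f"GQA (8 KV heads):  {gqa / GIB:.0f} GiB")   # 160 GiB
print(f"MHA (64 KV heads): {mha / GIB:.0f} GiB")   # 1280 GiB
```

Under these assumptions, sharing each key/value head across 8 query heads shrinks the per-sequence cache eightfold at 512k tokens, which is the kind of saving that makes long-context training and inference practical.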

Training proceeds in three phases. Pretraining runs on natural data with a context length of 8192 tokens to build robust general knowledge. Mid-training then extends the context to 512k tokens using TxT360 Midas, which blends long documents, synthetic thinking traces, and diverse reasoning behaviors while keeping at least 30 percent short-context data at every step. Finally, supervised fine-tuning on TxT360 3efforts instills instruction following and injects structured reasoning signals.
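The requirement to keep at least 30 percent short-context data at every step can be enforced when batches are built. The sketch below is a hypothetical sampler, not the TxT360 Midas pipeline: the pool structure, the batch size, and the strategy of reserving a fixed quota of short examples per batch are all assumptions made for illustration.

```python
import random


def mixed_batch(short_pool, long_pool, batch_size, min_short_pct=30, rng=None):
    """Draw one batch with at least `min_short_pct` percent short-context examples.

    A quota of short examples is reserved first; the remaining slots are filled
    from both pools, so the short-context share can only rise above the floor.
    Integer arithmetic avoids floating-point rounding in the quota.
    """
    rng = rng or random.Random()
    n_short = (min_short_pct * batch_size + 99) // 100  # ceil(pct * size / 100)
    short_idx = rng.sample(range(len(short_pool)), n_short)
    reserved = set(short_idx)

    # Remaining slots may come from either pool, excluding already reserved items.
    candidates = [("short", i) for i in range(len(short_pool)) if i not in reserved]
    candidates += [("long", i) for i in range(len(long_pool))]
    extra = rng.sample(candidates, batch_size - n_short)

    batch = [short_pool[i] for i in short_idx]
    batch += [short_pool[i] if src == "short" else long_pool[i] for src, i in extra]
    rng.shuffle(batch)  # avoid a fixed position for the reserved items
    return batch
```

Reserving the quota up front, rather than rejecting and resampling batches, guarantees the floor on every step while still letting the rest of the batch follow the natural mixture.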

Importantly, K2 V2 is not a typical base model. Its mid-training is explicitly optimized for long-context stability and for exposure to reasoning behaviors. This makes it a natural starting point for a later training stage that focuses solely on reasoning quality, which is exactly what K2 Think V2 does.

Fully Sovereign RLVR on Guru Dataset

K2 Think V2 is trained with a GRPO-style RLVR (reinforcement learning with verifiable rewards) recipe on top of K2 V2 Instruct. The team uses version 1.5 of the Guru dataset, which focuses on math, code, and STEM questions. Guru is built from licensed sources, has expanded STEM coverage, and is decontaminated against key evaluation benchmarks before use. This matters for the sovereignty claim, because both the base-model data and the RL data are curated and documented by the same institution.
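The article does not say how Guru is decontaminated. A common approach, shown here only as an illustrative sketch and not as Guru's actual procedure, is to drop any training question that shares a long word n-gram with a benchmark item. The default n-gram length of 13 and the simple lower-case alphanumeric tokenization are assumptions.

```python
import re


def ngrams(text: str, n: int) -> set:
    """Lower-cased word n-grams; punctuation is discarded by the tokenizer."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def decontaminate(train_items, benchmark_items, n=13):
    """Keep only training items that share no word n-gram with any benchmark item.

    Items shorter than n words yield no n-grams and are therefore never flagged,
    which is one reason real pipelines often combine several checks.
    """
    banned = set()
    for item in benchmark_items:
        banned |= ngrams(item, n)
    return [t for t in train_items if not (ngrams(t, n) & banned)]
```

Longer n-grams reduce false positives on common phrasing ("find the number of"), while shorter ones catch lightly paraphrased leaks; the right length is a tradeoff.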

The GRPO setup drops the usual KL and entropy auxiliary losses and uses asymmetric clipping of the policy ratio, with the upper clip set to 0.28. Rollouts are sampled at temperature 1.2 to increase diversity, and training runs fully on-policy with a global batch size of 256 and no micro-batching. This avoids off-policy updates, which are known to destabilize GRPO-style training.
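The objective described above can be sketched in plain Python. This is a simplified, illustrative version rather than the Reasoning360 implementation: only the upper clip of 0.28 comes from the article, while the lower clip of 0.2, the group-normalized advantage, and token-level averaging are assumptions borrowed from common GRPO variants. As described, there is no KL penalty and no entropy bonus.

```python
import math
from statistics import mean, pstdev


def group_advantages(rewards, eps=1e-6):
    """Normalize each response's verifiable reward against its own sampling group."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(ratio, advantage, eps_low=0.2, eps_high=0.28):
    """PPO-style pessimistic surrogate with asymmetric ('clip-higher') bounds."""
    clipped = min(max(ratio, 1.0 - eps_low), 1.0 + eps_high)
    return min(ratio * advantage, clipped * advantage)


def grpo_loss(logp_new, logp_old, rewards, eps_low=0.2, eps_high=0.28):
    """logp_new / logp_old: one list of per-token log-probs per sampled response.

    Returns the negated mean surrogate over all tokens in the group.
    No KL or entropy term is added, matching the setup described above.
    """
    advs = group_advantages(rewards)
    terms = []
    for new_seq, old_seq, adv in zip(logp_new, logp_old, advs):
        for lp_new, lp_old in zip(new_seq, old_seq):
            ratio = math.exp(lp_new - lp_old)  # importance ratio pi_new / pi_old
            terms.append(clipped_surrogate(ratio, adv, eps_low, eps_high))
    return -sum(terms) / len(terms)
```

When updates are strictly on-policy, `logp_new` equals `logp_old` for the first gradient step, so every ratio is 1 and the loss reduces to the negated mean advantage per token; the clip bounds only come into play when the ratio drifts away from 1. Raising the upper bound above the lower one lets low-probability tokens with positive advantage grow more before being clipped.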

RLVR itself runs in two stages. In the first stage, responses are capped at 32k tokens and training runs for roughly 200 steps. In the second stage, the maximum response length rises to 64k tokens and training continues for about 50 more steps with the same hyperparameters. This schedule deliberately exploits the long-context capability inherited from K2 V2, so the model can practice full-length reasoning trajectories rather than only short solutions.
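The two-stage schedule amounts to a step-dependent cap on generation length. A minimal sketch, assuming 32k and 64k mean 32 × 1024 and 64 × 1024 tokens and using the approximate step counts from the text; `RLStage` and `response_cap` are hypothetical names. All other hyperparameters stay fixed across stages, so only the cap changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RLStage:
    max_response_tokens: int
    num_steps: int


# Approximate values from the article; the exact token caps are an assumption.
STAGES = (
    RLStage(max_response_tokens=32 * 1024, num_steps=200),
    RLStage(max_response_tokens=64 * 1024, num_steps=50),
)


def response_cap(step: int, stages=STAGES) -> int:
    """Return the maximum response length in effect at a global RL step."""
    boundary = 0
    for stage in stages:
        boundary += stage.num_steps
        if step < boundary:
            return stage.max_response_tokens
    raise ValueError(f"step {step} is past the end of the schedule ({boundary} steps)")
```

Starting with the shorter cap keeps early rollouts cheap while the policy is still changing quickly; the longer cap is then spent on a policy that already produces useful reasoning.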

Benchmark Profile

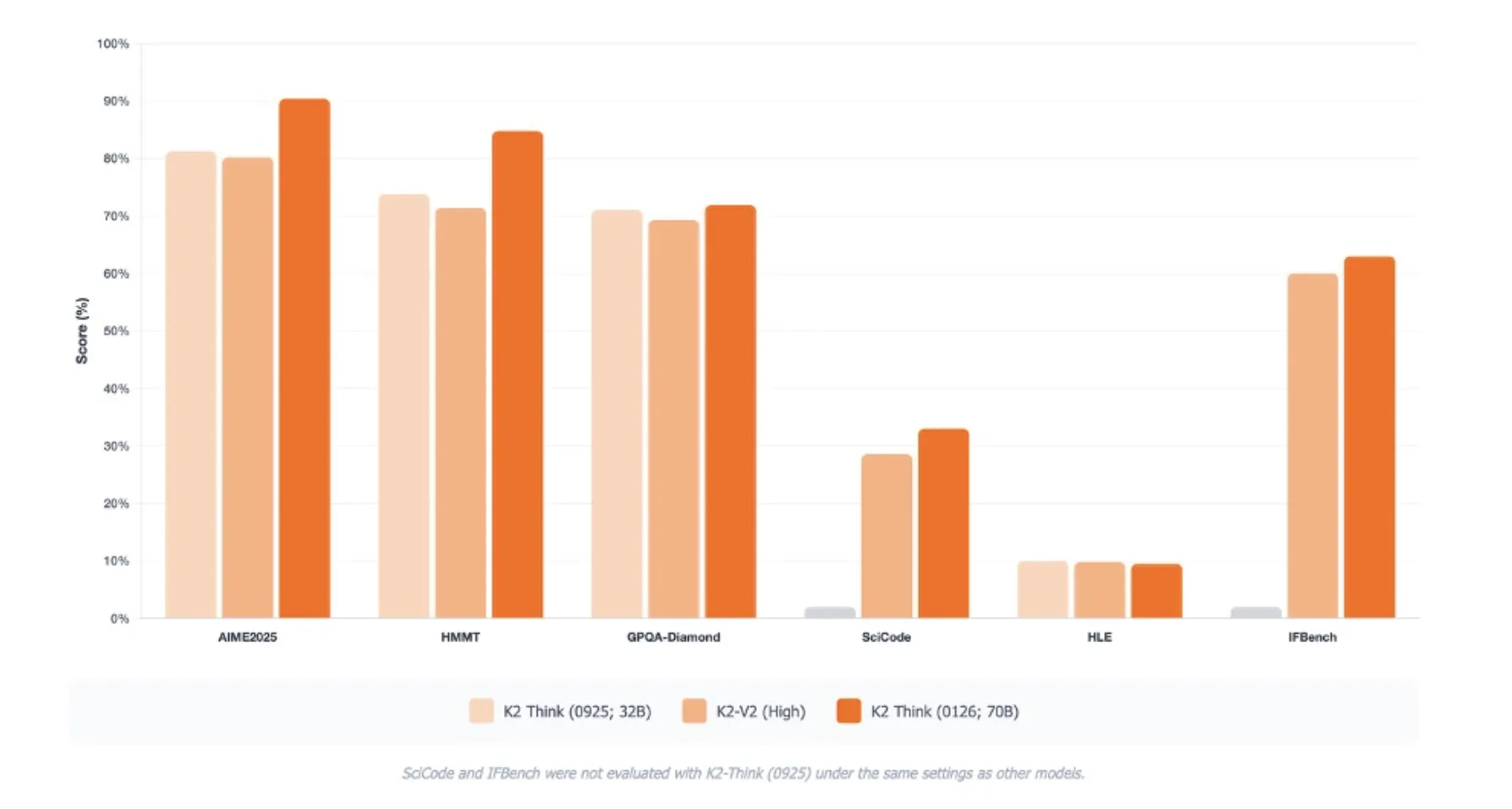

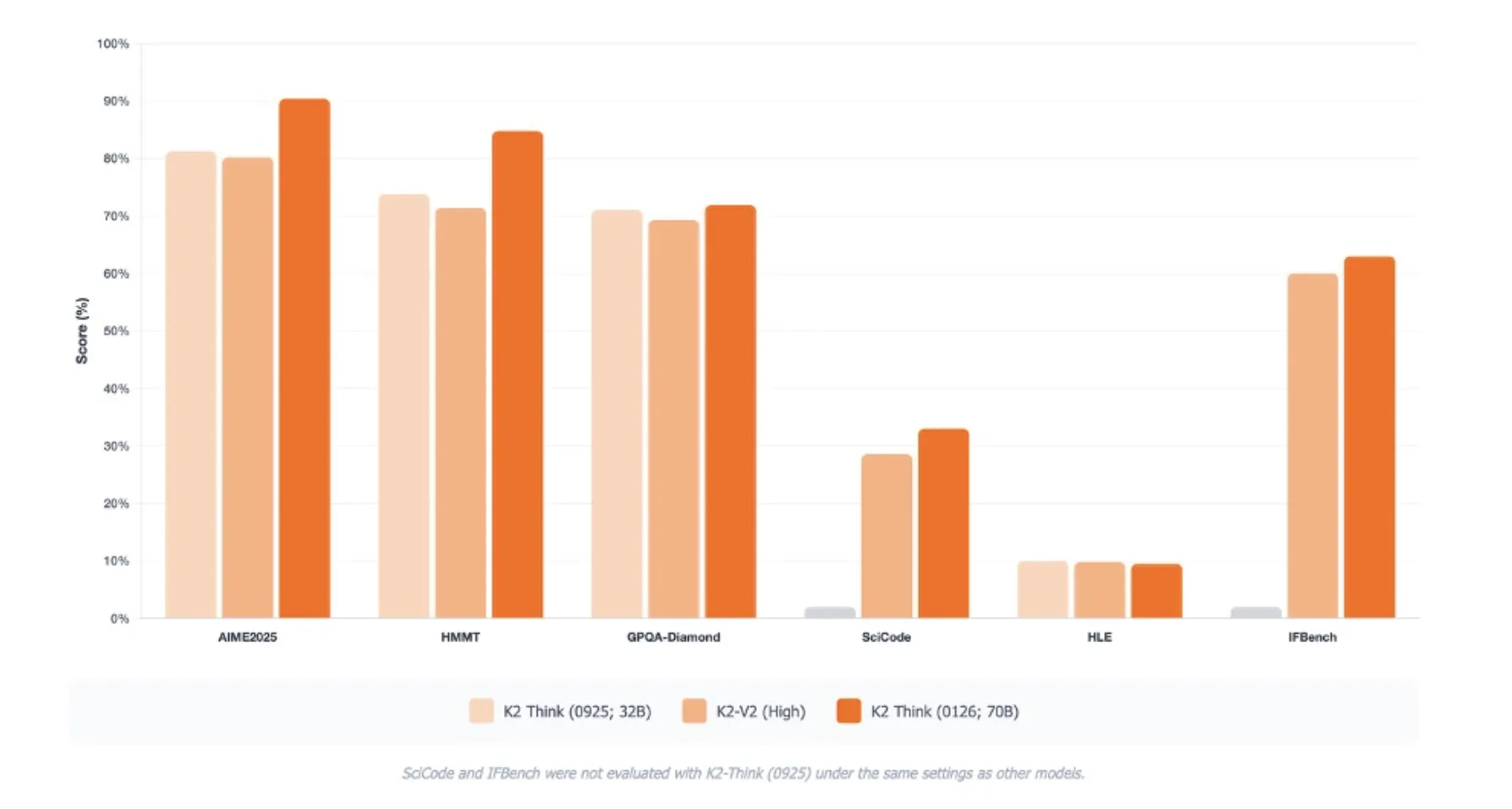

K2 Think V2 targets reasoning benchmarks rather than pure knowledge benchmarks. On AIME 2025 it reaches a pass@1 of 90.42, and on HMMT 2025 it scores 84.79. On GPQA Diamond, a graduate-level science benchmark, it reaches 72.98. It records 33.00 on SciCode and 9.5 on Humanity's Last Exam under the reported benchmark settings.

These scores are averaged over 16 runs and are directly comparable only under the same evaluation protocol. The MBZUAI team also highlights improvements on IFBench and the Artificial Analysis evaluation suite, with particular gains in hallucination rate and long-context reasoning compared with previous K2 Think releases.
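Averaging over 16 runs means each benchmark is scored 16 times and the per-run pass@1 accuracies are averaged. A minimal sketch of that bookkeeping, assuming a binary correct/incorrect grade per problem per run; the grades in the example are made-up toy values, not K2 Think V2 outputs.

```python
from statistics import mean


def avg_pass_at_1(grades):
    """grades[run][problem] is True if that run solved that problem.

    Each run yields a pass@1 accuracy (one attempt per problem); the reported
    score is the mean of those per-run accuracies, expressed in percent.
    """
    per_run = [sum(run) / len(run) for run in grades]
    return 100 * mean(per_run)


# Toy example: 16 runs over 4 problems; every run solves the first three,
# and only runs 0-7 also solve the fourth.
toy = [[True, True, True, r < 8] for r in range(16)]
print(f"{avg_pass_at_1(toy):.2f}")  # 87.50
```

Averaging across runs smooths out sampling noise from temperature-based decoding, which is why a score like 90.42 can fall between the values any single run could produce.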

Safety and Openness

The research team reports a Safety-4 style analysis that aggregates four risk surfaces. Content and public safety, authenticity and credibility, and social alignment all reach macro-averaged risk levels in the low range. Data and infrastructure risk remains high and is flagged as critical, reflecting concerns about the handling of sensitive personal information rather than general model behavior. The team notes that, despite these results, K2 Think V2 still shares the common limitations of large language models. On the Artificial Analysis Openness Index, K2 Think V2 ranks at the top alongside K2 V2 and Olmo 3.

Key Takeaways

- K2 Think V2 is a fully sovereign 70B reasoning model: Built on K2 V2 Instruct, with open weights, open data recipes, detailed training logs, and the full RL pipeline released through Reasoning360.

- Base model optimized for long context and reasoning before RL: K2 V2 is a dense decoder-only transformer trained on approximately 12T tokens, with mid-training extending the context length to 512K tokens, followed by TxT360 3efforts SFT for structured reasoning.

- Reasoning trained with GRPO-based RLVR on the Guru dataset: The recipe uses a two-stage, on-policy GRPO setup on Guru v1.5 with asymmetric clipping, temperature 1.2, and a response cap of 32K and then 64K tokens to learn long solution chains.

- Competitive results on hard reasoning benchmarks: K2 Think V2 reports strong pass@1 scores such as 90.42 on AIME 2025, 84.79 on HMMT 2025, and 72.98 on GPQA Diamond, establishing it as a high-precision open reasoning model for math, code, and science.

Check out the paper, model weights, repo, and technical details.

Max is an AI analyst at Silicon Valley-based MarkTechPost, actively shaping the future of technology. He teaches robotics at Brainvine, fights spam with ComplyMail, and leverages AI daily to translate complex technological advancements into clear, understandable insights.