Robiont, the embedded AI unit within Ant Group, has open sourced Lingbot-World, a large-scale world model that transforms video generation into an interactive simulator for embodied agents, autonomous driving, and games. The system is designed to present controllable environments with high visual fidelity, strong dynamics, and long temporal horizons, while remaining responsive enough for real-time control.

From text to video From text to the world

Most text to video models generate short clips that look realistic but behave like passive movies. They do not model how actions change the environment over time. Lingbot-World is designed as an action conditioned world model. It learns the transition dynamics of the virtual world, so that keyboard and mouse inputs, combined with camera motion, drive the evolution of future frames.

Formally, the model learns a conditional distribution of future video tokens given previous frames, language cues, and individual actions. At training time, it predicts sequences up to about 60 seconds. At inference time, it can automatically roll out coherent video streams that extend for approximately 10 minutes while keeping the scene structure stable.

From data engines, web video to interactive trajectories

A core design in the Lingbot-world is an integrated data engine. It provides rich, aligned observations of how the world changes, covering a variety of realistic scenarios.

The data acquisition pipeline combines 3 sources:

- Large-scale web videos of humans, animals, and vehicles, viewed from both first-person and third-person

- Game data, where RGB frames are strictly coupled with user controls such as W, A, S, D and camera parameters

- Synthetic trajectories rendered in Unreal Engine, where clean frames, camera internals and externals, and object layout are all known

After collection, a profiling step standardizes this heterogeneous corpus. It filters for resolution and duration, splits the video into clips and estimates missing camera parameters using geometry and pose models. A vision language model scores clips for quality, motion magnitude, and scene type, then selects a curated subset.

On top of this, a hierarchical captioning module creates 3 levels of text supervision:

- Descriptive captions for complete trajectories including camera motion

- Visual static captions that describe the environment layout without motion

- Dense temporal captions for short time windows that focus on local dynamics

This separation allows the model to separate static structure from motion patterns, which is important for long horizon stability.

Architecture, MOE Video Backbone and Action Conditioning

Lingbot-world starts from Wan2.2, which is 14B parameter image to video propagation transformer. This backbone already captures robust open domain video priors. The Robiant team expands this into a mix of experts DiT with 2 experts. Each expert has about 14B parameters, so the total parameter count is 28B, but only 1 expert is active at each denoising step. This keeps the estimated cost the same as the compact 14B model while expanding capacity.

One course extends the training sequence from 5 seconds to 60 seconds. The schedule increases the ratio of high noise timesteps, which stabilizes the global layout over long contexts and reduces mode collapse for long rollouts.

To make the model interactive, actions are injected directly into the transformer block. Camera rotation is encoded with Plucker embeddings. Keyboard actions are represented as multi hot vectors on keys such as W, A, S, D. These encodings are fused and passed through the adaptive layer normalization module, which modulates the hidden states in the DIT. Only the action adapter layers are fine-tuned, the main video backbone remains frozen, so the model retains visual quality from pre-training while learning action responses from small interactive datasets.

Both image-to-video and video-to-video continuity functions are used in training. Given a single image, the model can synthesize future frames. When viewing a partial clip, it can extend the sequence. This results in an internal transition function that can start from arbitrary time points.

Lingbot World Distillation for Fast, Real-Time Use

The intermediate-trained model, Lingbot-World Base, still relies on multi-stage propagation and full temporal attention, which is expensive for real-time interactions. The Robient team has introduced lingbot-world-fast as an accelerated version.

The fast model is initialized with a high noise expert and full temporal attention is replaced by block causal attention. Within each temporal block, attention is bidirectional. In all blocks, it is causal. This design supports key value caching, so the model can autoregressively stream frames with low overhead.

Distillation uses a diffusion force strategy. The student is trained on a small set of target timesteps, including timestep 0, so it sees both noisy and clean latents. Distribution matching distillation is combined with a regressive differential head. The adverse loss only updates the discriminator. The student network is updated with the distillation loss, which stabilizes the training while preserving action adherence and temporal coherence.

In experiments, Lingbot World Fast reaches 16 frames per second when processing 480p video on a system with 1 GPU node, and, maintains end-to-end interaction latency less than 1 second for real-time control.

Emergent memory and long-term behavior

One of the most interesting features of Lingbot-World is random memory. The model maintains global consistency without explicit 3D representations such as Gaussian splatting. When the camera moves away from a landmark such as Stonehenge and returns after about 60 seconds, the structure reappears with consistent geometry. When a car leaves the frame and later re-enters, it appears to be in a physically reliable location, not frozen or reset.

The model can also handle extremely long sequences. The research team shows coherent video generation that lasts up to 10 minutes with a stable layout and narrative structure.)

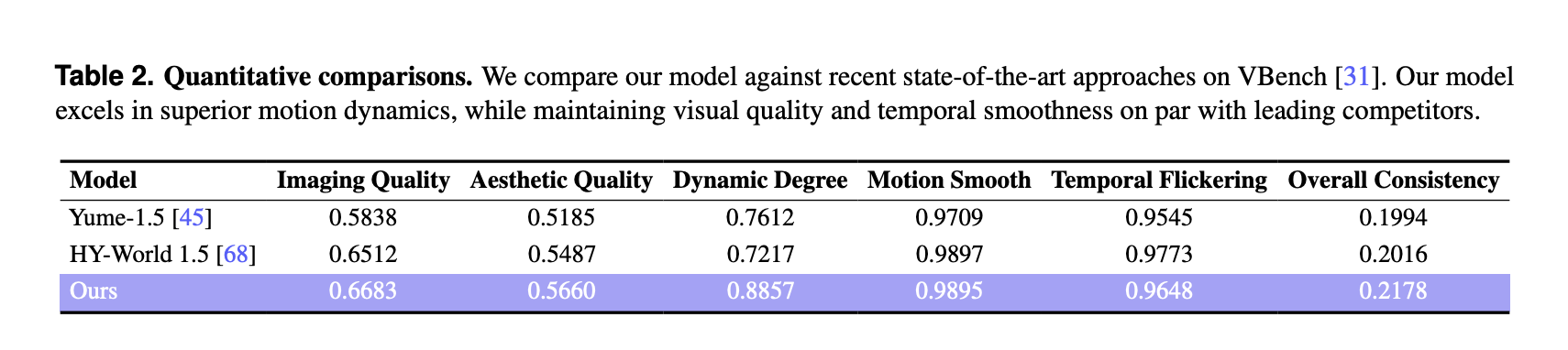

VBench results and comparison with other world models

For quantitative evaluation, the research team used VBench on a curated set of 100 generated videos, each of which was longer than 30 seconds. Lingbot-World is compared with 2 recent world models, Yume-1.5 and HY-World-1.5.

On WeBench, Lingbot World reports:

These scores exceed both baselines for imaging quality, aesthetic quality, and dynamic degree. The dynamic degree margin is larger, 0.8857 compared to 0.7612 and 0.7217, which indicates richer scene transitions and more complex motion that reacts to user input. Motion smoothness and temporal flicker are equivalent to the best baseline, and Method 3 achieves the best overall stability metric among the models.

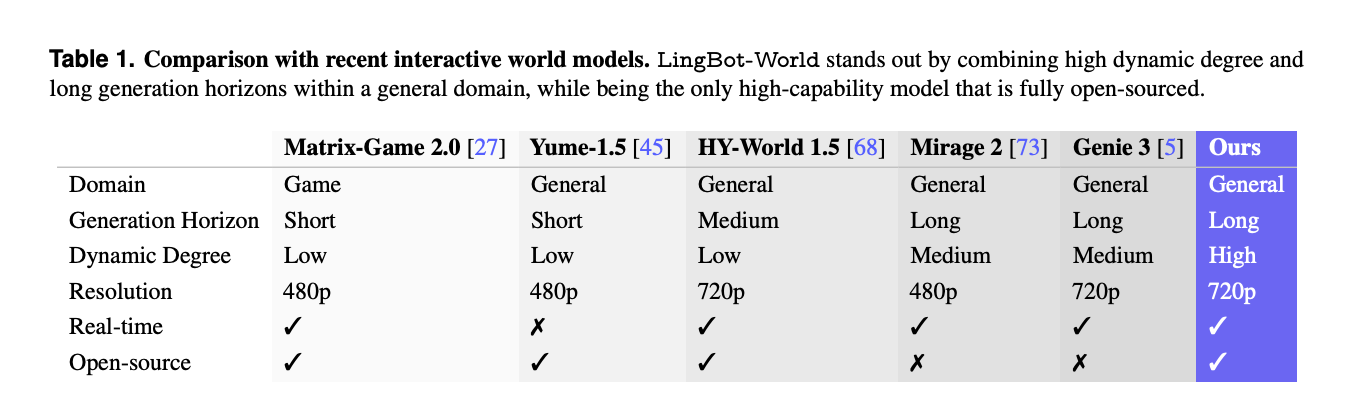

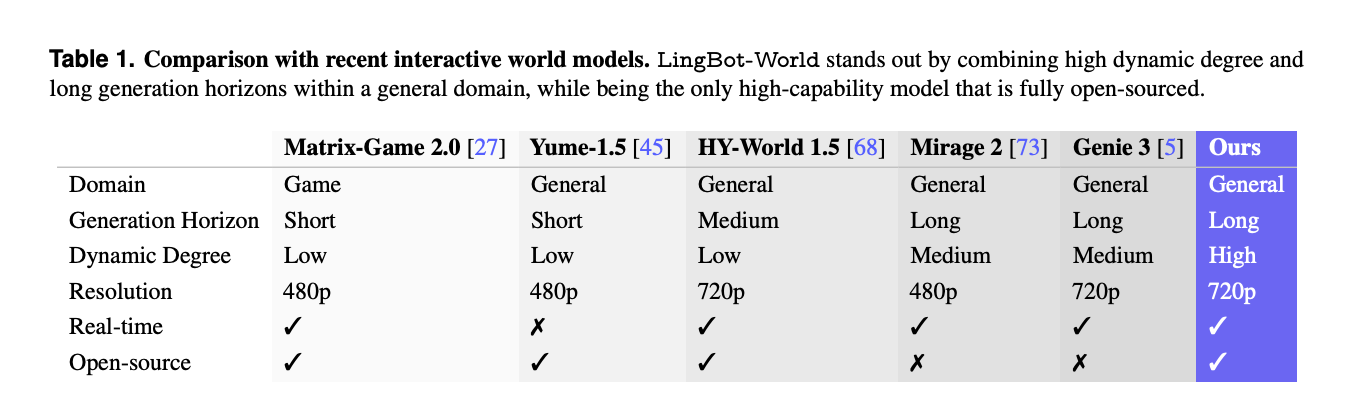

A separate comparison with other interactive systems such as Matrix-Game-2.0, Mirage-2 and Genie-3 highlights that Lingbot-World is one of the few completely open-source world models that combines general domain coverage, long generation horizon, high dynamic degree, 720p resolution and real-time capabilities.

Applications, Speedy Worlds, Agents and 3D Reconstruction

Beyond video synthesis, Lingbot-World is positioned as a testbed for embodied AI. The model supports prompt world events, where text instructions change weather, lighting, style over time, or inject local events such as fireworks or moving animals while preserving spatial structure.

It can also train downstream action agents, for example with small vision language action models like Qwen3-VL-2B that predict control policies from images. Because the generated video streams are geometrically consistent, they can be used as input to 3D reconstruction pipelines that generate static point clouds for indoor, outdoor, and synthetic scenes.

key takeaways

- Lingbot-World is an action conditioned world model that extends the text-to-video to text-to-world simulation, where keyboard actions and camera movements directly control the long-horizon video rollout for approximately 10 minutes.

- The system is trained on an integrated data engine that combines web video, action labels and game logs with Unreal Engine trajectories, as well as hierarchical narrative, static scenes and dense temporal captions to distinguish layout from motion.

- The core backbone is a 28B parameter mix of experts’ diffusion transformers, built from Wan2.2, with 2 experts of 14B each and action adapters that are fine tuned while the visual backbone remains frozen.

- Lingbot-world-fast is a distilled variant that uses block causal attention, diffusion force, and distribution matching distillation to achieve approximately 16 frames per second at 480p on 1 GPU node, reporting less than 1 second latency for interactive use.

- On VBench with 100 generated videos longer than 30 seconds, LingBot-World reports the highest imaging quality, aesthetic quality and dynamic degree among Yume-1.5 and HY-World-1.5, and the model shows stable long-range structure suitable for emergent memory and embodied agents and 3D reconstruction.

check it out paper, repo, project page And model weight. Also, feel free to follow us Twitter And don’t forget to join us 100k+ ml subreddit and subscribe our newsletter. wait! Are you on Telegram? Now you can also connect with us on Telegram.