Question:

MoE models have far more parameters than Transformers, yet they can run faster at inference time. how is that possible?

Difference Between Transformers and Mixing Experts (MOE)

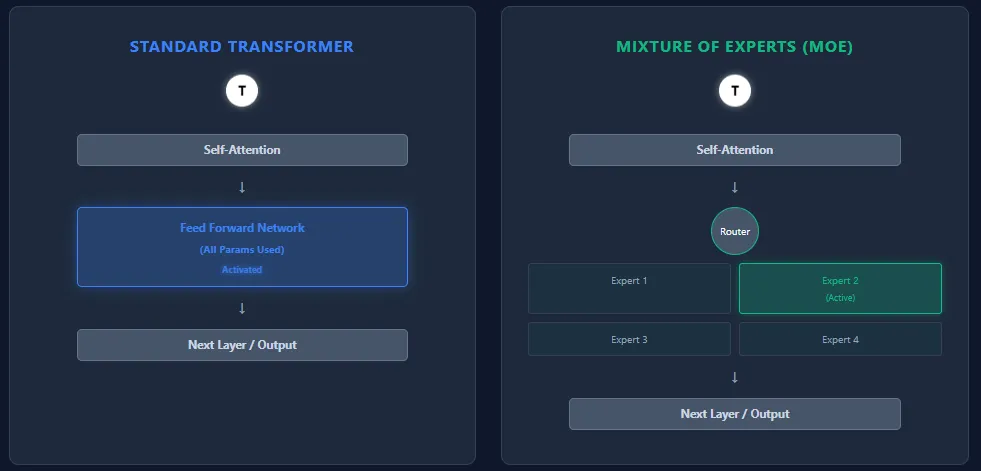

Transformer and Mixture of Experts (MOE) models share the same backbone architecture – self-attention layers followed by feed-forward layers – but they differ fundamentally in the way they use parameters and computations.

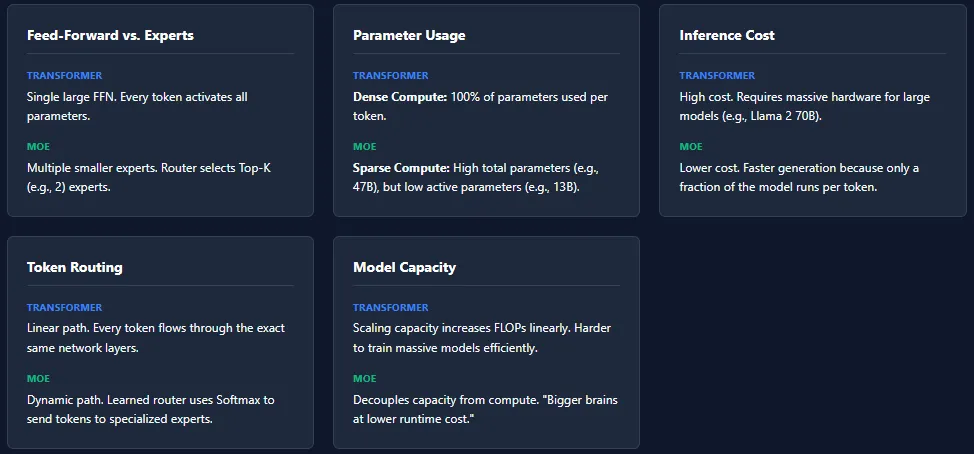

Feed-forward networks vs experts

- Transformer: Each block consists of a large feed-forward network (FFN). Each token passes through this FFN, which activates all the parameters during inference.

- MOE: Replaces the FFN with several smaller feed-forward networks, called specialists. A routing network selects only a few experts (top-k) per token, so only a small fraction of the total parameters are active.

parameter usage

- Transformer: All parameters from all layers are used for each token → dense calculation.

- MOE: Has more total parameters, but activates only a small part per token → Sparse computation. Example: Mixtral 8×7B has 46.7B total parameters, but only uses ~13B per token.

estimate cost

- Transformer: High estimation cost due to full parameter activation. Scaling into models like GPT-4 or Llama 2 70B requires powerful hardware.

- MOE: Low estimation cost because only K experts are active per layer. This makes MoE models faster and cheaper to run, especially at large scale.

token routing

- Transformer: No routing. Each token follows exactly the same path through all layers.

- MOE: A learned router assigns tokens to experts based on the softmax score. Different tokens select different experts. Different layers can activate different experts thereby increasing expertise and model capability.

model capacity

- Transformer: To increase capacity, the only options are to add more layers or widen the FFN – both increase FLOPs drastically.

- MOE: Aggregate parameters can scale massively without increasing per-token calculations. This enables “bigger brains at lower runtime costs”.

While MoE architectures offer enormous potential with low estimation cost, they present several training challenges. The most common problem is expert collapse, where the router repeatedly selects the same expert, leaving others less trained.

Load imbalance is another challenge – some experts may receive far more tokens than others, leading to a disparity in learning. To address this, MoE models rely on techniques such as noise injection, top-k masking, and expert capacity thresholding in routing.

These mechanisms ensure that all experts remain active and balanced, but they also make MoE systems more complex to train than standard transformers.

I am a Civil Engineering graduate (2022) from Jamia Millia Islamia, New Delhi, and I have a keen interest in Data Science, especially Neural Networks and their application in various fields.