Qwen3-Max-Thinking is Alibaba’s new major reasoning model. It not only measures parameters, but it also changes the way inference is done, with explicit control over the depth of thinking and built-in tools for search, memory, and code execution.

Model scale, data, and deployment

Qwen3-Max-Thinking is a trillion-parameter MoE flagship LLM pre-trained on 36T tokens and built on the Qwen3 family as a top-level reasoning model. The model targets not just casual chat, but also long-term logic and code. It runs with a context window of 260k tokens, supporting repository scale code, long technical reports, and multi document analysis within a single prompt.

Qwen3-Max-Thinking is a closed model served through Qwen-Chat and Alibaba Cloud Model Studio with OpenAI compatible HTTP API. The same endpoint can be called in a cloud style tool schema, so existing anthropic or cloud code flows can be swapped into quen3-max-thinking with minimal changes. There is no public load, so usage is API based, which matches its status

Experience with smart test time scaling and cumulative reasoning

Most large language models improve logic by simple test time scaling, for example best of N sampling with many parallel chains of consideration. This approach increases quality but cost increases almost linearly with the number of samples. Quen3-Max-Thinking introduces an experience cumulative, multi-round test time scaling strategy.

Instead of simply taking more samples in parallel, the model iterates within the same conversation, reusing intermediate logic as the structured experience progresses. After each round, it makes useful partial conclusions, then focuses subsequent calculations on the unresolved parts of the question. This process is controlled by a clear thinking budget that developers can adjust through API parameters like enable_thinking and additional configuration fields.

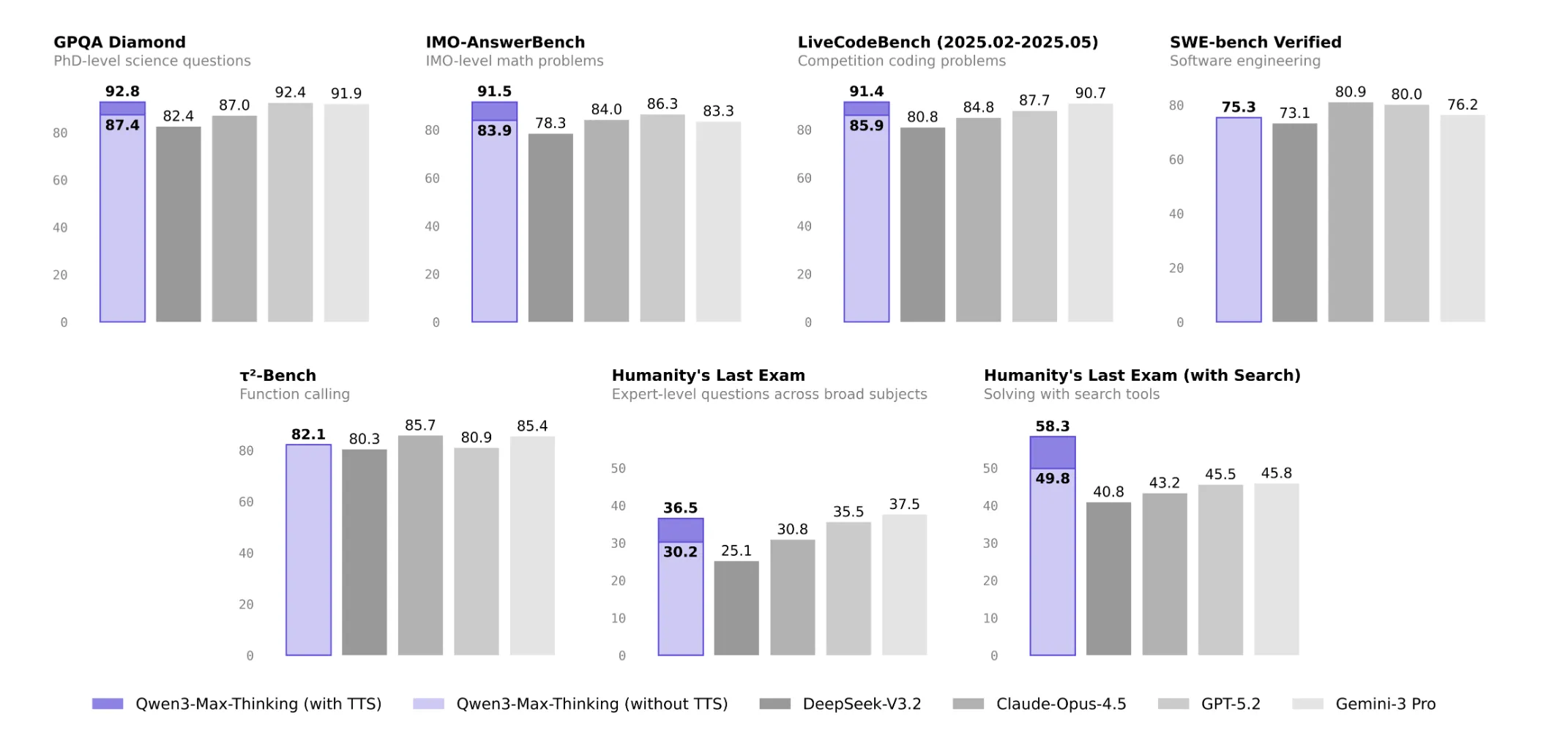

The reported effect is that accuracy increases without a proportional increase in token count. For example, Quen’s own ablation shows that GPQA Diamond has increased from approximately 90 level accuracy to approximately 92.8, and LiveCodeBench v6 has increased from approximately 88.0 to 91.4 under the experience accumulation strategy on the same token budget. This is important because it means that higher logic quality can be driven not just by more samples, but by more efficient scheduling of computations.

Native Agent Stack with Adaptive Tool Usage

Quen3-Max-Thinking integrates three tools as first-class capabilities: search, memory, and a code interpreter. Search connects to web retrieval so that the model can fetch new pages, extract content and base its answers on it. Memory stores user or session specific state, supporting personalized logic over longer workflows. The code interpreter executes Python, allowing numerical validation, data transformation, and program synthesis with runtime checking.

The model uses Adaptive Tool Use to decide when to apply these tools during a conversation. Tool calls are associated with internal thinking segments rather than being arranged by an external agent. This design obviates the need for separate routers or planners and reduces hallucinations, as the model can explicitly obtain missing information or verify calculations rather than guessing.

The capabilities of the tool are also benchmarked. On the Tau² bench, which measures function calling and tool orchestration, the Qwen3-Max-Thinking reported a score of 82.1, which is comparable with other Frontier models in this category.

Benchmark Profile in Knowledge, Reasoning and Discovery

On 19 public benchmarks, Quen3-Max-Thinking is at or near the same level as GPT 5.2 Thinking, Cloud Opus 4.5, and Gemini 3 Pro. For knowledge tasks, reported scores include 85.7 on MMLU-Pro, 92.8 on MMLU-Redux, and 93.7 on C-Eval, where Quon leads the group on the Chinese language assessment.

For hard reasoning, it records 87.4 on GPQA, 98.0 on HMMT on 25 February, 94.7 on HMMT on 25 November and 83.9 on IMOAnswerBench, placing it in the top tier of current math and science models. On coding and software engineering it reaches 85.9 on LiveCodeBench v6 and 75.3 on SWE Verified.

The base HLE configuration has a Qween3-Max-Thinking score of 30.2, down from the Gemini 3 Pro at 37.5, and a GPT 5.2 Thinking score of 35.5. In a tool enabled HLE setup, the official comparison table which includes web search integration shows the Qween3-Max-Thinking at 49.8, the GPT 5.2 ahead of the Thinking at 45.5 and the Gemini 3 Pro at 45.8. In my most aggressive experience with the tool on HLE with the cumulative test time scaling configuration, quen3-max-thinking reaches 58.3 while GPT 5.2 thinking remains at 45.5, although this higher number is for the heavy inference mode compared to the standard comparison table.

key takeaways

- Quen3-Max-Thinking is a closed, API-only flagship reasoning model from Alibaba, built on a more than 1 trillion parameter backbone trained on approximately 36 trillion tokens with a 262144 token context window.

- The model introduces experience cumulative testing time scaling, where it reuses intermediate logic across multiple rounds, improving on benchmarks like GPQA Diamond and LiveCodeBench v6 on the same token budget.

- Qwen3-Max-Thinking integrates search, memory, and a code interpreter as native tools and utilizes Adaptive Tool Use, so the model itself decides when to browse, recall status, or execute Python during a conversation.

- On public benchmarks it reports competitive scores with GPT 5.2 ThinkCentre, Cloud Opus 4.5 and Gemini 3 Pro, including strong results on MMLU Pro, GPQA, HMMT, IMOAnswerBench, LiveCodeBench v6, SWE Bench Verified and Tau² Bench.

check it out API And technical details. Also, feel free to follow us Twitter And don’t forget to join us 100k+ ml subreddit and subscribe our newsletter. wait! Are you on Telegram? Now you can also connect with us on Telegram.

Michael Sutter is a data science professional and holds a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michael excels in transforming complex datasets into actionable insights.