Alibaba Tongyi Lab has released MAI-UI, a family of foundation GUI agents. It natively integrates MCP tool use, agent-user interaction, device-cloud collaboration, and online RL, establishing state-of-the-art results in general GUI grounding and mobile GUI navigation and surpassing Gemini-2.5-Pro, Seed1.8, and UI-TARS-2 on AndroidWorld. The system targets three specific gaps that earlier GUI agents often overlook: native agent-user interaction, MCP tool integration, and a device-cloud collaboration architecture that keeps privacy-sensitive work on the device while still using the larger cloud model when needed.

What is MAI-UI?

MAI-UI is a family of multimodal GUI agents built on Qwen3 VL, with model sizes of 2B, 8B, 32B, and 235B A22B. The models take natural language instructions and UI screenshots as input, then output structured actions that are executed in a live Android environment.

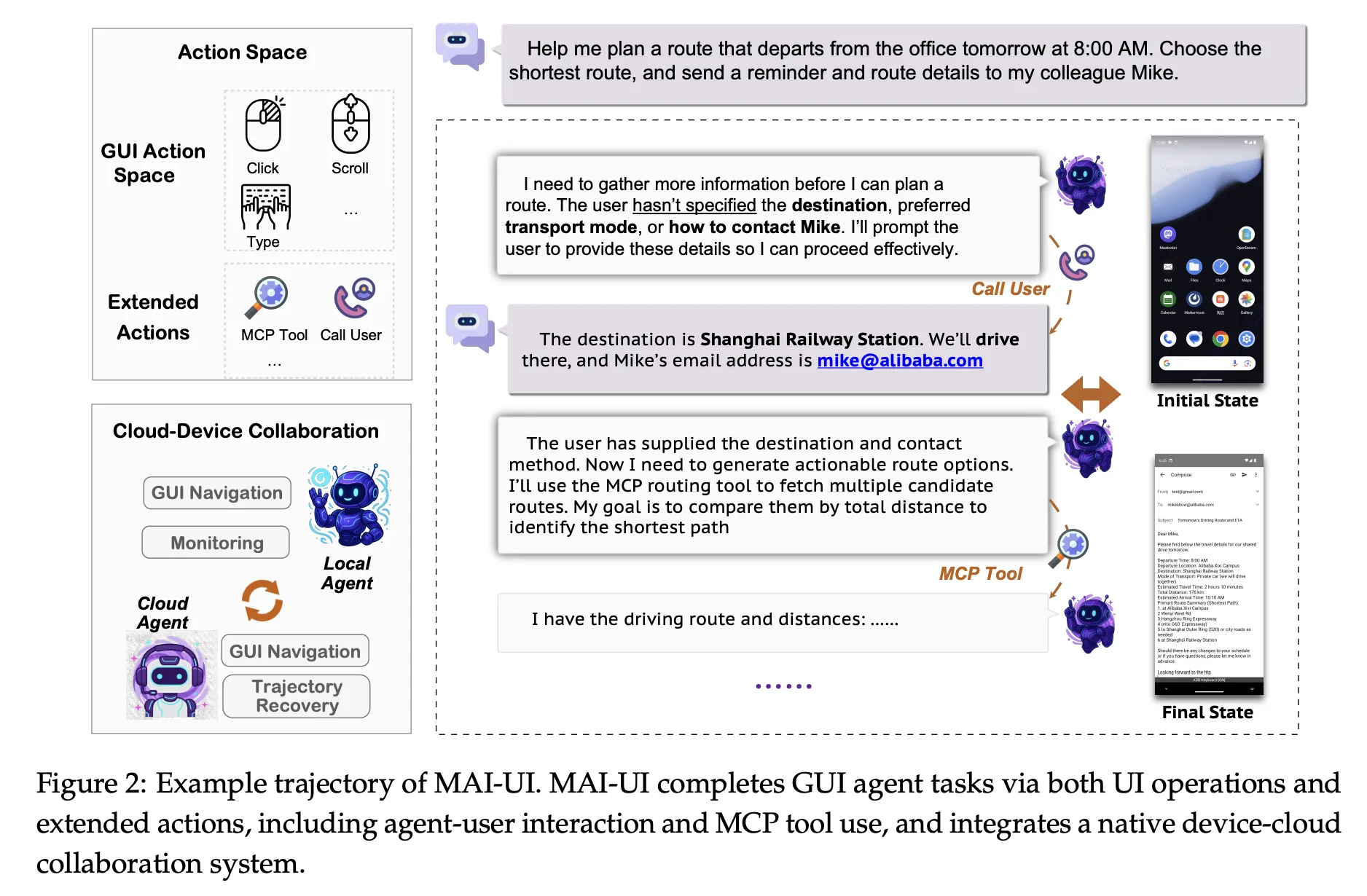

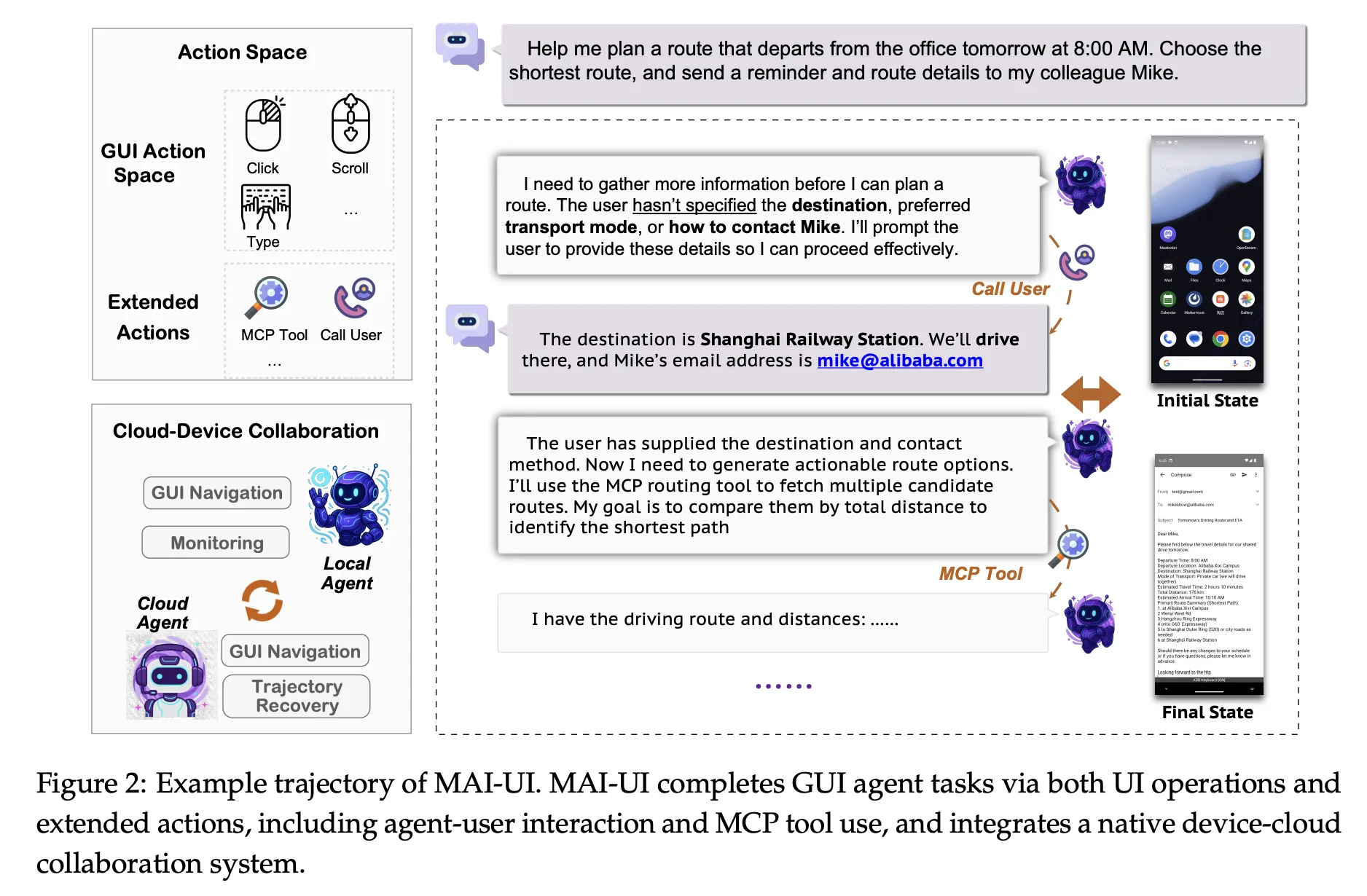

The action space includes standard operations such as clicking elements, swiping, entering text, and pressing system buttons. On top of this, MAI-UI adds explicit actions to answer user questions, ask the user for clarification when the goal is unclear, and invoke external tools through MCP tool calls. This lets the agent mix GUI steps, direct language responses, and API-level operations in a single trajectory.
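The mixed action space described above can be pictured as a small set of typed actions. The following is a minimal sketch, not MAI-UI's actual schema: every class name, field, and the `channel` helper are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Tuple, Union


# All action types below are illustrative assumptions, not the real schema.
@dataclass
class Click:
    x: int
    y: int


@dataclass
class Swipe:
    start: Tuple[int, int]
    end: Tuple[int, int]


@dataclass
class TypeText:
    text: str


@dataclass
class SystemButton:
    name: str  # e.g. "back" or "home"


@dataclass
class Answer:
    text: str  # direct language response to the user


@dataclass
class AskUser:
    question: str  # clarification request when the goal is unclear


@dataclass
class McpCall:
    tool: str
    arguments: Dict[str, Any] = field(default_factory=dict)


Action = Union[Click, Swipe, TypeText, SystemButton, Answer, AskUser, McpCall]


def channel(action: Action) -> str:
    """Classify an action as a GUI step, a user-facing message, or a tool call."""
    if isinstance(action, (Click, Swipe, TypeText, SystemButton)):
        return "gui"
    if isinstance(action, (Answer, AskUser)):
        return "user"
    if isinstance(action, McpCall):
        return "tool"
    raise TypeError(f"unknown action type: {type(action).__name__}")


# One trajectory that mixes all three channels (made-up task and tool name).
trajectory = [
    AskUser("Which month's bill should I export?"),
    McpCall("calendar.lookup", {"month": "March"}),
    Click(540, 1200),
    TypeText("March"),
    Answer("The March bill has been exported."),
]
channels = [channel(a) for a in trajectory]
```

Modeling the user-facing and tool actions as peers of `Click` and `Swipe` is what allows a single policy to interleave them freely within one episode.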

From a modeling perspective, MAI-UI integrates three components: a self-evolving navigation data pipeline that includes user interaction and MCP cases, an online RL framework that scales to hundreds of parallel Android instances and long contexts, and a native device-cloud collaboration system that routes execution based on task state and privacy constraints.
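The article only says that routing depends on task state and privacy constraints, so the rule below is a deliberately simple sketch under assumptions. The `TaskState` fields and the decision order are hypothetical and do not describe the actual MAI-UI router.

```python
from dataclasses import dataclass


@dataclass
class TaskState:
    # Both fields are assumptions made for this illustration.
    contains_private_data: bool  # e.g. passwords or payment details on screen
    local_agent_stuck: bool      # on-device model is no longer making progress


def route(state: TaskState) -> str:
    """Pick where the next step runs: 'device' or 'cloud'."""
    if state.contains_private_data:
        # The privacy constraint wins: sensitive steps never leave the device.
        return "device"
    if state.local_agent_stuck:
        # Escalate to the larger cloud model only when it is needed.
        return "cloud"
    return "device"
```

Checking the privacy flag first means a stuck task that also involves sensitive data still stays local, which matches the stated goal of keeping privacy-sensitive work on the device.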

GUI grounding with instruction logic

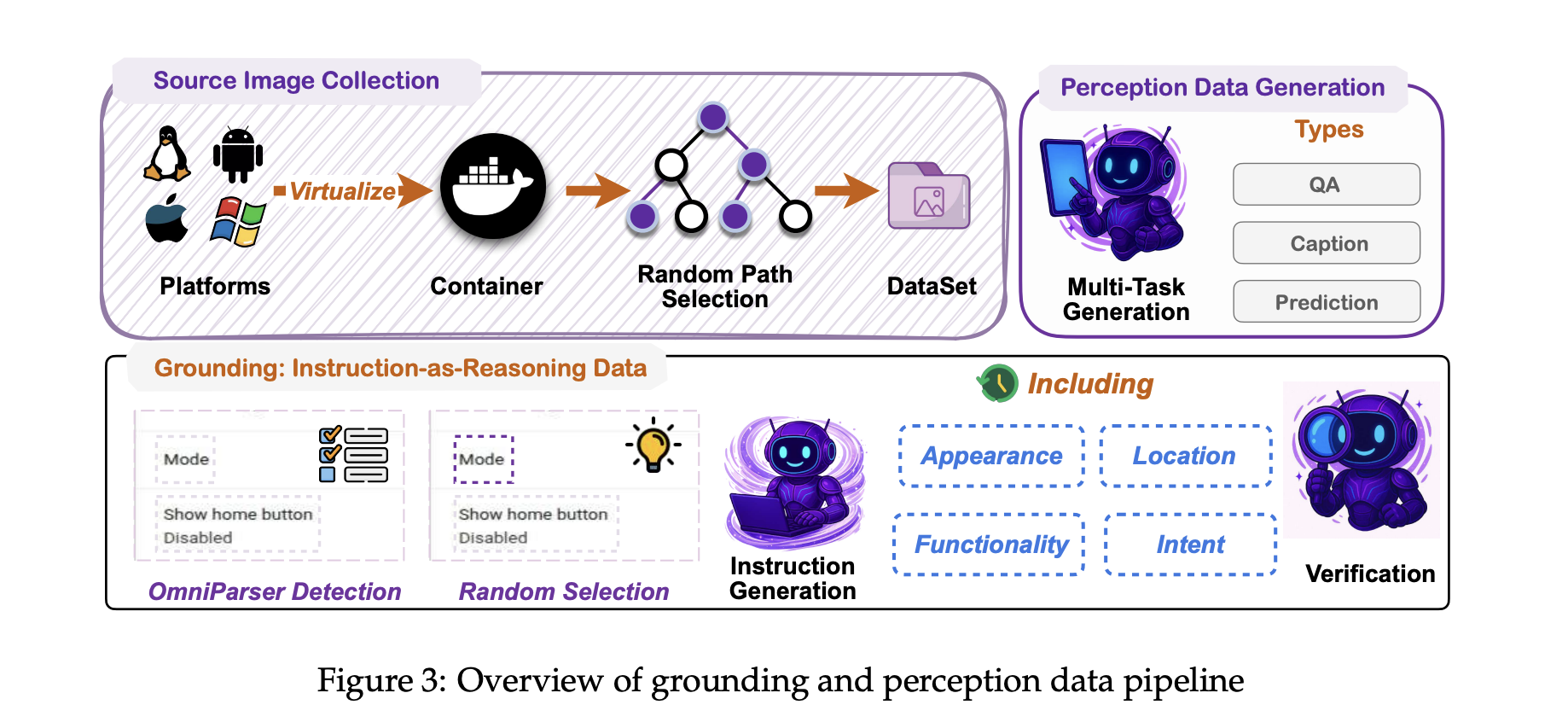

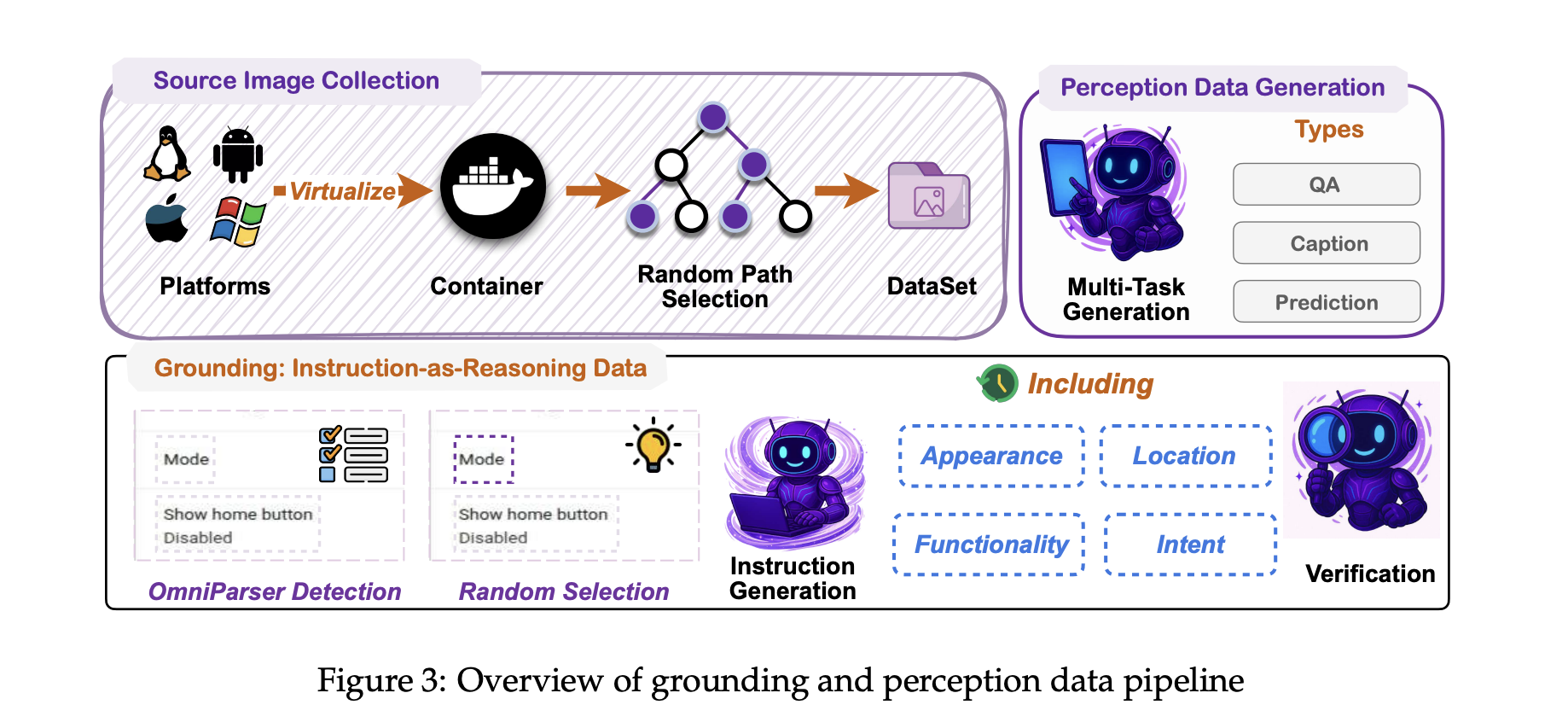

A core requirement for any GUI agent is grounding: mapping free-form language such as ‘Open Monthly Billing Settings’ to the right control on the screen. MAI-UI adopts a UI grounding strategy inspired by the earlier UI-INS work on multi-perspective instruction descriptions.

For each UI element, the training pipeline does not rely on a single caption. Instead, it generates multiple views of the same element, for example appearance, function, spatial location, and user intent. These instructions are treated as reasoning evidence for the model, which must select a point inside the correct bounding box. This reduces the impact of erroneous or under-specified instructions, a problem that UI-INS identified in existing datasets.

Ground truth boxes are collected from a mixture of curated GUI datasets and large-scale exploration of virtualized operating systems in containerized environments. Accessibility-tree or OCR-based parsers are used to align text metadata with pixel locations. The training objective combines supervised fine-tuning with a simple reinforcement signal that rewards correct point-in-box predictions and a valid output format.
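A point-in-box reward with a format check can be sketched in a few lines. The `(x, y)` output format, the reward weights, and the function names here are assumptions for illustration; the article does not specify them.

```python
import re
from typing import Optional, Tuple

# Assumed output format: a single "(x, y)" pair of integer pixel coordinates.
POINT_PATTERN = re.compile(r"^\s*\(\s*(\d+)\s*,\s*(\d+)\s*\)\s*$")

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def parse_point(output: str) -> Optional[Tuple[int, int]]:
    """Return the predicted point, or None if the output is malformed."""
    match = POINT_PATTERN.match(output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def grounding_reward(output: str, box: Box, format_bonus: float = 0.1) -> float:
    """Reward a valid format, plus 1.0 if the point lies inside the box."""
    point = parse_point(output)
    if point is None:
        return 0.0  # malformed output earns nothing
    x, y = point
    left, top, right, bottom = box
    inside = left <= x <= right and top <= y <= bottom
    return format_bonus + (1.0 if inside else 0.0)
```

For example, `grounding_reward("(50, 60)", (0, 0, 100, 100))` earns both terms, while a well-formed point outside the box earns only the format bonus.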

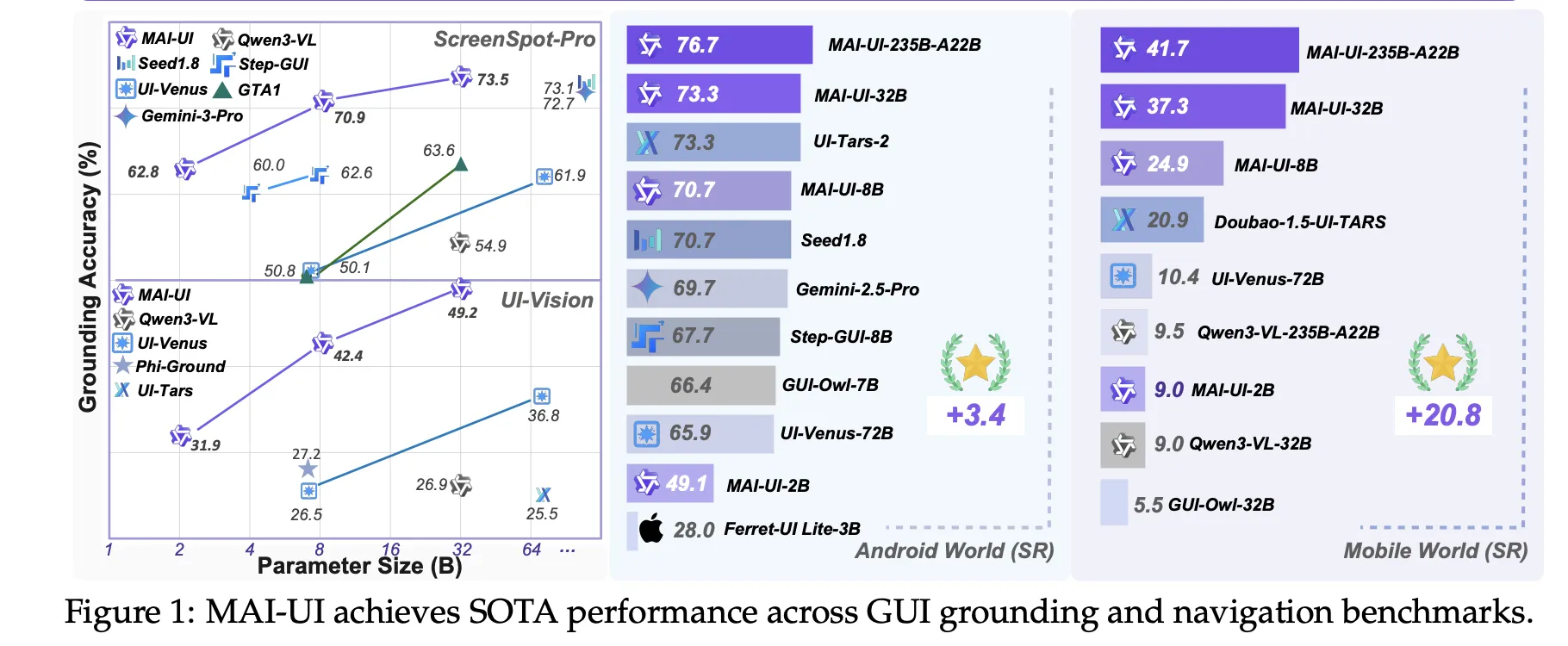

On public GUI grounding benchmarks, the resulting MAI-UI models reach 73.5 percent accuracy on ScreenSpot Pro with adaptive zoom-in, 91.3 percent on MMBench GUI L2, 70.9 percent on OSWorld G, and 49.2 percent on UI Vision. These numbers are ahead of Gemini 3 Pro and Seed1.8 on ScreenSpot Pro and significantly outperform earlier open models on UI Vision.

Self-evolving navigation data and MobileWorld

Navigation is harder than grounding because the agent must maintain context across multiple steps, possibly across applications, while interacting with the user and tools. To build robust navigation behavior, Tongyi Lab uses a self-evolving data pipeline.

Seed tasks come from app manuals, hand-designed scenarios, and filtered public data. Parameters such as dates, ranges, and filter values are perturbed to expand coverage, and object-level substitutions are applied while staying within the same use case. Multiple agents, together with human annotators, perform these tasks in the Android environment to generate trajectories. A judge model then evaluates these trajectories, keeping the longest correct prefix and filtering out low-quality segments. The next supervised training round uses the union of fresh human traces and high-quality model rollouts, so the data distribution gradually follows the current policy.
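The "longest correct prefix" filter can be illustrated as follows. This sketch assumes the judge model emits one boolean verdict per step, which the article does not confirm; the function name and the example steps are made up.

```python
from typing import List, Sequence


def longest_correct_prefix(steps: Sequence[str], verdicts: Sequence[bool]) -> List[str]:
    """Keep the steps up to, but not including, the first one judged incorrect."""
    kept: List[str] = []
    for step, is_correct in zip(steps, verdicts):
        if not is_correct:
            break
        kept.append(step)
    return kept


# A rollout that goes wrong at its third step still contributes two usable steps.
steps = ["open_app", "tap_search", "type_wrong_query", "tap_result"]
verdicts = [True, True, False, True]
prefix = longest_correct_prefix(steps, verdicts)
```

Truncating at the first error, rather than discarding the whole rollout, keeps partially successful trajectories useful as training data.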

MAI-UI is evaluated on MobileWorld, a benchmark from the same team that includes 201 tasks across 20 applications. MobileWorld explicitly mixes three categories: pure GUI tasks, agent-user interaction tasks that require natural language exchanges with the user, and MCP-augmented tasks that require tool calls.

On MobileWorld, MAI-UI reaches 41.7 percent overall success, a gain of approximately 20.8 points over the strongest end-to-end GUI baseline, and is competitive with agentic frameworks that use large proprietary planners such as Gemini 3 Pro.

Online RL in containerized Android environments

Static data is not enough for robustness in dynamic mobile apps. MAI-UI therefore uses an online RL framework in which the agent interacts directly with containerized Android virtual devices. The environment stack packages rooted AVD images and backend services into Docker containers, exposes standard reset and step operations through a service layer, and supports more than 35 self-hosted apps from the e-commerce, social, productivity, and enterprise categories.
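The reset and step operations suggest a gym-style loop. Below is a minimal in-memory sketch of that interface; `ToyAndroidEnv`, its string-valued screens, and the `rollout` helper are hypothetical stand-ins, and a real environment would return screenshots and apply GUI actions on an emulator instead.

```python
from typing import Callable, List, Tuple


class ToyAndroidEnv:
    """In-memory stand-in for one containerized device (purely illustrative)."""

    def __init__(self, goal_screen: str) -> None:
        self.goal_screen = goal_screen
        self.screen = "home"

    def reset(self) -> str:
        """Restore the initial state and return the first observation."""
        self.screen = "home"
        return self.screen

    def step(self, action: str) -> Tuple[str, bool]:
        """Apply an action (here: the name of the screen to open)."""
        self.screen = action
        return self.screen, self.screen == self.goal_screen


def rollout(env: ToyAndroidEnv, policy: Callable[[str], str],
            max_steps: int) -> Tuple[List[str], bool]:
    """Run one episode and return the actions taken plus a success flag."""
    observation = env.reset()
    actions: List[str] = []
    for _ in range(max_steps):
        action = policy(observation)
        actions.append(action)
        observation, done = env.step(action)
        if done:
            return actions, True
    return actions, False  # step budget exhausted


# A scripted policy that navigates home -> settings -> billing.
SCRIPT = {"home": "settings", "settings": "billing"}
env = ToyAndroidEnv(goal_screen="billing")
actions, success = rollout(env, lambda screen: SCRIPT[screen], max_steps=15)
```

Because every environment exposes the same two calls, many instances can sit behind a service layer and be driven in parallel by the same rollout code.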

The RL setup uses an asynchronous on-policy method, GRPO, implemented on top of verl. It combines tensor, pipeline, and context parallelism, similar to Megatron-style training, so that the model can learn from trajectories with up to 50 steps and very long token sequences. Rewards come from rule-based validators or model judges that detect task completion, along with penalties for obvious looping behavior. Only the most recent successful trajectories are kept in task-specific buffers to stabilize learning.
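To make the reward shaping concrete, here is a small sketch of a looping penalty combined with the standard GRPO group-relative advantage, which normalizes each rollout's reward by the mean and standard deviation of its group. The penalty size, the repeat window, and all function names are assumptions, not values from the paper.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def loop_penalty(actions: Sequence[str], window: int = 3, penalty: float = 0.5) -> float:
    """Return `penalty` if any action repeats `window` times in a row."""
    run = 1
    for previous, current in zip(actions, actions[1:]):
        run = run + 1 if current == previous else 1
        if run >= window:
            return penalty
    return 0.0


def trajectory_reward(success: bool, actions: Sequence[str]) -> float:
    """Task-completion reward minus the looping penalty."""
    return (1.0 if success else 0.0) - loop_penalty(actions)


def group_relative_advantages(rewards: Sequence[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: (reward - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four rollouts of the same task form one group.
rewards = [
    trajectory_reward(True, ["tap", "type", "tap"]),
    trajectory_reward(False, ["tap", "tap", "tap"]),  # failed and looped
    trajectory_reward(True, ["swipe", "tap"]),
    trajectory_reward(False, ["tap", "swipe"]),
]
advantages = group_relative_advantages(rewards)
```

Since advantages are computed relative to the group, a task on which every rollout gets the same reward contributes no gradient signal, which is one reason varied rollouts per task matter.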

Scaling this RL environment matters in practice. The research team shows that increasing the number of parallel GUI environments from 32 to 512 improves navigation success by about 5.2 percentage points, and increasing the allowed environment steps from 15 to 50 adds about 4.3 points.

On the AndroidWorld benchmark, which evaluates online navigation in a standard Android app suite, the largest MAI-UI variant reaches 76.7 percent success, surpassing UI-TARS-2, Gemini 2.5 Pro, and Seed1.8.

Key takeaways

- Unified GUI agent family for mobile: MAI-UI is a Qwen3 VL based family of GUI agents from 2B to 235B A22B, designed for real-world mobile deployment with native agent-user interaction, MCP tool calls, and device-cloud routing, rather than only for static benchmarks.

- State-of-the-art GUI grounding and navigation: The models reach 73.5 percent on ScreenSpot Pro, 91.3 percent on MMBench GUI L2, 70.9 percent on OSWorld G, and 49.2 percent on UI Vision, and set a new state of the art of 76.7 percent on AndroidWorld mobile navigation, surpassing UI-TARS-2, Gemini 2.5 Pro, and Seed1.8.

- Realistic MobileWorld evaluation with interactions and tools: On the MobileWorld benchmark with 201 tasks in 20 apps, MAI-UI 235B A22B reaches 41.7 percent success overall, with 39.7 percent on pure GUI tasks, 51.1 percent on agent-user interaction tasks, and 37.5 percent on MCP-augmented tasks, surpassing the best end-to-end GUI baseline, Doubao 1.5 UI TARS, by 20.8 points.

- Scalable online RL in containerized Android: MAI-UI uses an online GRPO-based RL framework on a containerized Android environment, where scaling from 32 to 512 parallel environments adds approximately 5.2 points in navigation success and increasing the environment step budget from 15 to 50 adds about 4.3 points.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of Marktechpost, an Artificial Intelligence media platform known for in-depth coverage of Machine Learning and Deep Learning news that is both technically sound and easily understood by a wide audience. The platform has over 2 million monthly views, which reflects its popularity among readers.