Cisco and Splunk have introduced Cisco Time Series ModelA univariate zero shot time series foundation model designed for observation and safety metrics. It is released as an open weight checkpoint on Hugging Face under the Apache 2.0 license, and targets workload prediction without task specific fine tuning. The model extends TimesFM 2.0 with an explicit multiresolution architecture that combines coarse and fine history in a single context window.

Why do observations need a multi-resolution context?,

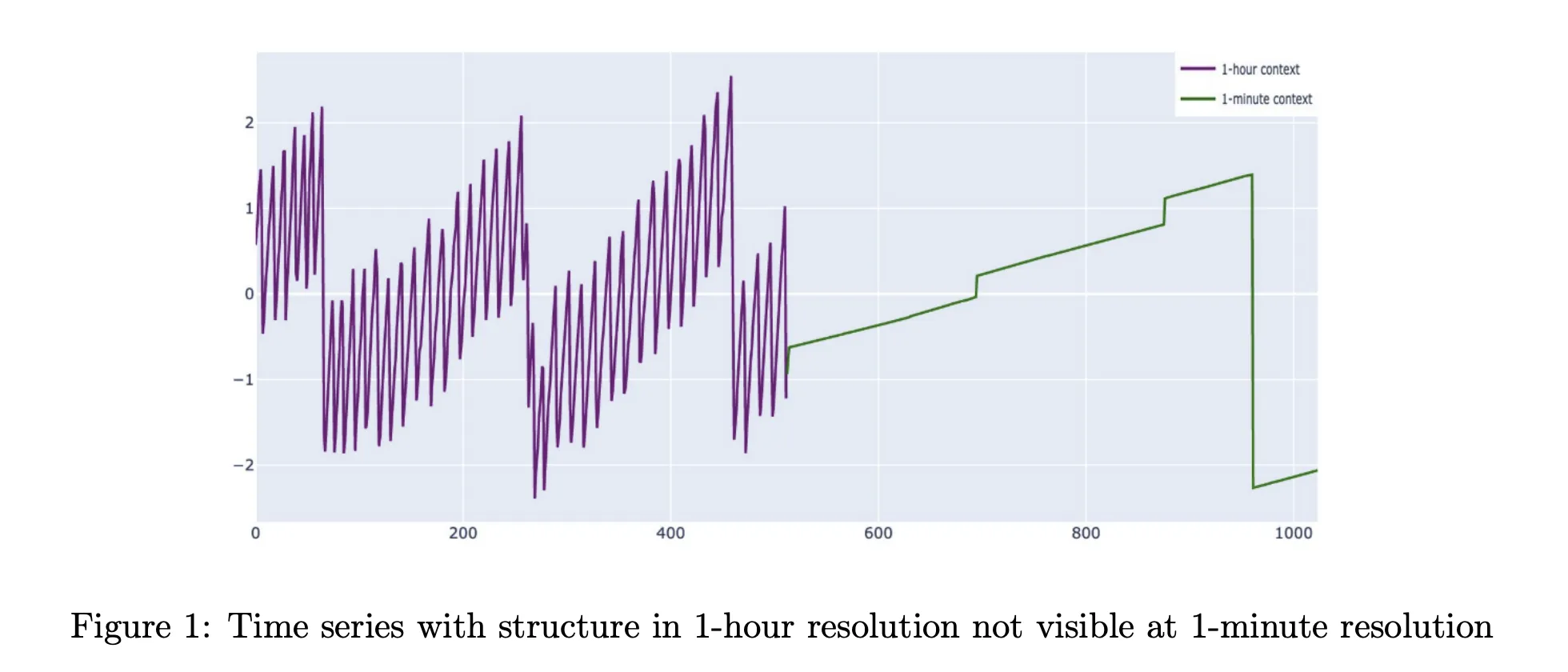

Production metrics are not simple single-scale indicators. Weekly patterns, long-term growth and saturation are visible only at coarser resolutions. Saturation events, traffic spikes and event dynamics are visible at 1 minute or 5 minute resolution. Normal time series Foundation models operate at a single resolution with a reference window between 512 and 4096 points, while TimesFM 2.5 increases this to 16384 points. For 1 minute of data this still covers a couple of weeks at most and often less than that.

This is a problem in observation where data platforms often only retain old data in aggregated form. Finer grained samples are depleted and only survive as a 1 hour rollup. The Cisco Time Series Model is built for this storage pattern. It treats coarse history as first-order input which improves predictions at fine resolution. The architecture works directly on the multiresolution context, rather than pretending that all inputs reside on the same grid.

Multiresolution input and forecasting objectives

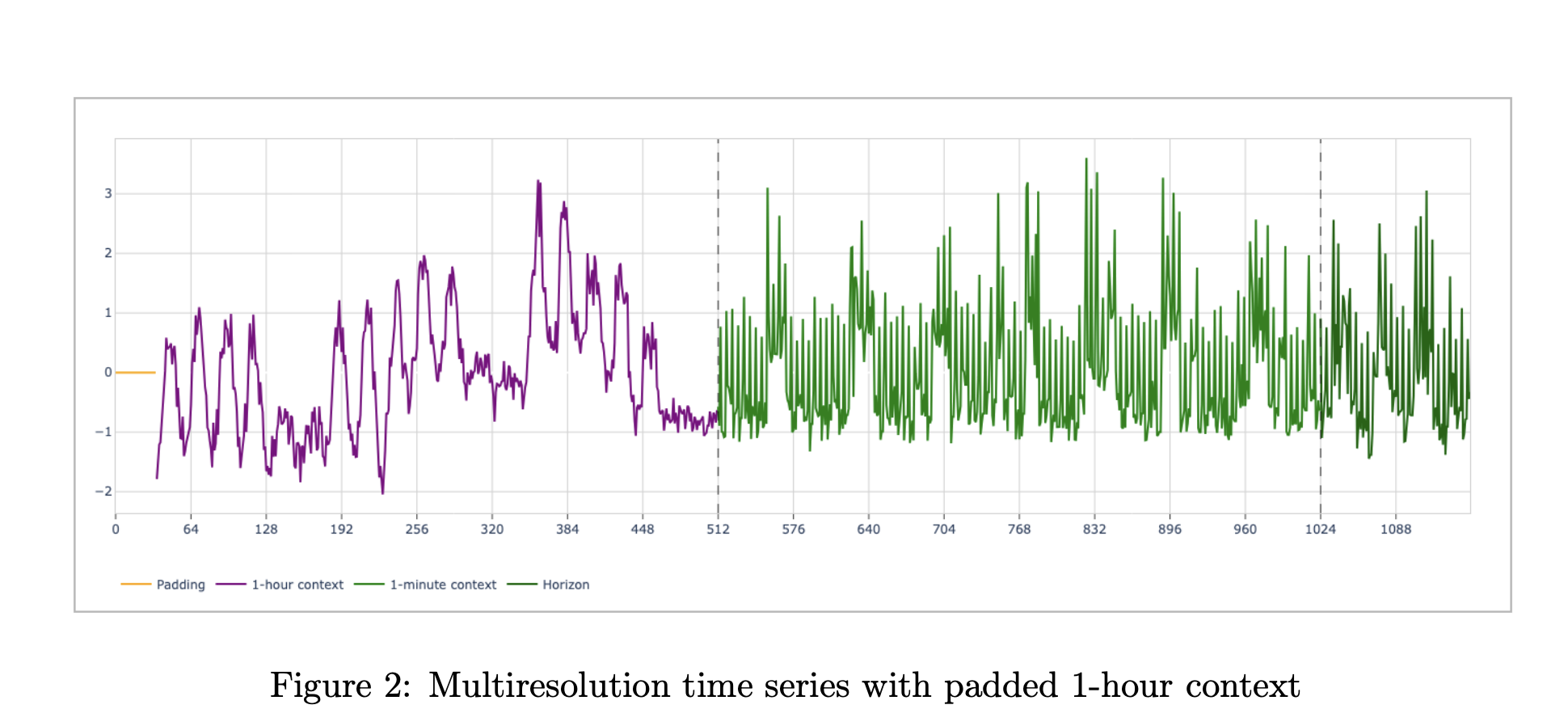

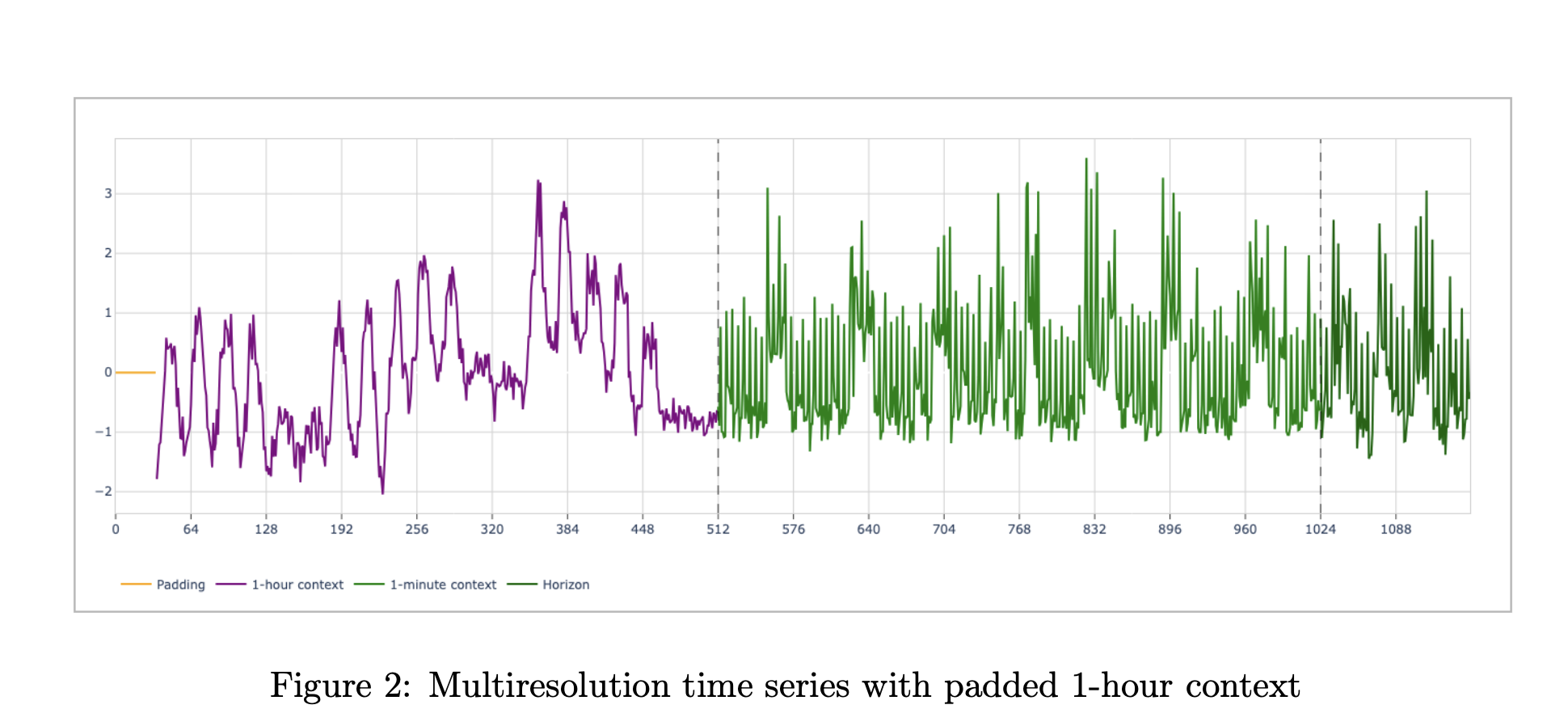

Formally, the model consumes a pair of contexts, (x).CxFCoarse references (x_c) and fine references (x_f) are each up to 512 in length. difference of (x)C) is fixed at 60 times the difference of (x)FA typical observing setup uses 512 hours of 1-hour aggregates and 512 minutes of 1-minute values. Both series end at the same forecast cut point. The model predicts horizons of 128 points at fine resolution, with a set of means and quantiles ranging from 0.1 to 0.9.

Architecture, TimesFM Core with Resolution Embedding

Internally, the Cisco time series model reuses the TimesFM patch based decoder stack. The input is normalized, patched into non-overlapping fragments, and passed through a residual embedding block. The transformer core contains only 50 decoder layers. A final residual block maps the tokens back to the horizon. The research team removes the positional embedding and instead relies on patch ordering, multiresolution structure, and a new resolution embedding to encode the structure.

Two additional architectures are multi-resolution aware. A special token, often called ST in reports, is inserted between the coarse and fine token streams. It resides in sequence space and marks the boundary between resolutions. Resolution embeddings, often called REs, are added to the model space. One embedding vector is used for all coarse tokens and the other is used for all fine tokens. The ablation study in the paper shows that both components improve quality, especially in long reference scenarios.

The decode process is also multiresolution. The model outputs mean and quantitative forecasts for fine resolution horizons. During long horizon decoding, newly predicted fine points are added to the fine context. The set of these predictions updates the coarse context. This creates an autoregressive loop in which both resolutions evolve simultaneously during forecasting.

Training data and recipe

The Cisco time series model is trained by continuous pretraining on top of the TimesFM weights. The final model has 500 million parameters. AdamW is used for biases, norms and embeddings in training, and Muon is used for hidden layers with a cosine learning rate schedule. The loss combines the mean square error on the mean forecast with the quantile loss at quantiles ranging from 0.1 to 0.9. The team trains for 20 epochs and chooses the best checkpoint based on validation loss.

The dataset is large and skewed towards observation. The Splunk team reports approximately 400 million metrics time series from their own Splunk Observability Cloud deployment, collected at 1-minute resolution and partially aggregated at 5-minute resolution over 13 months. The research team says the final corpus includes more than 300 billion unique data points, including approximately 35 percent 1-minute observability, 16.5 percent 5-minute observability, 29.5 percent GIFT Eval pretraining data, 4.5 percent Chronos dataset, and 14.5 percent synthetic KernelSynth series.

Benchmark results on observability and gift evaluation

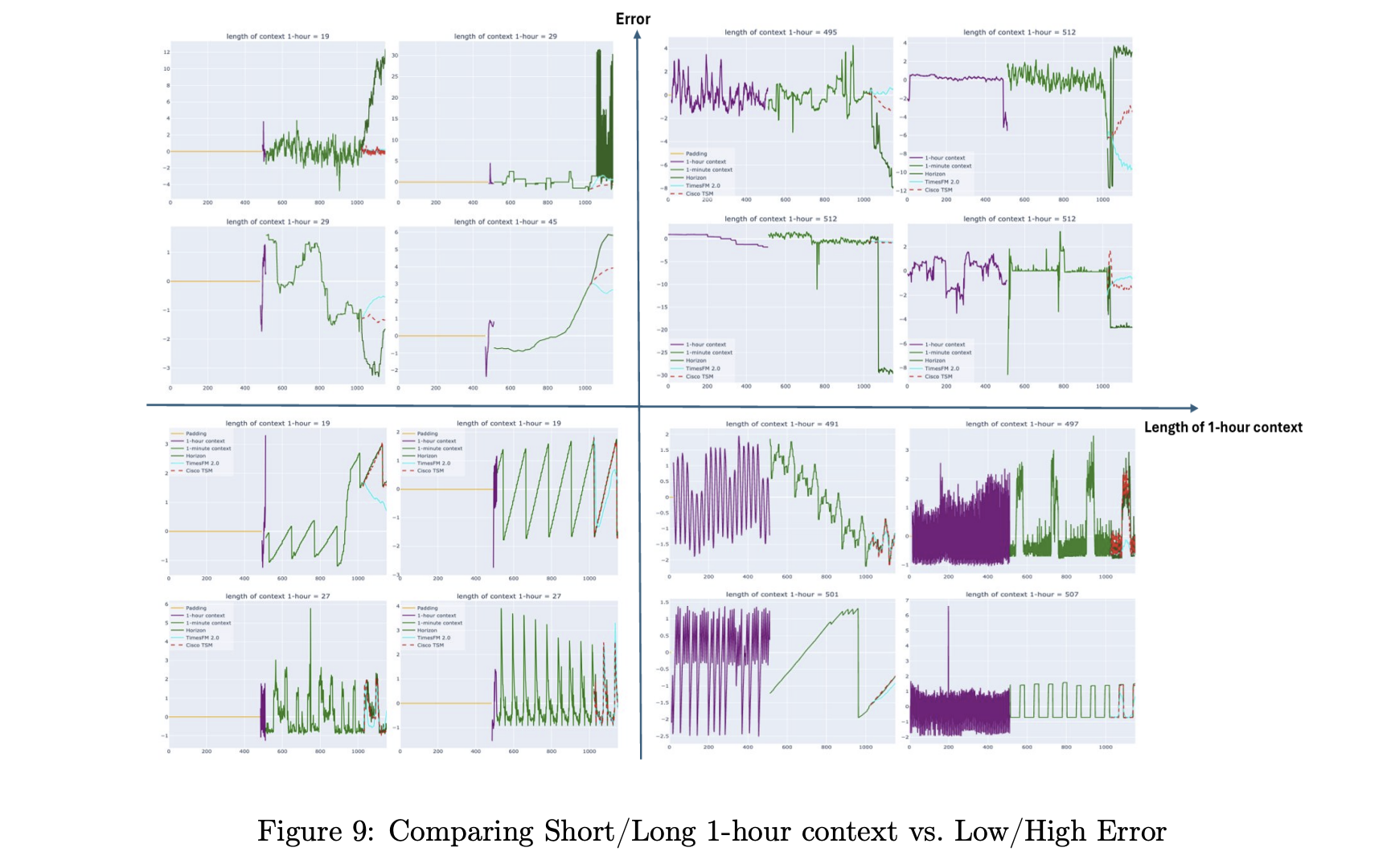

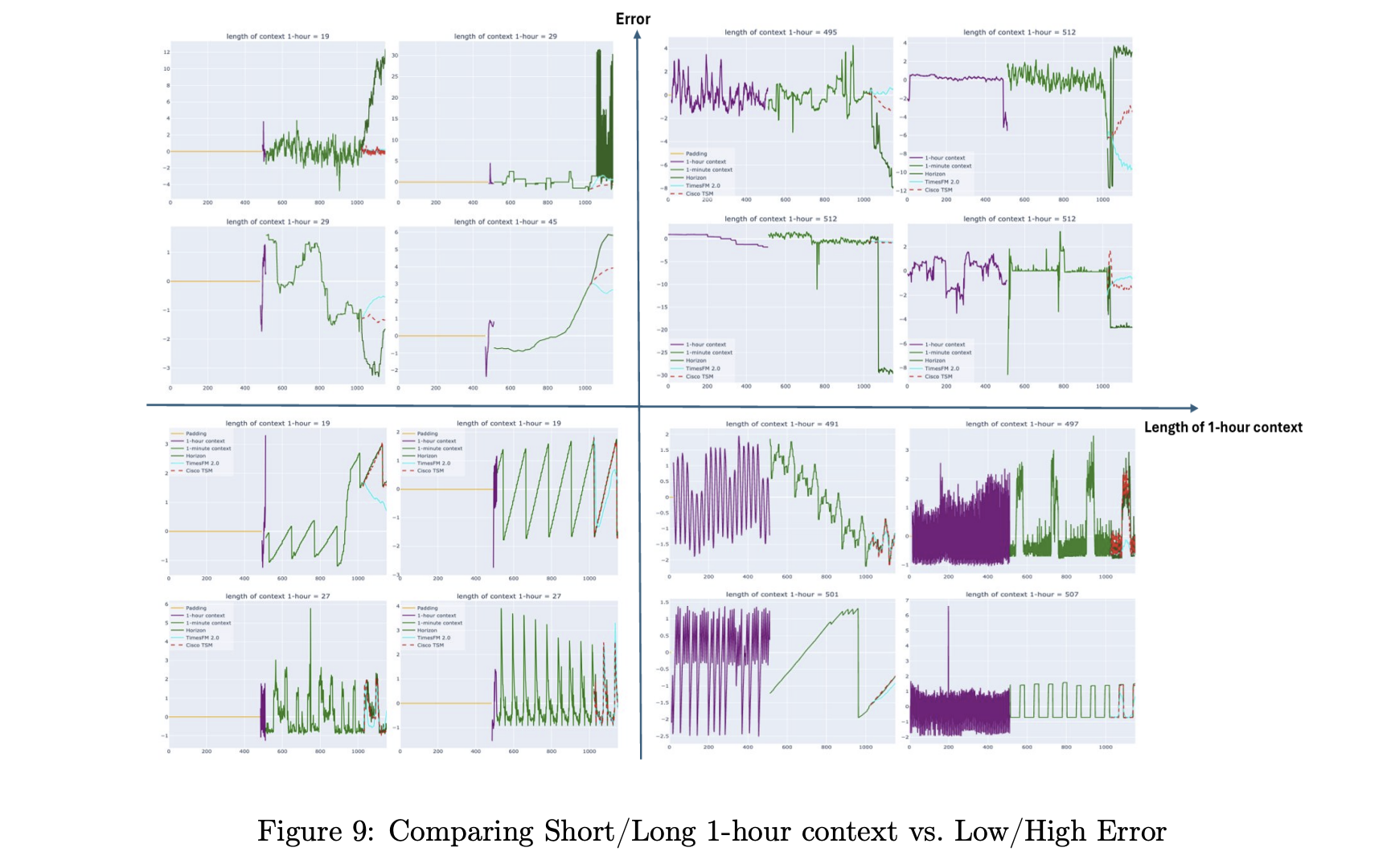

The research team evaluates the model on two main benchmarks. The first is an observational dataset obtained from Splunk metrics at 1 minute and 5 minute resolution. The second is a filtered version of GIFT Eval, where datasets that leak the TimesFM 2.0 training data are removed.

On observational data at 1 min resolution with 512 fine steps, the Cisco time series model using a 512 multiresolution reference reduces the mean absolute error from 0.6265 for TimesFM 2.5 and from 0.6315 to 0.4788 for TimesFM 2.0, with similar improvements in the mean absolute scale error and constant ranked likelihood score. Similar benefits are seen at 5 min resolution. At both resolutions, the model outperforms the Chronos 2, Chronos Bolt, Toto, and AutoARIMA baselines under the normalized metrics used in the paper.

On the filtered GIFT Eval benchmark, the Cisco Time Series model matches the base TimesFM 2.0 model and performs competitively with TimesFM-2.5, Chronos-2, and Toto. The main claim is not universal dominance, but preservation of general forecast quality while adding a strong advantage over long reference windows and observational workloads.

key takeaways

- The Cisco Time Series Model is a univariate zero shot time series foundation model that simply extends the TimesFM 2.0 decoder backbone with a multiresolution architecture for observation and security metrics.

- The model consumes a multi-resolution context, consisting of a coarse chain and a fine chain, each up to 512 steps long, where the coarse resolution is 60 times the fine resolution, and it predicts in 128 fine resolution steps with mean and quantile outputs.

- The Cisco time series model has been trained on more than 300B data points, more than half of the observability, combining Splunk machine data, GIFT Eval, Chronos dataset, and synthetic KernelSynth series, and has about 0.5B parameters.

- On observation benchmarks at 1 min and 5 min resolution, the model achieves lower error than TimesFM 2.0, Chronos, and other baselines, while maintaining competitive performance on the general purpose GIFT Eval benchmark.

check it out paper, blog And Model card on HFFeel free to check us out GitHub page for tutorials, code, and notebooksAlso, feel free to follow us Twitter And don’t forget to join us 100k+ ml subreddit and subscribe our newsletterwait! Are you on Telegram? Now you can also connect with us on Telegram.

Asif Razzaq Marktechpost Media Inc. Is the CEO of. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. Their most recent endeavor is the launch of MarketTechPost, an Artificial Intelligence media platform, known for its in-depth coverage of Machine Learning and Deep Learning news that is technically robust and easily understood by a wide audience. The platform boasts of over 2 million monthly views, which shows its popularity among the audience.