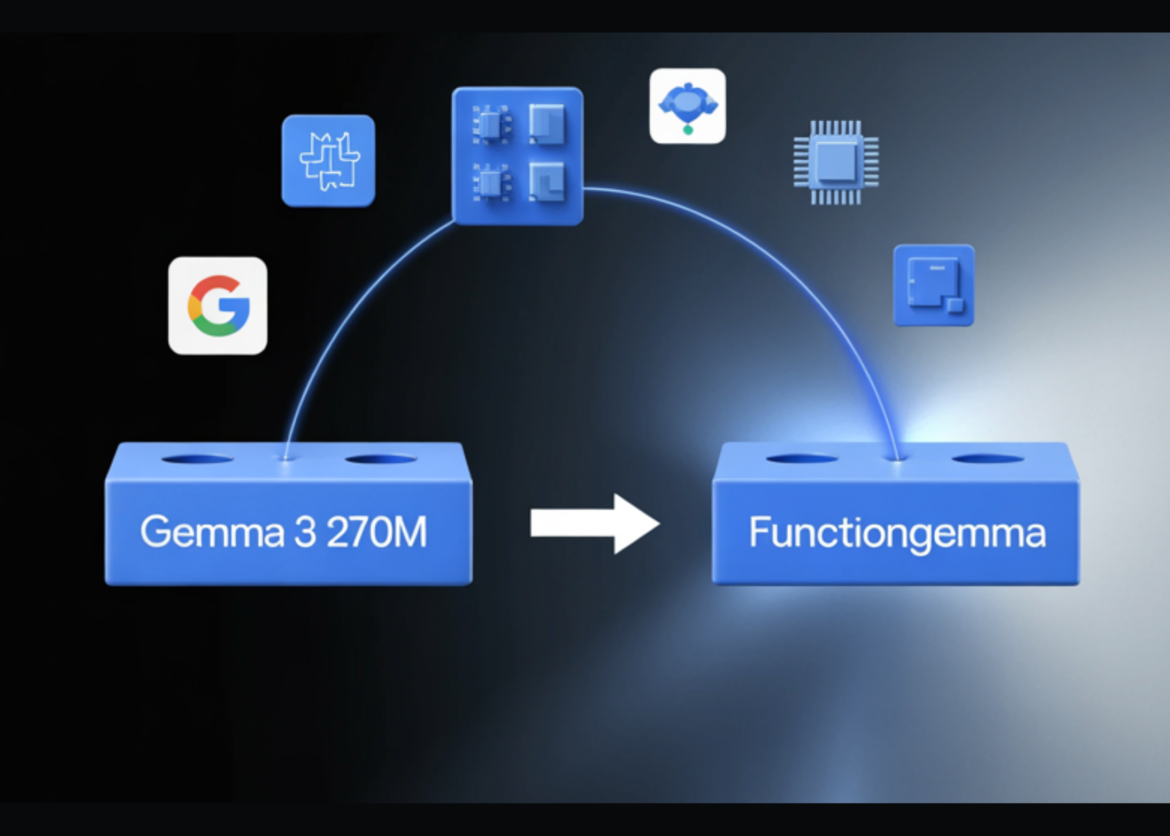

Google has released FunctionGemma, a specialized version of the Gemma 3 270M model that is trained specifically for function calling and designed to run as an edge agent that maps natural language to executable API actions.

But what is FunctionGemma?

FunctionGemma is a 270M parameter, text-only transformer based on Gemma 3 270M. It keeps the same architecture as Gemma 3 and is released as an open model under the Gemma license, but its training objective and chat format are dedicated to function calling rather than free-form dialogue.

The model is intended to be fine-tuned for specific function calling tasks. It is not positioned as a general chat assistant. The primary design goal is to translate user instructions and tool definitions into structured function calls, then optionally summarize tool responses for the user.

From an interface perspective, FunctionGemma is presented as a standard causal language model. Input and output are text sequences, with an input context of 32K tokens and an output budget of up to 32K tokens per request, minus the tokens consumed by the input.
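Because the window is shared, a long prompt (for example, one carrying many tool definitions) leaves less room for generation. A minimal sketch of that arithmetic follows; it assumes "32K" means 32 × 1024 tokens, and the function name is illustrative rather than part of any Gemma API.

```python
CONTEXT_WINDOW = 32 * 1024  # assumption: "32K" interpreted as 32,768 tokens


def remaining_output_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens are left for generation once the prompt is counted."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # The output budget shrinks as the prompt grows and never goes below zero.
    return max(context_window - prompt_tokens, 0)


# A prompt of 30,000 tokens leaves 2,768 tokens for the model's reply.
print(remaining_output_budget(30_000))
```

In practice the prompt length would come from the tokenizer, so the figure should be recomputed for every request.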

Architecture and Training Data

The model uses the Gemma 3 transformer architecture and the same 270M parameter scale as Gemma 3 270M. The training and runtime stack reuses research and infrastructure built for Gemini, including JAX and ML Pathways on large TPU clusters.

FunctionGemma uses Gemma’s 256K vocabulary, which is optimized for JSON structures and multilingual text. This improves token efficiency for schemas and tool responses and reduces sequence length for edge deployments where latency and memory are tight.

The model is trained on 6T tokens with a knowledge cutoff in August 2024. The dataset focuses on two main categories:

- Public tools and API definitions

- Tool usage interactions that include prompts, function calls, function responses, and natural language follow-up messages that summarize output or request clarification

This training teaches both syntax (which function to call and how to format arguments) and intent (when to call a function and when to ask for more information).
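To make the two categories concrete, here is a rough sketch of what a tool definition and a tool-usage interaction could look like. Every field name, the `get_weather` tool, and the values are hypothetical illustrations, not the actual training schema or data. The small helper shows the condition under which asking for clarification is the sensible move: a required argument is still missing.

```python
from typing import Any

# Category 1 (hypothetical): a tool definition written in JSON-Schema style.
tool_definition: dict[str, Any] = {
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}

# Category 2 (hypothetical): one interaction with a prompt, a clarification request,
# a function call, a function response, and a natural language summary.
interaction: list[dict[str, Any]] = [
    {"kind": "prompt", "text": "What's the weather like?"},
    {"kind": "clarification", "text": "Which city do you mean?"},
    {"kind": "prompt", "text": "Paris."},
    {"kind": "function_call", "name": "get_weather", "arguments": {"city": "Paris"}},
    {"kind": "function_response", "result": {"temperature_c": 18}},
    {"kind": "summary", "text": "It is 18 degrees Celsius in Paris."},
]


def missing_required(tool: dict[str, Any], arguments: dict[str, Any]) -> list[str]:
    """List required parameters that the arguments gathered so far do not cover."""
    required = tool["parameters"].get("required", [])
    return [name for name in required if name not in arguments]


# After the first prompt no city is known, so a clarification is appropriate.
print(missing_required(tool_definition, {}))
```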

Conversation Format and Control Tokens

FunctionGemma does not use a free-form chat format. It requires a strict conversation template that separates roles and tool-related regions. Each conversation turn is wrapped with its role, which is developer, user, or model.

Within those turns, FunctionGemma depends on a fixed set of control token pairs.

These markers let the model separate natural language text from function schemas and execution results. The Hugging Face apply_chat_template API and the official Gemma templates generate this structure automatically for messages and tool lists.
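A minimal sketch of how a prompt could be rendered through `apply_chat_template` in the Hugging Face Transformers library. The model id, the `set_flashlight` tool, and the message contents are assumptions made for illustration; check the model card for the exact identifier and the recommended developer prompt. The tokenizer import sits inside the function so the data structures can be inspected without installing the library.

```python
from typing import Any

MODEL_ID = "google/functiongemma-270m-it"  # assumption: verify the exact id on the model card

# Hypothetical tool, described in the JSON-Schema function format that
# apply_chat_template accepts through its `tools` argument.
TOOLS: list[dict[str, Any]] = [
    {
        "type": "function",
        "function": {
            "name": "set_flashlight",
            "description": "Turn the device flashlight on or off.",
            "parameters": {
                "type": "object",
                "properties": {
                    "enabled": {"type": "boolean", "description": "True to turn the light on."}
                },
                "required": ["enabled"],
            },
        },
    }
]

# Roles follow the developer / user / model convention described above.
MESSAGES: list[dict[str, str]] = [
    {"role": "developer", "content": "You can call the provided functions."},
    {"role": "user", "content": "Turn on the flashlight"},
]


def render_prompt(model_id: str = MODEL_ID) -> str:
    """Return the templated prompt text; fetches the tokenizer on first use."""
    from transformers import AutoTokenizer  # lazy import: requires `pip install transformers`

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return tokenizer.apply_chat_template(
        MESSAGES, tools=TOOLS, add_generation_prompt=True, tokenize=False
    )
```

Calling `render_prompt()` needs network access and, for Gemma models, usually an accepted license on Hugging Face. Printing the result is a quick way to see the control tokens the template inserts instead of writing them by hand.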

Fine Tuning and Mobile Actions Performance

Out of the box, FunctionGemma is already trained for common tool use. However, the official Mobile Actions guide and the model card emphasize that small models reach production-level reliability only after task-specific fine-tuning.

The Mobile Actions demo uses a dataset where each example exposes a small set of tools for Android system operations, such as creating a contact, setting a calendar event, controlling the flashlight, and viewing a map. FunctionGemma learns to map utterances like ‘create a calendar event for lunch tomorrow’ or ‘turn on the flashlight’ to those tools with structured arguments.
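Once the model has produced a structured call, the application still has to route it to real code. The sketch below shows one way to do that with a small registry. The tool names, their parameters, and the `{"name": ..., "arguments": ...}` call shape are hypothetical and do not reproduce the Mobile Actions dataset or the model's raw output format.

```python
from typing import Any, Callable


def create_calendar_event(title: str, date: str) -> str:
    """Stand-in for a real calendar integration."""
    return f"event '{title}' on {date}"


def set_flashlight(enabled: bool) -> str:
    """Stand-in for a real flashlight toggle."""
    return "flashlight on" if enabled else "flashlight off"


# Registry that maps tool names to the Python callables implementing them.
TOOL_REGISTRY: dict[str, Callable[..., str]] = {
    "create_calendar_event": create_calendar_event,
    "set_flashlight": set_flashlight,
}


def dispatch(call: dict[str, Any]) -> str:
    """Execute one parsed function call and return its result."""
    name = call["name"]
    if name not in TOOL_REGISTRY:
        raise KeyError(f"unknown tool: {name}")
    # Arguments are passed by keyword, so a malformed call raises TypeError.
    return TOOL_REGISTRY[name](**call.get("arguments", {}))


# 'turn on the flashlight' would ideally be parsed into this call.
print(dispatch({"name": "set_flashlight", "arguments": {"enabled": True}}))
```

Rejecting unknown tool names and malformed arguments at this layer keeps a wrong prediction from turning into an unintended device action.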

On the Mobile Actions evaluation, the base FunctionGemma model reaches 58 percent accuracy on the held-out test set. After fine-tuning with the public cookbook recipe, accuracy rises to 85 percent.
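The article does not say how accuracy is scored. One plausible reading is exact match on the function name and arguments, which the sketch below implements. Treat the metric definition and the sample calls as assumptions, not as the official evaluation.

```python
from typing import Any

Call = dict[str, Any]


def call_accuracy(predicted: list[Call], expected: list[Call]) -> float:
    """Fraction of examples whose predicted call matches the reference exactly."""
    if len(predicted) != len(expected):
        raise ValueError("predicted and expected must have the same length")
    if not expected:
        raise ValueError("cannot score an empty test set")
    # Dict equality compares the function name and every argument value.
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


# Hypothetical references and predictions; the third prediction has a wrong argument.
expected = [
    {"name": "set_flashlight", "arguments": {"enabled": True}},
    {"name": "create_contact", "arguments": {"name": "Ada"}},
    {"name": "set_flashlight", "arguments": {"enabled": False}},
]
predicted = [
    {"name": "set_flashlight", "arguments": {"enabled": True}},
    {"name": "create_contact", "arguments": {"name": "Ada"}},
    {"name": "set_flashlight", "arguments": {"enabled": True}},
]
score = call_accuracy(predicted, expected)  # two of three calls match
```

Exact match is strict: a call with the right function but one wrong argument counts as a miss, which is usually the desired behavior when the call triggers a device action.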

Edge Agent and Reference Demo

The main deployment targets for FunctionGemma are edge agents that run locally on phones, laptops, and small accelerators like the NVIDIA Jetson Nano. The small parameter count (0.3B) and support for quantization allow inference with low memory use and low latency on consumer hardware.

Google offers several reference experiences through the Google AI Edge Gallery:
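A back-of-the-envelope estimate shows why the parameter count and quantization matter for memory. The sketch counts weights only and assumes every parameter is stored at the listed width. Real deployments also need memory for activations, the KV cache, and runtime overhead, and quantized formats often keep some tensors at higher precision.

```python
PARAMS = 270_000_000  # 270M parameters, as stated for FunctionGemma

# Storage width per parameter for a few common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_mb(params: int, bytes_per_param: float) -> float:
    """Approximate size of the weights in megabytes (1 MB = 10**6 bytes)."""
    return params * bytes_per_param / 1e6


for precision, width in BYTES_PER_PARAM.items():
    print(f"{precision}: ~{weight_memory_mb(PARAMS, width):.0f} MB")
# fp32: ~1080 MB
# fp16/bf16: ~540 MB
# int8: ~270 MB
# int4: ~135 MB
```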

- Mobile Actions shows a fully offline, assistant-style agent for device control, using FunctionGemma fine-tuned on the Mobile Actions dataset and deployed on the device.

- Tiny Garden is a voice-controlled game where the model decomposes commands like “plant sunflowers in the top row and water them” into domain-specific actions such as plant_seed and water_plots with explicit grid coordinates.

- FunctionGemma Physics Playground runs entirely in the browser using Transformers.js and lets users solve physics puzzles through natural language instructions that the model converts into simulation actions.
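The garden example can be pictured as a short plan of function calls applied in order to a grid. In this sketch only the action names `plant_seed` and `water_plots` come from the demo description. The argument names, the grid representation, and the plan format are hypothetical.

```python
from typing import Any, Optional

Cell = dict[str, Any]
Grid = list[list[Cell]]
Coords = list[tuple[int, int]]


def make_grid(rows: int, cols: int) -> Grid:
    """Create an empty garden in which every plot is unplanted and dry."""
    return [[{"seed": None, "watered": False} for _ in range(cols)] for _ in range(rows)]


def plant_seed(grid: Grid, seed: Optional[str], cells: Coords) -> None:
    """Plant the given seed in each (row, col) plot."""
    for row, col in cells:
        grid[row][col]["seed"] = seed


def water_plots(grid: Grid, cells: Coords) -> None:
    """Water each (row, col) plot."""
    for row, col in cells:
        grid[row][col]["watered"] = True


ACTIONS = {"plant_seed": plant_seed, "water_plots": water_plots}


def run_plan(grid: Grid, plan: list[dict[str, Any]]) -> None:
    """Apply each step of a multi-step plan in order."""
    for step in plan:
        ACTIONS[step["name"]](grid, **step["arguments"])


# "plant sunflowers in the top row and water them" on a 3x3 garden.
top_row = [(0, col) for col in range(3)]
plan = [
    {"name": "plant_seed", "arguments": {"seed": "sunflower", "cells": top_row}},
    {"name": "water_plots", "arguments": {"cells": top_row}},
]
grid = make_grid(3, 3)
run_plan(grid, plan)
```

Keeping the plan as plain data means the game can validate or reject each step before any state changes.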

These demos show that a 270M parameter function caller can support multi-step logic on device, without server calls, given appropriate fine-tuning and tool interfaces.

Key Takeaways

- FunctionGemma is a 270M parameter, text-only version of Gemma 3 that is trained specifically for function calling, not open-ended chat, and is released as an open model under the Gemma terms of use.

- The model keeps the Gemma 3 transformer architecture and 256K token vocabulary, supports 32K tokens per request shared between input and output, and is trained on 6T tokens.

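- FunctionGemma uses a strict chat template that wraps each turn with its role (developer, user, or model) and relies on a fixed set of control token pairs to separate natural language from function schemas and execution results.

- On the Mobile Actions benchmark, accuracy increases from 58 percent for the base model to 85 percent after task-specific fine-tuning, showing that small function callers depend on domain data more than on prompt engineering.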

- The 270M scale and quantization support let FunctionGemma run on phones, laptops, and Jetson-class devices, and the model is already integrated into ecosystems like Hugging Face, Vertex AI, and LM Studio, as well as edge demos like Mobile Actions, Tiny Garden, and Physics Playground.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. Asif's most recent endeavor is the launch of Marktechpost, an Artificial Intelligence media platform known for in-depth coverage of Machine Learning and Deep Learning news that is technically robust and easily understood by a wide audience. The platform has over 2 million monthly views.