Author(s): mental image

Originally published on Towards AI.

I did not switch models, fine-tune, or add new data. I simply stopped trusting the AI.

I didn't change the model. I didn't fine-tune. I didn't add a single row of new training data. I simply stopped trusting the AI.

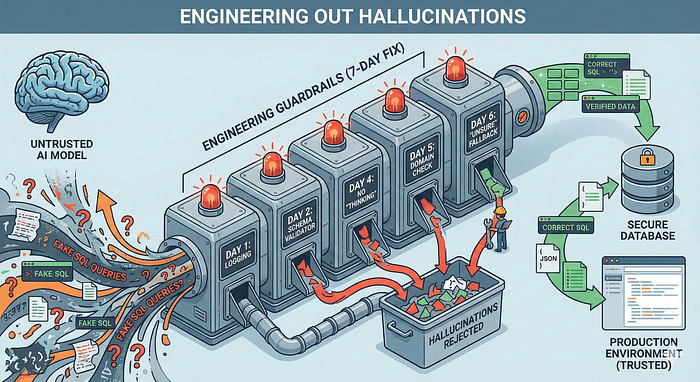

This article describes the author's experience reducing AI hallucinations in production systems without changing the model. The author implemented a series of engineering controls, such as logging everything, validating outputs, and allowing the model to express uncertainty, which together significantly reduced hallucinations and errors. These practical steps underscore the importance of enforcing reality checks and staying critical of model outputs rather than trusting the AI blindly.
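To make those three controls concrete, here is a minimal sketch of how they might fit together around a single model call. It is an illustration under stated assumptions, not the author's actual implementation: `call_model`, `guarded_call`, `GuardedResult`, the `UNKNOWN` sentinel, and the JSON reply format are all hypothetical names chosen for this example.

```python
import json
import logging
from dataclasses import dataclass
from typing import Callable, Optional, Set

logger = logging.getLogger("llm_guard")

# Hypothetical sentinel: the prompt tells the model to reply with this
# instead of guessing when it is not sure.
UNKNOWN = "UNKNOWN"


@dataclass
class GuardedResult:
    ok: bool                # True only if the output passed every check
    answer: Optional[str]   # the validated answer, or None if rejected
    reason: str             # why the output was accepted or rejected


def guarded_call(call_model: Callable[[str], str], prompt: str,
                 allowed_answers: Set[str]) -> GuardedResult:
    """Wrap one model call with logging, validation, and an 'unsure' path.

    `call_model` is an assumed stand-in for whatever client sends a prompt
    to the model and returns its raw text reply.
    """
    full_prompt = (
        f"{prompt}\n"
        'Reply as JSON: {"answer": <string>}. '
        f'If you are not sure, reply {{"answer": "{UNKNOWN}"}}.'
    )
    raw = call_model(full_prompt)

    # Control 1: log everything, so every bad output can be traced later.
    logger.info("prompt=%r raw_output=%r", full_prompt, raw)

    # Control 2: validate the output instead of trusting it.
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        return GuardedResult(False, None, "output is not valid JSON")
    if not isinstance(parsed, dict) or not isinstance(parsed.get("answer"), str):
        return GuardedResult(False, None, "missing or non-string 'answer' field")
    answer = parsed["answer"]

    # Control 3: treat an explicit admission of uncertainty as a valid outcome.
    if answer == UNKNOWN:
        return GuardedResult(False, None, "model declined to answer")

    # Reality check: only accept answers that exist in the known set.
    if answer not in allowed_answers:
        return GuardedResult(False, None, "answer not in allowed set")
    return GuardedResult(True, answer, "validated")
```

A caller would route any result with `ok` set to `False` to a fallback, such as a retry or a human review queue, rather than passing the raw model text downstream. The allowed-answer set is only one possible reality check; a lookup against a database or a schema validator would play the same role.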


