OpenAI has been signaling this week that it sees a role for AI as a “health care aide,” and today the company is announcing a product right in line with that idea: ChatGPT Health.

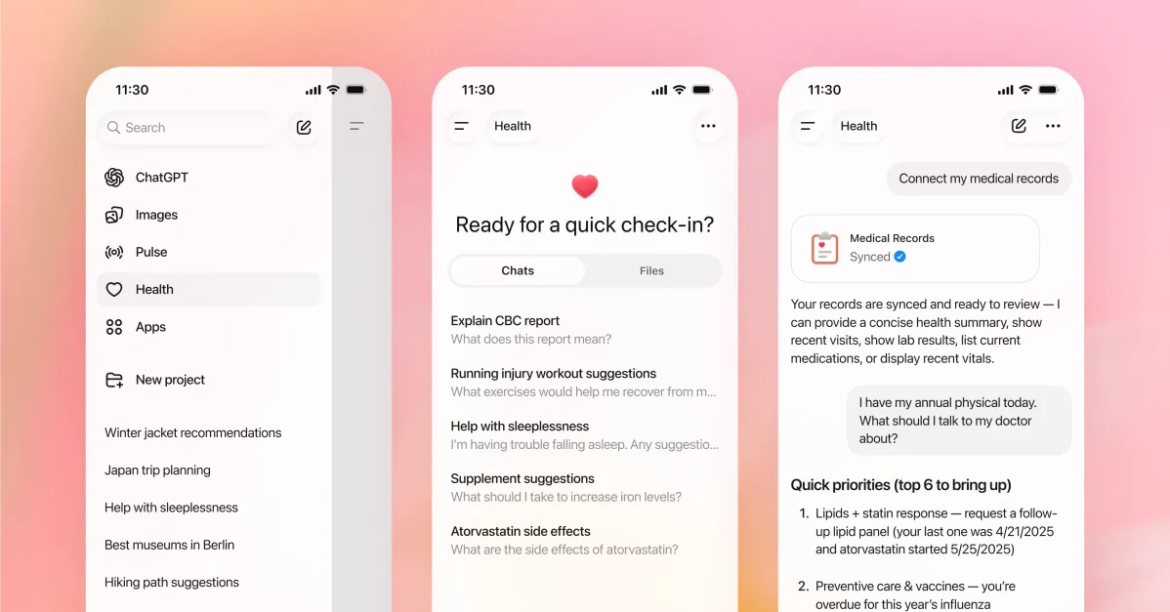

ChatGPT Health is a sandboxed tab within ChatGPT designed for users to ask their health questions, in what the company describes as a more secure and personalized environment than the rest of ChatGPT, with a separate chat history and memory feature. The company is encouraging users to connect their personal medical records and wellness apps like Apple Health, Peloton, MyFitnessPal, Weight Watchers, and Function to “get more personalized, grounded answers to their questions.” It suggests linking medical records so that ChatGPT can analyze lab results, view summaries, and view clinical history; MyFitnessPal and Weight Watchers for dietary guidance; Apple Health for health and fitness data, including “movement, sleep, and activity patterns”; and Function for insight into laboratory tests.

On the medical records front, OpenAI says it has partnered with b.well, which will provide back-end integration for users to upload their medical records, since b.well works with about 2.2 million providers. For now, ChatGPT Health requires users to join a waiting list to request access, as it is starting with a beta group of early users, but the product will gradually become available to all users regardless of subscription level.

The company makes sure to mention in the blog post that ChatGPT Health is “not intended for diagnosis or treatment,” but it can’t fully control how people use the AI when they leave the chat. By the company’s own admission, in underserved rural communities, users send an average of about 600,000 health-related messages weekly, and seven out of 10 health-related conversations in ChatGPT “take place outside of normal clinic hours.” In August, doctors published a report on a man who was hospitalized for several weeks with an 18th-century medical condition after taking ChatGPT’s alleged advice to replace salt in his diet with sodium bromide. Google’s AI Overviews were in the news for weeks after launch over dangerous advice like putting glue on pizza, and a recent investigation by The Guardian found that dangerous health advice continues to spread, with false advice on liver function tests, women’s cancer testing, and recommended diets for people with pancreatic cancer.

In a blog post, OpenAI wrote that based on its “anonymized analysis of conversations,” more than 230 million people around the world already ask ChatGPT questions related to health and wellness every week. OpenAI also said that over the past two years, it has worked with more than 260 practitioners to provide feedback on model outputs more than 600,000 times across 30 areas of focus to help shape the product’s responses.

“ChatGPT can help you understand recent test results, prepare for appointments with your doctor, get advice about your diet and workout routine, or understand the tradeoffs of different insurance options based on your health care patterns,” claims OpenAI in a blog post.

One part of health that OpenAI carefully avoided mentioning in its blog post: mental health. There have been multiple instances of adults or minors dying by suicide after confiding in ChatGPT, and in the blog post, OpenAI stuck to a vague mention that users can customize the instructions in the health product “to avoid mentioning sensitive topics.” When asked during a Wednesday briefing with reporters whether ChatGPT Health would also summarize mental health visits and provide advice in that area, Fidji Simo, OpenAI’s CEO of applications, said, “Mental health is definitely part of health in general, and we see a lot of people turning to ChatGPT for mental health conversations,” adding that the new product “can handle any part of your health, including mental health… We’re very focused on making sure that in crisis situations we respond accordingly and we direct toward health professionals,” as well as loved ones or other resources.

It is also possible that the product may worsen health anxiety conditions, such as hypochondria. Asked if OpenAI has introduced any safeguards to help prevent people with such conditions from spiraling when using ChatGPT Health, Simo said, “We have done a lot of work on tuning the model to make sure that we are informative without ever being alarmist and that we direct to the healthcare system if there is an action to be taken.”

According to the briefing, when it comes to security concerns, OpenAI says ChatGPT Health “operates as a separate space with enhanced privacy to protect sensitive data” and that the company introduced multiple layers of purpose-built encryption (but not end-to-end encryption). By default, conversations within the Health product are not used to train its base model, and if a user initiates a health-related conversation in regular ChatGPT, the chatbot will suggest moving it to the Health product for “additional security,” according to the blog post. But OpenAI has faced security breaches in the past, notably a March 2023 issue that allowed some users to see chat titles, opening messages, names, email addresses, and payment information from other users. In the event of a court order, OpenAI would still be required to provide access to the data “where necessary through legitimate legal processes or in an emergency situation,” Nate Gross, OpenAI’s head of health, said during the briefing.

When asked whether ChatGPT Health is compliant with the Health Insurance Portability and Accountability Act (HIPAA), Gross said that “in the case of consumer products, HIPAA does not apply in this setting – it applies to clinical or professional health care settings.”