Tencent Hunyuan’s 3D Digital Human team has released HY-Motion 1.0, an open-weight family of text-to-3D human motion generation models that scales Diffusion Transformer (DiT) based flow matching to 1B parameters in the motion domain. The models turn natural language prompts and an expected duration into 3D human motion clips on a unified SMPL-H skeleton and are available on GitHub and Hugging Face with code, checkpoints, and a Gradio interface for local use.

What does HY-Motion 1.0 offer developers?

HY-Motion 1.0 is a series of text-to-3D human motion generation models built on a Diffusion Transformer (DiT) trained with a flow matching objective. The series has 2 variants: HY-Motion-1.0, the standard model with 1.0B parameters, and HY-Motion-1.0-Lite, a lightweight option with 0.46B parameters.

Both models generate skeleton-based 3D character animations from simple text prompts. The output is a motion sequence on an SMPL-H skeleton that can be integrated into 3D animation or game pipelines, for example for digital humans, cinematics and interactive characters. The release includes inference scripts, a batch-oriented CLI, and a Gradio web app, and supports macOS, Windows, and Linux.

Data engine and taxonomy

The training data comes from 3 sources: in-the-wild human motion videos, motion capture data, and 3D animation assets from game production. The research team starts with 12M high-quality video clips from HunyuanVideo, runs shot boundary detection to segment scenes and a human detector to keep clips that contain people, then applies the GVHMR algorithm to reconstruct SMPL-X motion tracks. Motion capture sessions and 3D animation libraries contribute approximately 500 hours of additional motion sequences.

All data is retargeted onto the unified SMPL-H skeleton through mesh fitting and retargeting tools. A multi-stage filter removes duplicate clips, abnormal poses, joint velocity outliers, abnormal displacements, long static segments, and artifacts such as foot sliding. The motions are then canonicalized, resampled to 30 fps, and split into clips shorter than 12 seconds in a fixed world frame, with the Y axis up and the character facing the positive Z axis. The final corpus contains over 3,000 hours of motion, of which 400 hours are high-quality 3D motion with verified captions.
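
The resampling and clip-splitting steps can be illustrated with a small sketch. It is not the team's pipeline: the function names, the linear interpolation (reasonable for positions, while rotations would normally need spherical interpolation), and the non-overlapping split are all assumptions made for illustration.

```python
import math

def resample_linear(values, src_fps, dst_fps=30.0):
    """Linearly resample one per-frame scalar channel from src_fps to dst_fps.

    Hypothetical helper: the article only states that motions are resampled to 30 fps.
    """
    if len(values) < 2:
        return list(values)
    # Number of output frames covering the same time span as the input.
    n_out = int(math.floor((len(values) - 1) * dst_fps / src_fps + 1e-9)) + 1
    out = []
    for i in range(n_out):
        t = i * src_fps / dst_fps              # position in source-frame units
        lo = min(int(math.floor(t)), len(values) - 2)
        frac = t - lo
        out.append(values[lo] * (1.0 - frac) + values[lo + 1] * frac)
    return out

def split_into_clips(n_frames, fps=30, max_seconds=12.0):
    """Return (start, end) frame ranges, each strictly shorter than max_seconds.

    Assumes non-overlapping windows; the source does not say how clips are cut.
    """
    max_len = math.ceil(max_seconds * fps) - 1  # 359 frames at 30 fps
    return [(s, min(s + max_len, n_frames)) for s in range(0, n_frames, max_len)]

# A 60 fps channel downsampled to 30 fps, and a 1,000-frame sequence cut into clips.
print(resample_linear([0, 1, 2, 3, 4], src_fps=60))
print(split_into_clips(1000))
```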

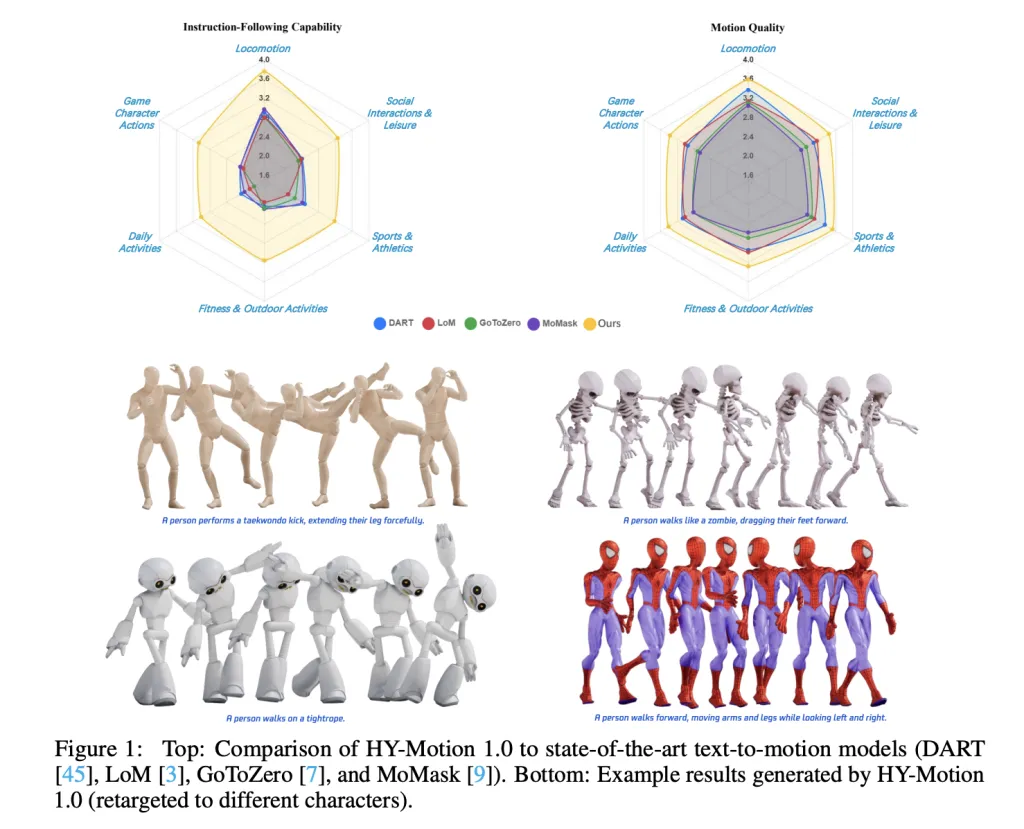

On top of this, the research team defines a 3-level taxonomy. The top level has 6 classes: Locomotion, Sports and Athletics, Fitness and Outdoor Activities, Daily Activities, Social Interactions and Leisure, and Game Character Actions. These expand into more than 200 fine-grained motion categories at the leaves, covering both simple atomic actions and concurrent or sequential motion combinations.

Motion representation and HY-Motion DiT

HY-Motion 1.0 uses the SMPL-H skeleton with 22 body joints and no hand joints. Each frame is a 201-dimensional vector that concatenates the global root translation in 3D space (3 values), the global body orientation in a continuous 6D rotation representation (6 values), 21 local joint rotations in 6D form (126 values), and 22 local joint positions in 3D coordinates (66 values). Velocity and foot contact labels were removed because they slowed training and did not improve final quality. This representation is compatible with animation workflows and is close to the representation used by the DART model.
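
The per-frame layout can be checked with a short sketch. The component sizes come from the description above; the concatenation order, the helper names, and the choice of taking the first two columns of the rotation matrix for the 6D form (one common convention) are assumptions for illustration, not the released code.

```python
# Component sizes from the article; the ordering below is an assumption.
LAYOUT = {
    "root_translation": 3,        # global root position (x, y, z)
    "root_orientation_6d": 6,     # global body orientation, continuous 6D form
    "joint_rotations_6d": 21 * 6, # 21 local joint rotations in 6D form
    "joint_positions": 22 * 3,    # 22 local joint positions in 3D
}
FRAME_DIM = sum(LAYOUT.values())

def matrix_to_6d(rot):
    """Flatten the first two columns of a 3x3 rotation matrix into 6 values."""
    return [rot[r][c] for c in range(2) for r in range(3)]

def pack_frame(root_translation, root_orientation_6d, joint_rotations_6d, joint_positions):
    """Concatenate the four components into one flat per-frame feature vector."""
    frame = list(root_translation) + list(root_orientation_6d)
    for rot6d in joint_rotations_6d:   # 21 entries of 6 values each
        frame.extend(rot6d)
    for pos in joint_positions:        # 22 entries of 3 values each
        frame.extend(pos)
    if len(frame) != FRAME_DIM:
        raise ValueError(f"expected {FRAME_DIM} values, got {len(frame)}")
    return frame

identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rest_pose = pack_frame(
    [0.0, 0.0, 0.0],
    matrix_to_6d(identity),
    [matrix_to_6d(identity)] * 21,
    [[0.0, 0.0, 0.0]] * 22,
)
print(FRAME_DIM, len(rest_pose))
```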

The core network is a hybrid HY-Motion DiT. It first applies dual-stream blocks that process motion latents and text tokens separately. In these blocks, each modality has its own QKV projections and MLPs, and a joint attention module lets motion tokens query semantic features from text tokens while preserving modality-specific structure. The network then switches to single-stream blocks that concatenate motion and text tokens into one sequence and process them with parallel spatial and channel attention modules for deeper multimodal fusion.

For text conditioning, the system uses a dual-encoder scheme. Qwen3 8B provides token-level embeddings, while a CLIP-L model provides global text features. A bidirectional token refiner corrects the causal attention bias of the LLM for non-autoregressive generation. These signals feed the DiT through adaptive layer normalization conditioning. Attention is asymmetric: motion tokens can attend to all text tokens, but text tokens do not attend back to motion, which prevents noisy motion states from corrupting the language representation. Temporal attention inside the motion branch uses a narrow sliding window of 121 frames, which focuses capacity on local kinematics while keeping cost manageable for long clips. Full rotary position embedding is applied after concatenating text and motion tokens to encode relative positions across the whole sequence.
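
The asymmetric and windowed attention pattern can be written as a boolean mask. This is a minimal sketch under stated assumptions: the sequence is laid out as text tokens followed by motion tokens, text tokens attend to each other freely, and the 121-frame window is centered (60 frames on each side). None of these details are confirmed by the source, and `build_attention_mask` is a hypothetical name.

```python
def build_attention_mask(num_text, num_motion, window=121):
    """Return mask[q][k] == True when query token q may attend to key token k.

    Assumed layout: indices [0, num_text) are text, the rest are motion frames.
    """
    half = window // 2
    total = num_text + num_motion
    mask = [[False] * total for _ in range(total)]
    for q in range(total):
        for k in range(total):
            q_is_text = q < num_text
            k_is_text = k < num_text
            if k_is_text:
                # Text keys are visible to every query (text and motion).
                mask[q][k] = True
            elif not q_is_text:
                # Motion-to-motion attention is limited to the sliding window.
                mask[q][k] = abs(q - k) <= half
            # Text queries never see motion keys, so those entries stay False.
    return mask

mask = build_attention_mask(num_text=4, num_motion=200)
middle = 4 + 100  # a motion frame far from both clip boundaries
print(sum(mask[middle]))  # 4 text keys plus 121 motion keys
```

A mask like this would typically be passed to an attention implementation that accepts a boolean mask; how the released model realizes the pattern is not described in the article.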

Flow matching, prompt rewriting and training

HY-Motion 1.0 uses flow matching instead of standard denoising diffusion. The model learns a velocity field along a continuous path that interpolates between Gaussian noise and real motion data. During training, the objective is the mean squared error between the predicted and ground-truth velocities along this path. During inference, the learned ordinary differential equation is integrated from noise to a clean trajectory. This gives stable training for long sequences and fits the DiT architecture.
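
The training objective and the sampling loop can be sketched in a few lines. This toy version assumes a straight-line path between noise and data, so the target velocity is simply data minus noise, and it uses fixed-step Euler integration; the article does not state which path or ODE solver HY-Motion 1.0 uses, and all function names here are hypothetical.

```python
import random

def interpolate(noise, data, t):
    """Point on the assumed linear path: noise at t=0, data at t=1."""
    return [(1.0 - t) * n + t * d for n, d in zip(noise, data)]

def flow_matching_loss(predict_velocity, data, rng):
    """Mean squared error between predicted and target velocity at a random t."""
    noise = [rng.gauss(0.0, 1.0) for _ in data]
    t = rng.random()
    x_t = interpolate(noise, data, t)
    target = [d - n for n, d in zip(noise, data)]  # constant along a straight path
    pred = predict_velocity(x_t, t)
    return sum((p - v) ** 2 for p, v in zip(pred, target)) / len(data)

def euler_sample(predict_velocity, noise, steps=50):
    """Integrate dx/dt = v(x, t) from t=0 (noise) to t=1 with Euler steps."""
    x = list(noise)
    dt = 1.0 / steps
    for i in range(steps):
        v = predict_velocity(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# Toy check with an oracle velocity field that always points at one target.
target_motion = [0.5, -1.0, 2.0]

def oracle(x, t):
    return [(d - xi) / (1.0 - t) for xi, d in zip(x, target_motion)]

rng = random.Random(0)
loss = flow_matching_loss(oracle, target_motion, rng)
sample = euler_sample(oracle, [rng.gauss(0.0, 1.0) for _ in target_motion])
print(loss, sample)
```

In the real model, `predict_velocity` would be the DiT conditioned on text and duration, and the vectors would be full motion sequences rather than three scalars.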

A separate duration prediction and prompt rewrite module improves instruction following. It uses Qwen3 30B A3B as the base model and is trained on synthetic user-style prompts generated from motion captions with a VLM and LLM pipeline, for example Gemini 2.5 Pro. This module predicts a suitable motion duration and rewrites informal prompts into normalized text that is easier for the DiT to follow. It is first trained with supervised fine-tuning and then refined with Group Relative Policy Optimization (GRPO), using Qwen3 235B A22B as a reward model that scores semantic consistency and duration plausibility.

Training follows a 3-stage curriculum. Stage 1 performs large-scale pretraining on the full 3,000-hour dataset to learn a broad motion prior and basic text-motion alignment. Stage 2 fine-tunes on the 400-hour high-quality set with a lower learning rate to sharpen motion detail and improve semantic accuracy. Stage 3 applies reinforcement learning: first Direct Preference Optimization using 9,228 curated human preference pairs selected from approximately 40,000 generated pairs, then Flow-GRPO with a composite reward. This reward combines a semantic score from a text-motion retrieval model with a physics score that penalizes artifacts such as foot sliding and root drift, under a KL regularization term that keeps the policy close to the supervised model.
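
The reward side of the final stage can be illustrated with a small sketch. The weights, the form of the physics penalty, and the function names are assumptions for illustration. Only the general idea comes from the source: a semantic score and a physics score are combined, and GRPO compares samples within a group generated for the same prompt. The KL regularization term is not shown.

```python
import statistics

def composite_reward(semantic_score, foot_slide, root_drift, w_sem=1.0, w_phys=1.0):
    """Combine a semantic score with a physics score (hypothetical weighting).

    foot_slide and root_drift are non-negative artifact measures, so the
    physics score is their negated sum: fewer artifacts means higher reward.
    """
    physics_score = -(foot_slide + root_drift)
    return w_sem * semantic_score + w_phys * physics_score

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one prompt's group of samples, as in GRPO."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

# Three hypothetical motions sampled for the same prompt.
rewards = [
    composite_reward(semantic_score=0.9, foot_slide=0.30, root_drift=0.10),
    composite_reward(semantic_score=0.8, foot_slide=0.05, root_drift=0.05),
    composite_reward(semantic_score=0.4, foot_slide=0.05, root_drift=0.05),
]
advantages = group_relative_advantages(rewards)
print(advantages)
```

In this toy group the second sample receives the largest advantage: its semantic score is slightly lower than the first, but it has far fewer physical artifacts.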

Benchmarks, scaling behavior and limitations

For evaluation, the team builds a test set of over 2,000 prompts that cover the 6 taxonomy categories and include simple, concurrent, and sequential actions. Human raters score instruction following and motion quality on a scale from 1 to 5. HY-Motion 1.0 reaches an average instruction following score of 3.24 and an SSAE score of 78.6 percent. Baseline text-to-motion systems such as DART, LoM, GoToZero, and MoMask score between 2.17 and 2.31, with SSAE between 42.7 percent and 58.0 percent. For motion quality, HY-Motion 1.0 reaches an average of 3.43, while the best baseline reaches 3.11.

Scaling experiments compare DiT models at 0.05B, 0.46B, 0.46B trained only on 400 hours, and 1B parameters. Instruction following improves steadily with model size, reaching an average of 3.34 for the 1B model. Motion quality saturates around the 0.46B scale, where the 0.46B and 1B models reach similar averages between 3.26 and 3.34. Comparing the 0.46B model trained on 3,000 hours with the 0.46B model trained on only 400 hours shows that larger data volume matters most for instruction alignment, while high-quality curation mainly improves realism.

Key takeaways

- Billion-scale DiT flow matching for motion: HY-Motion 1.0 is the first Diffusion Transformer based flow matching model scaled to the 1B parameter level specifically for text-to-3D human motion, targeting high-fidelity instruction following across diverse actions.

- Large-scale, curated motion corpus: The model is pretrained on over 3,000 hours of reconstructed, mocap, and animation motion data and fine-tuned on a 400-hour high-quality subset, all retargeted onto a unified SMPL-H skeleton and organized into over 200 motion categories.

- Hybrid DiT architecture with strong text conditioning: HY-Motion 1.0 uses a hybrid dual-stream and single-stream DiT with asymmetric attention, narrow-band temporal attention, and dual text encoders, Qwen3 8B and CLIP-L, to combine token-level and global semantics into motion trajectories.

- RL-aligned prompt rewriting and training pipeline: A dedicated Qwen3 30B based module predicts motion duration and rewrites user prompts, and the DiT is further aligned with Direct Preference Optimization and Flow-GRPO using semantic and physics rewards, improving realism and instruction following beyond supervised training.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of Marktechpost, an Artificial Intelligence media platform known for in-depth coverage of machine learning and deep learning news that is technically sound and easily understood by a wide audience. The platform has over 2 million monthly views.