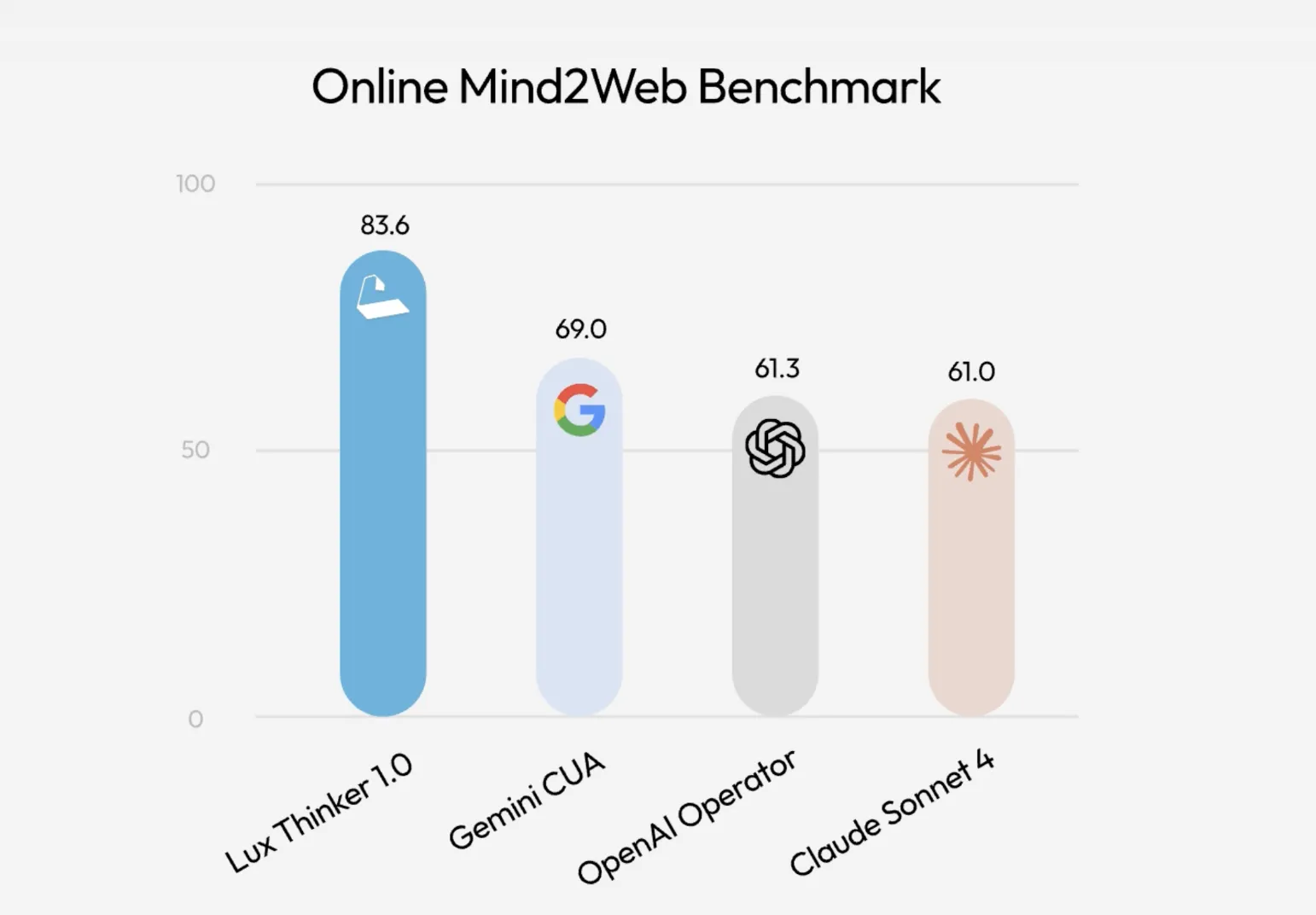

How can you turn slow, manual click work in browsers and desktop apps into a reliable, automated system that can actually do the bulk of the computer use for you? Lux is the latest example of computer use agents moving from research demo to infrastructure. The OpenAGI Foundation team has released Lux, a foundation model that operates real desktops and browsers and reports a score of 83.6 on the Online-Mind2Web benchmark, which covers more than 300 real-world computer use tasks. That puts it ahead of Google Gemini CUA at 69.0, OpenAI Operator at 61.3, and Anthropic Claude Sonnet 4 at 61.0.

What does Lux actually do?

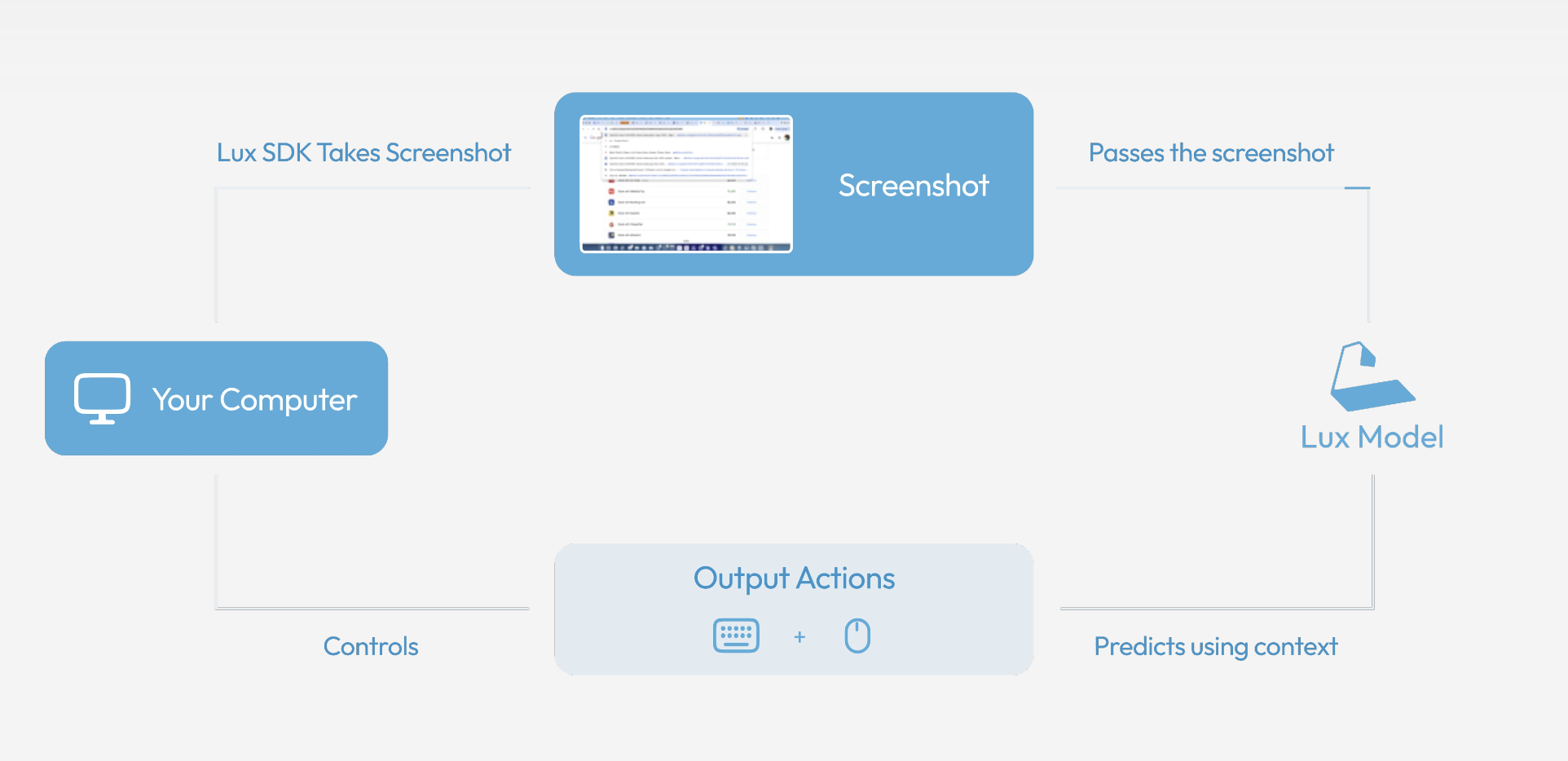

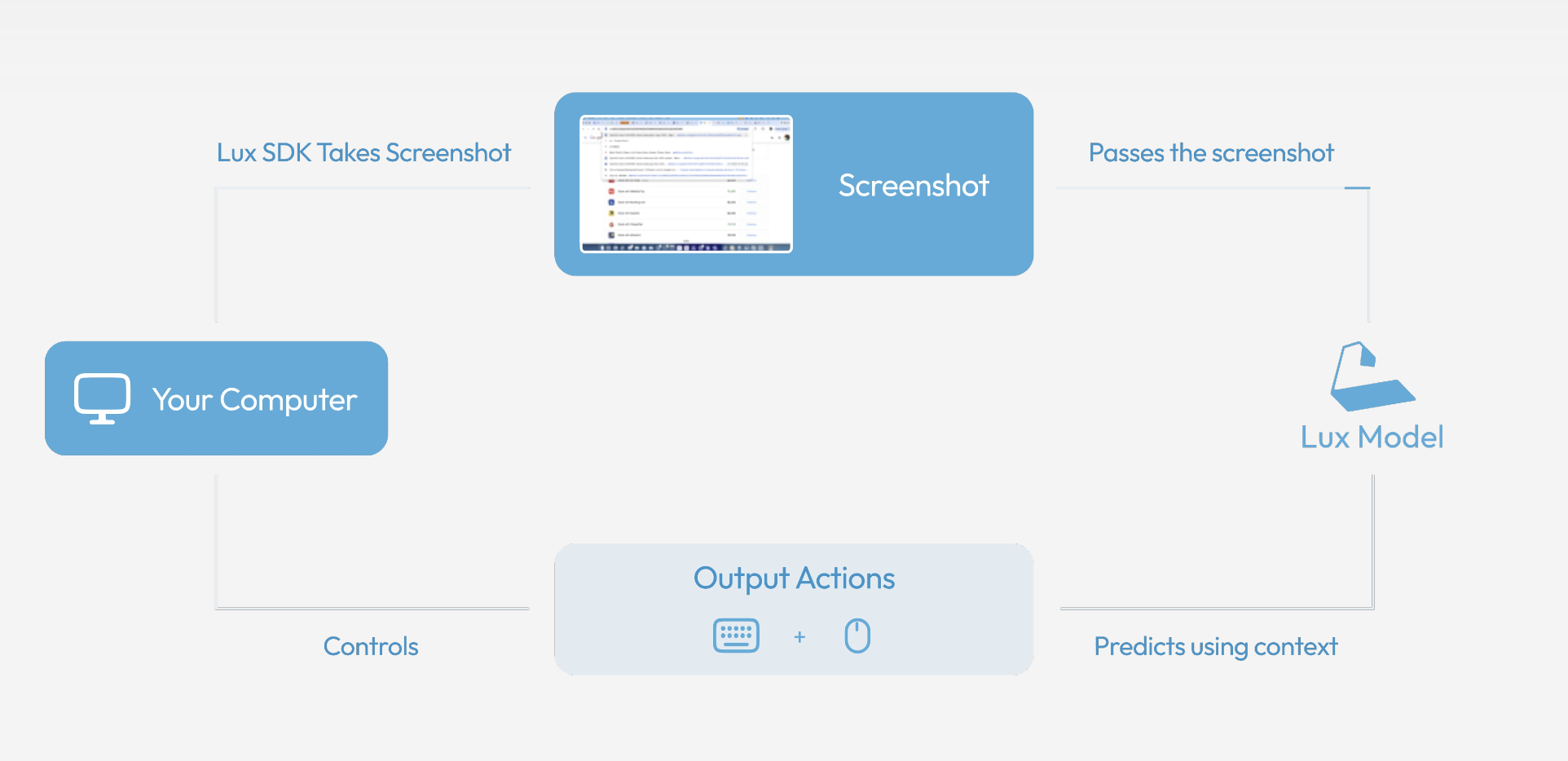

Lux is a computer use model, not a chat model with a browser plugin. It takes a natural language goal, looks at the screen, and outputs low-level actions such as clicks, key presses, and scroll events. It can drive browsers, editors, spreadsheets, email clients, and other desktop applications because it works on the rendered UI rather than on application-specific APIs.
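The observe-then-act cycle described above can be sketched as a small loop. This is only an illustration of the general pattern, not the OpenAGI SDK: the `Action` type, `run_agent`, and the three callables (`capture_screen`, `policy`, `execute`) are hypothetical names introduced here, and the `"done"` action is an assumed stop signal.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    """Hypothetical low-level UI action; not a type from the OpenAGI SDK."""
    kind: str          # "click", "key", "scroll", or "done" (assumed stop signal)
    x: int = 0
    y: int = 0
    text: str = ""


def run_agent(
    goal: str,
    capture_screen: Callable[[], bytes],
    policy: Callable[[str, bytes, List[Action]], Action],
    execute: Callable[[Action], None],
    max_steps: int = 50,
) -> List[Action]:
    """Repeat: look at the screen, ask the model for one action, perform it."""
    history: List[Action] = []
    for _ in range(max_steps):
        screenshot = capture_screen()                # rendered UI, not an app API
        action = policy(goal, screenshot, history)   # the model picks the next step
        if action.kind == "done":
            break
        execute(action)                              # send the click / key / scroll
        history.append(action)
    return history
```

The `max_steps` cap is a design choice for the sketch: a loop that drives a real desktop should always have a bound, so a confused policy cannot click indefinitely.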

From a developer’s perspective, Lux is available through the OpenAGI SDK and API console. The research team describes the target workloads as software QA flows, deep research runs, social media management, online store operations, and bulk data entry. All of these settings require the agent to sequence dozens or hundreds of UI actions while staying aligned with a natural language task description.

Three execution modes for different control levels

Lux ships with three execution modes that expose different trade-offs between speed, autonomy, and control.

Actor mode is the fast path. It runs at about 1 second per step and is aimed at clearly specified tasks such as filling out a form, pulling a report from a dashboard, or extracting a small set of fields from a page. Think of it as a low-latency macro engine that still understands natural language.

Thinker mode handles vague or multi-step goals. It decomposes high-level instructions into smaller subtasks and then executes them. Example workloads include multi-page research, triage of long email queues, or navigation of analytics interfaces where the exact click path is not specified in advance.

Tasker mode gives maximum determinism. The caller provides an explicit Python list of steps, which Lux executes one by one, retrying until the sequence completes or a hard failure occurs. This allows teams to keep task graphs, guardrails, and failure policies in their own code while delegating UI control to the model.

Tasker, Actor, and Thinker are the three primary modes for procedural workflow, fast execution, and complex goal solving.
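To make the Tasker pattern concrete, here is a minimal sketch of "run an explicit list of steps, retry each one, stop on a hard failure". It does not use the OpenAGI SDK: `run_steps`, `HardFailure`, the `execute_step` callable, and the `max_retries` budget are all assumptions introduced for illustration, and the article does not say how many retries Lux performs.

```python
from typing import Callable, List


class HardFailure(Exception):
    """Raised when a step still fails after its retry budget (hypothetical)."""


def run_steps(
    steps: List[str],
    execute_step: Callable[[str], bool],
    max_retries: int = 2,
) -> List[str]:
    """Execute steps in order; retry a failing step, then give up loudly."""
    completed: List[str] = []
    for step in steps:
        for _attempt in range(max_retries + 1):
            if execute_step(step):       # True means the UI step succeeded
                completed.append(step)
                break
        else:
            # No attempt succeeded: surface the failure to the caller's policy.
            raise HardFailure(f"step failed after {max_retries + 1} attempts: {step!r}")
    return completed


# The caller owns the plan as plain Python data (example steps are made up).
plan = [
    "Open the orders dashboard",
    "Filter by status 'unshipped'",
    "Export the table as CSV",
]
```

Because the plan is ordinary data and failures arrive as exceptions, the guardrails and failure policies stay in the caller's code, which is the division of labor the Tasker description points at.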

Benchmarks, Latency and Cost

On Online-Mind2Web, Lux reaches a success rate of 83.6 percent. The same benchmark reports 69.0 percent for Gemini CUA, 61.3 percent for OpenAI Operator, and 61.0 percent for Claude Sonnet 4. The benchmark includes more than 300 web-based tasks collected from real services, so it is a useful proxy for practical agents that drive browsers and web apps.

Latency and cost are where the numbers become important for engineering teams. The OpenAGI team reports that Lux completes each step in about 1 second, while OpenAI Operator takes about 3 seconds per step in the same evaluation setting. The research team also says that Lux is approximately 10 times cheaper per token than Operator. For any agent that can easily run hundreds of steps in a session, these constant factors determine whether the workload is viable in production.
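A quick back-of-the-envelope calculation shows why the per-step figures matter. The 300-step session length below is a hypothetical workload chosen for illustration, and the cost ratio assumes both agents consume the same number of tokens, which the article does not claim.

```python
def session_minutes(steps: int, seconds_per_step: float) -> float:
    """Wall-clock time for one session, ignoring page loads and retries."""
    return steps * seconds_per_step / 60


STEPS = 300  # hypothetical session length, not a reported figure

lux_minutes = session_minutes(STEPS, 1.0)       # reported: about 1 s per step
operator_minutes = session_minutes(STEPS, 3.0)  # reported: about 3 s per step

# Reported as roughly 10x cheaper per token; equal token usage is assumed here.
relative_token_cost = 1 / 10

print(lux_minutes, operator_minutes)  # 5.0 15.0
```

Under these assumptions a session that takes 15 minutes at 3 seconds per step finishes in 5 minutes at 1 second per step, and the gap grows linearly with the number of steps.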

Why Agentic Active Pre-Training and OSGym Matter

Lux is trained with a method that the OpenAGI research team calls Agentic Active Pre-training. The team contrasts this with standard language model pre-training, which passively ingests text from the internet. The idea is that Lux learns by acting in digital environments and refining its behavior through large-scale interaction, rather than only minimizing token prediction loss on static logs. The optimization objective also differs from classical reinforcement learning: it is set up to favor self-driven exploration and understanding rather than a manually shaped reward.

This training setup relies on a data engine that can expose many operating system environments in parallel. The OpenAGI team has already open sourced that engine, OSGym, under the MIT license, which allows both research and commercial use. OSGym runs full operating system replicas, not just browser sandboxes, and supports tasks spanning office software, browsers, development tools, and multi-application workflows.

Key Takeaways

- Lux is a foundation computer use model that operates full desktops and browsers and reaches 83.6 percent success on the Online-Mind2Web benchmark, ahead of Gemini CUA, OpenAI Operator, and Claude Sonnet 4.

- Lux exposes 3 modes, Actor, Thinker, and Tasker, which cover low-latency UI macros, multi-step goal decomposition, and deterministic scripted execution for production workflows.

- Lux reportedly runs at about 1 second per step and is about 10x cheaper per token than OpenAI Operator, which matters for long-horizon agents that run hundreds of actions per task.

- Lux is trained with Agentic Active Pre-training, where the model learns by acting in environments rather than only consuming static web text, targeting strong screen-to-action behavior rather than pure language modeling.

- OSGym, the open source data engine behind Lux, can run over 1,000 OS replicas and generate over 1,400 multi-turn trajectories per minute at a low per-replica cost, giving teams a practical way to train and evaluate their own computer use agents.


Michael Sutter is a data science professional and holds a Master of Science in Data Science from the University of Padova. With a solid foundation in statistical analysis, machine learning, and data engineering, Michael excels in transforming complex datasets into actionable insights.