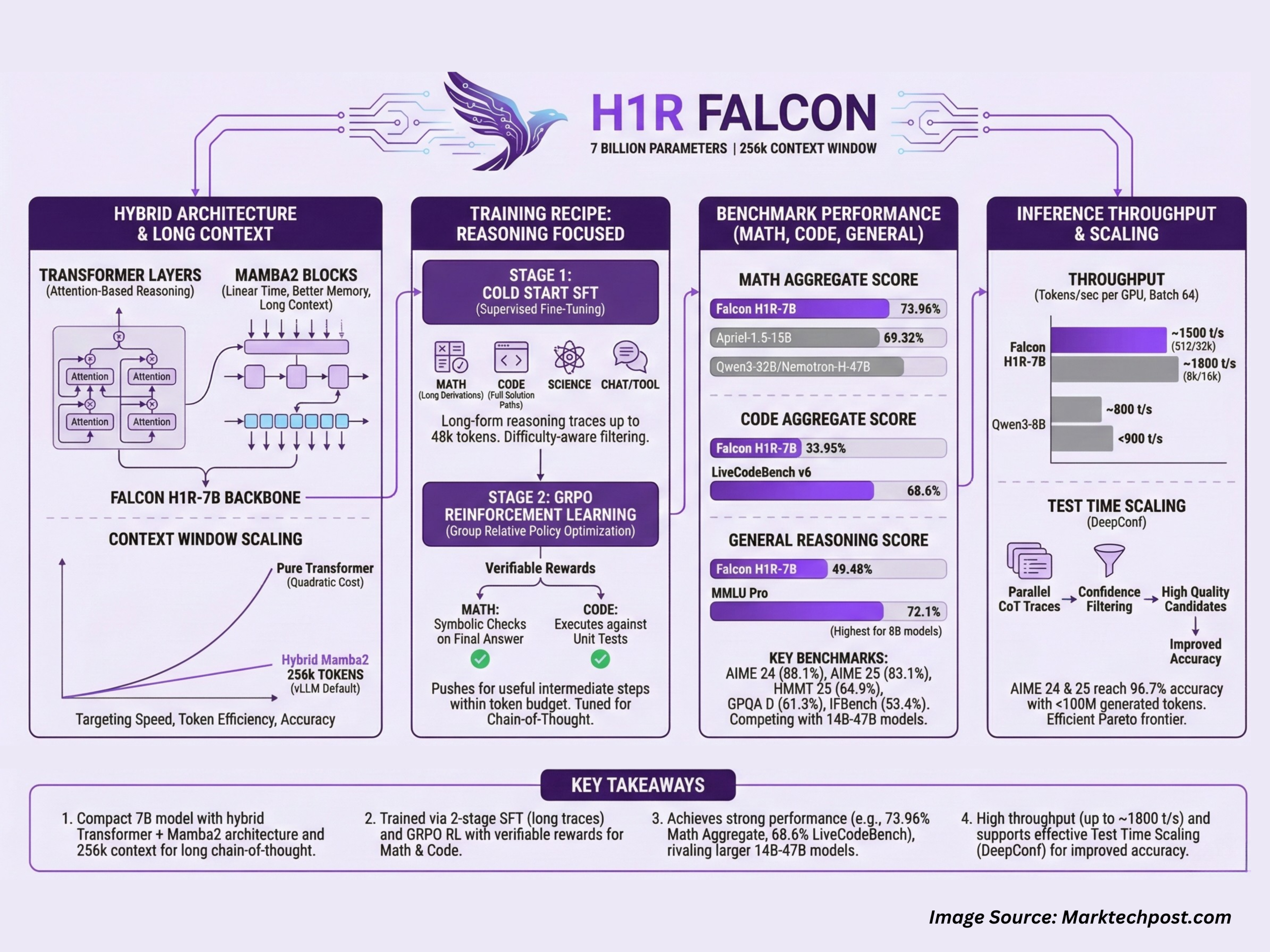

Technology Innovation Institute (TII), Abu Dhabi, has released the Falcon-H1R-7B, a 7B parameter reasoning specialized model that matches or exceeds many 14B to 47B reasoning models in math, code and general benchmarks while remaining compact and efficient. It is built on the Falcon H1 7B base and is available in face hugging form under the Falcon-H1R collection.

Falcon-H1R-7B is interesting because it combines 3 design options in 1 system, a hybrid transformer with a Mamba2 backbone, a very long context that reaches 256k tokens in a standard vLLM deployment, and a training recipe that blends supervised long form reasoning with reinforcement learning using GRPO.

Hybrid Transformer Plus Mamba2 Architecture with Long Reference

Falcon-H1R-7B is a causal decoder model with a hybrid architecture that combines Transformer layers and Mamba2 state space components. Transformer blocks provide standard attention based logic, while Mamba2 blocks provide linear time sequence modeling and better memory scaling as the context length increases. This design logic targets the 3 axes of efficiency that the team describes, speed, token efficiency, and accuracy.

Model runs with default --max-model-len Of 262144 When served via VLLM, that matches the practical 256k token reference window. This allows very long series of idea prompts, multi step tool usage logs and large multi document prompts in a single pass. The hybrid backbone helps control memory usage at these sequence lengths and improves throughput compared to a pure Transformer 7B baseline on the same hardware.

training recipe for logic tasks

The Falcon H1R 7B uses a 2 stage training pipeline:

In first stageThe team runs cold start supervised fine tuning on top of the Falcon-H1-7B base. SFT (Supervised Fine Tuning) data traces long form logic step-by-step 3 main domainsMath, coding and science, as well as non-logical domains like chat, tool calling and security. Conscious filtering magnifies hard problems and reduces small problems. Targets can reach up to 48k tokens, so the model sees longer derivations and full solution paths during training.

In second phaseSFT Checkpoint has been refined with GRPO, a group relative policy optimization method for reinforcement learning. Rewards are given when the generated logic chain is verifiably correct. For math problems, the system uses symbolic checking on the final answer. For code, it executes the generated programs against unit tests. This RL step prompts the model to take useful intermediate steps while remaining within a token budget.

The result is a 7B model that is tuned specifically for chain of thought reasoning rather than general chat.

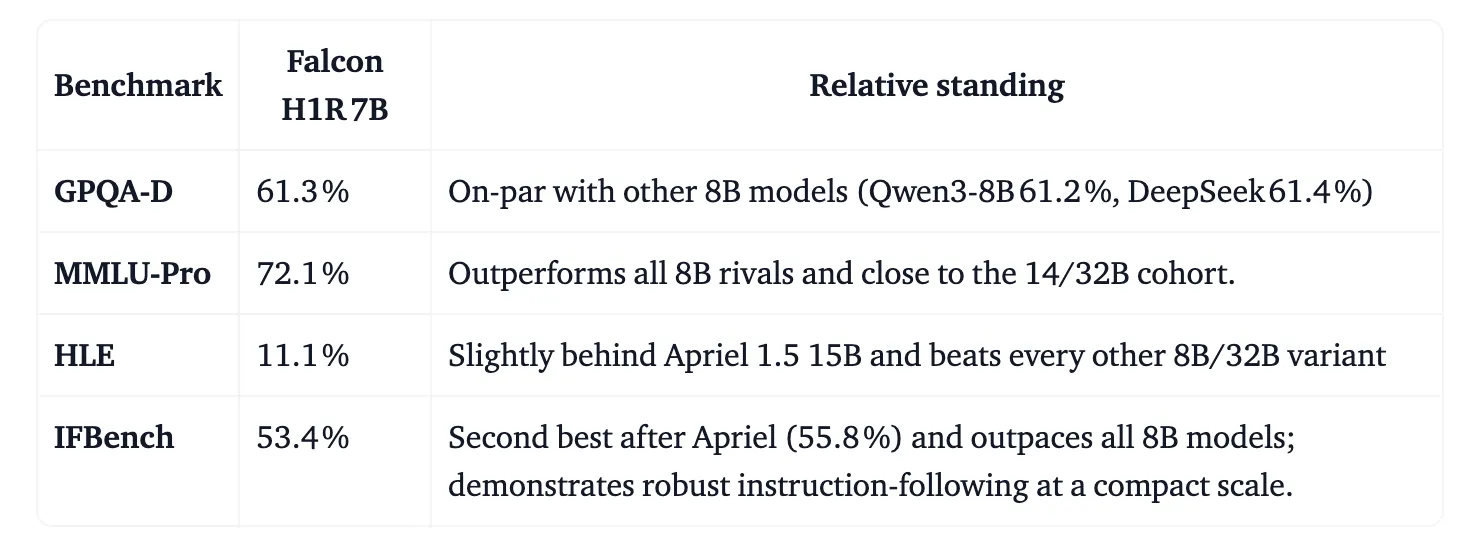

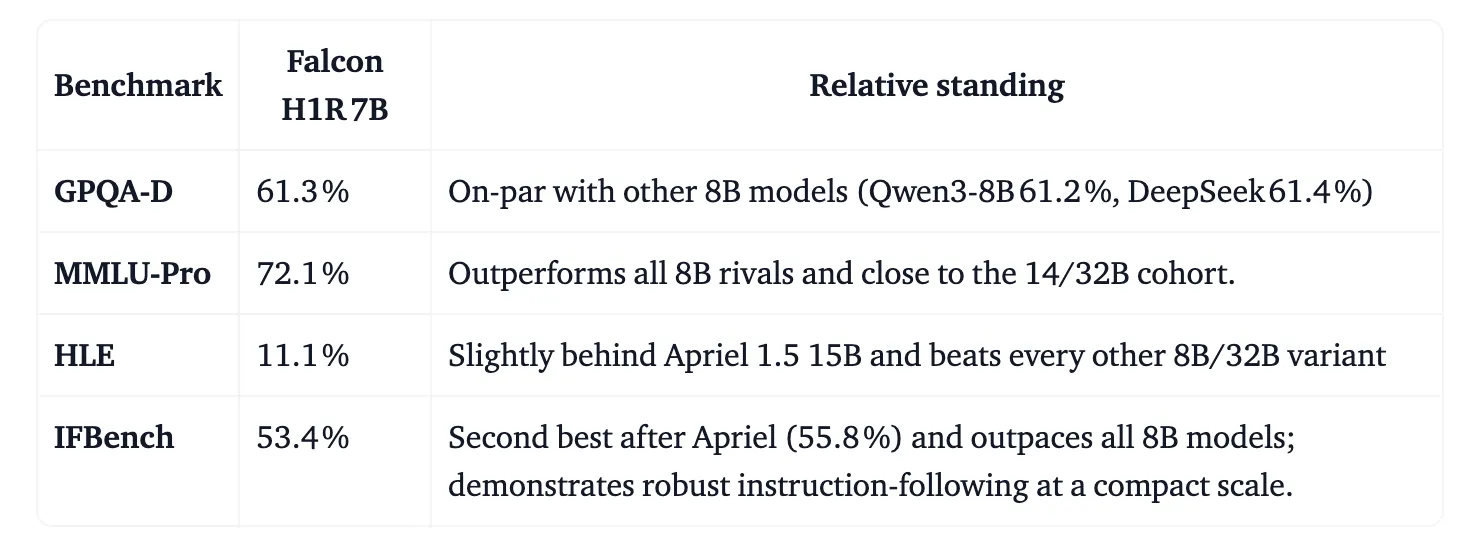

Benchmarks in math, coding and general reasoning

Falcon-H1R-7B benchmark scores are grouped into math, code and agentic tasks, and general reasoning tasks.

In the mathematics group, the Falcon-H1R-7B reaches a total score of 73.96%, which is ahead of the Aprilia-1.5-15B at 69.32% and larger models such as the Quen3-32B and Nemotron-H-47B. On personal benchmarks:

- AIME 24, 88.1%, 86.2% higher than Apr-1.5-15B

- AIME 25, 83.1%, APR-1.5-15B more than 80%

- HMMT 25, 64.9%, above all listed baselines

- AMO Bench, 36.3%, compared to 23.3% for DeepSeq-R1-0528 Qwen3-8b.

For code and agentic workloads, the model reaches 33.95% as an ensemble score. On LiveCodeBench v6, Falcon-H1R-7B scores 68.6%, which is higher than Qwen3-32B and other baselines. It scores 28.3% on the SciCode subproblem benchmark and 4.9% on Terminal Bench Hard, where it is second only to Apriel 1.5-15B, but ahead of many 8B and 32B systems.

On general logic, Falcon-H1R-7B gets 49.48% as group score. It records 61.3% on GPQA D, close to other 8B models, 72.1% on MMLU Pro, which is higher than all other 8B models in the above table, 11.1% on HLE and 53.4% on IFBench, where it is second only to April 1.5 15B.

The key point is that if the architecture and training pipeline are tuned for reasoning tasks, a 7B model can sit in the same performance band as many 14B to 47B reasoning models.

Estimate throughput and test time scaling

The team also benchmarked the Falcon-H1R-7B on throughput and test time scaling under realistic batch settings.

For 512 token inputs and 32k token outputs, Falcon-H1R-7B reaches approximately 1,000 tokens per GPU at batch size 32 and approximately 1,500 tokens per GPU at batch size 64, which is approximately twice the throughput of Qwen3-8B in the same configuration. For 8k input and 16k output, the Falcon-H1R-7B reaches about 1,800 tokens per second per GPU, while the Qwen3-8B remains below 900. The hybrid transformer with the Mamba architecture is an important factor in this scaling behavior, as it reduces the quadratic cost of attention for long sequences.

Falcon-H1R-7B is also designed for test time scaling using deep think with confidence, known as DeepConf. The idea is to run multiple chains of thought in parallel, then use your next token confidence score of the model to filter out noisy traces and keep only the high quality candidates.

On AIME 24 and AIME 25, Falcon-H1R-7B reaches 96.7% accuracy with less than 100 million generated tokens, which places it on the favorable Pareto frontier of accuracy versus token cost compared to other 8B, 14B, and 32B reasoning models. On the parser verifiable subset of AMO Bench, it reaches 35.9% accuracy with 217 million tokens, which is again ahead of similar or larger-scale comparison models.

key takeaways

- Falcon-H1R-7B is a 7B parameter reasoning model that uses hybrid transformers with Mamba2 architecture and supports 256k token context for long series of consideration signals.

- The model is trained in 2 stages, supervised fine tuning on long reasoning traces in math, code and science up to 48k tokens, followed by GRPO based reinforcement learning with verifiable rewards for math and code.

- The Falcon-H1R-7B achieves strong math performance, including approximately 88.1% on AIME 24, 83.1% on AIME 25, and 73.96% overall math scores, which is competitive or better with the larger 14B to 47B models.

- On coding and agentic tasks, the Falcon-H1R-7B gets a group score of 33.95% and 68.6% on LiveCodeBench v6, and it’s also competitive on general logic benchmarks like MMLU Pro and GPQA D.

- The hybrid design improves throughput, reaching approximately 1,000 to 1,800 tokens per second per GPU at reported settings, and supports test time scaling via Deep Think to improve accuracy by using multiple logic samples under a model-controlled token budget.

check it out technical details And model weight hereAlso, feel free to follow us Twitter And don’t forget to join us 100k+ ml subreddit and subscribe our newsletterwait! Are you on Telegram? Now you can also connect with us on Telegram.

Check out our latest releases ai2025.devA 2025-focused analytics platform that models launches, benchmarks and transforms ecosystem activity into a structured dataset that you can filter, compare and export

Asif Razzaq Marktechpost Media Inc. Is the CEO of. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. Their most recent endeavor is the launch of MarketTechPost, an Artificial Intelligence media platform, known for its in-depth coverage of Machine Learning and Deep Learning news that is technically robust and easily understood by a wide audience. The platform boasts of over 2 million monthly views, which shows its popularity among the audience.