Author(s): asif khan

Originally published on Towards AI.

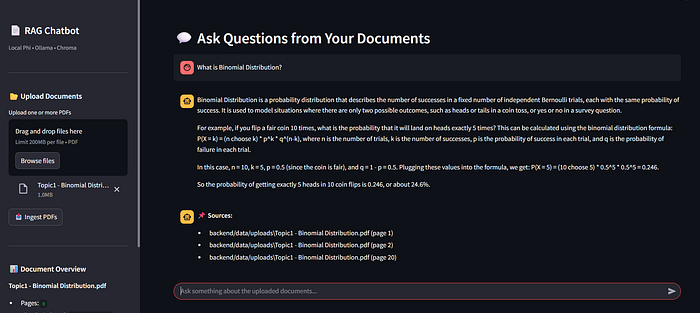

I wanted to build a chatbot without spending a single penny:

No paid API key.

No GPU dependency.

No cloud bill.

The goal was simple:

Understand the logic, concepts, and terms behind a working chatbot, not just make something that “works”.

During my research, I found that Microsoft has a small open-source model called Phi.

It is lightweight and good enough for learning.

Then I found Ollama, which lets you run models locally on your own machine.

No API key. No internet dependency once the models are downloaded. Perfect for this project.

In this blog, I will walk you through every step I followed to build a working RAG-based chatbot backend, keeping things simple and practical.

I will avoid long paragraphs and focus on clarity.

This is Part 1, where we build the backend.

What are we building?

- A local chatbot backend

- Runs completely offline

- Uses PDFs as its knowledge source

- Answers questions from documents only

- Can say “I don’t know” when the answer isn’t in the documents

Prerequisites

1. Operating System

2. Python

3. Virtual Environment (PowerShell)

Create and activate the virtual environment. In PowerShell, create it with:

python -m venv My_Env

To activate it, run .\My_Env\Scripts\Activate.ps1 in the same terminal.

You should see (My_Env) at the start of your terminal prompt.
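If you want to double-check from Python itself that the virtual environment is active, here is a small sketch. It relies on the standard `venv` behaviour: inside a venv, `sys.prefix` points at the venv folder while `sys.base_prefix` still points at the base Python installation. The helper name `in_virtualenv` is just for illustration.

```python
import sys


def in_virtualenv() -> bool:
    """Return True when running inside a venv created by the `venv` module."""
    # Inside a venv, sys.prefix is the venv folder (e.g. My_Env),
    # while sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Python prefix:", sys.prefix)
```

Run it with the venv activated and you should see `True` and a path ending in `My_Env`.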

Next, create this folder structure.

```
ai_chatbot_project/
│
├── backend/
│   ├── app/
│   │   ├── __init__.py
│   │   ├── main.py
│   │   ├── llm.py
│   │   ├── rag.py
│   │   └── schemas.py
│   │
│   ├── data/
│   │   └── uploads/
│   │
│   ├── vectorstore/
│   │
│   ├── .env
│   └── requirements.txt
│
├── frontend/
│   └── streamlit_app.py
│
└── README.md
```

requirements.txt (dependency management)

This file lists:

- All Python packages required to run the backend

Why it is important:

- Reproducible setup

- Easy onboarding for others

- Clean environment management

Anyone can rebuild the backend with a single command.

```
fastapi
uvicorn
# Needed by FastAPI for file uploads (UploadFile / File)
python-multipart

# LangChain core + ecosystem
langchain
langchain-core
langchain-community
langchain-text-splitters
langchain-chroma
langchain-ollama

# Vector database
chromadb

# PDF loading
pypdf

# Environment & utilities
python-dotenv
pydantic
requests

# Frontend
streamlit
```

Set everything up like this.

Make sure you’re in the ai_chatbot_project/backend folder, where requirements.txt lives:

cd ai_chatbot_project\backend

pip install -r requirements.txt
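After installing, you can sanity-check that every package listed in requirements.txt is actually present. The sketch below is only an illustration (the helper names `parse_requirements` and `missing_packages` are hypothetical): it reads bare package names, skips comments and blank lines, and asks the standard library's `importlib.metadata` whether each distribution is installed. It does not handle version specifiers or extras.

```python
from importlib.metadata import PackageNotFoundError, version
from pathlib import Path


def parse_requirements(text: str) -> list[str]:
    """Return bare package names, ignoring blank lines and # comments."""
    names = []
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if line:
            names.append(line)
    return names


def missing_packages(names: list[str]) -> list[str]:
    """Return the names that importlib.metadata cannot find."""
    missing = []
    for name in names:
        try:
            version(name)  # raises if the distribution isn't installed
        except PackageNotFoundError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    req = Path("requirements.txt")
    if req.exists():
        print("Missing:", missing_packages(parse_requirements(req.read_text())) or "none")
```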

Installing Ollama and Phi

Step 1: Download Ollama from:

https://ollama.com

Download and install Ollama for Windows.

After installation:

- Ollama runs as a background service

- You don’t need to open it manually

Verify that Ollama is installed:

- Open a new terminal and run:

ollama --version

Step 2: Pull the Microsoft Phi Model Locally

ollama pull phi

This downloads the Microsoft Phi model locally.

Size:

- ~2-3 GB

- Downloaded only once

Wait for it to complete.

Step 3: Test Phi Locally (Very Important)

Run:

ollama run phi

Ask something simple:

What is FastAPI?

If you get a response, the model works locally.

Exit with: Ctrl + D

Step 4: Pull the embedding model (important for RAG):

Run:

ollama pull nomic-embed-text

Now your system is ready to run the model locally.
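Besides the CLI, Ollama serves a local HTTP API, by default on `http://localhost:11434`. As a stdlib-only sketch, the code below builds a request for the `/api/generate` endpoint with `stream` set to `false`, so Ollama replies with a single JSON object whose `response` field holds the generated text. The function names are illustrative, and `ask_ollama` only works while Ollama is running and the `phi` model has been pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default local address


def build_generate_request(prompt: str, model: str = "phi") -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_ollama(prompt: str, model: str = "phi") -> str:
    """Send the prompt to the local model and return its text reply."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running, `print(ask_ollama("What is FastAPI?"))` should print roughly the same kind of answer you saw with `ollama run phi`.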

Why do we need RAG?

An LLM will answer almost anything, even when the answer is not in your document.

That’s dangerous, because it can sound confident while making things up.

So we use RAG (Retrieval-Augmented Generation):

- Load the document

- Convert the text into embeddings

- Store the embeddings in a vector database

- Retrieve the most relevant excerpts

- Answer using only the retrieved content
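To make these steps concrete, here is a toy, dependency-free sketch of the retrieve-then-answer idea. It swaps the real embedding model for a simple bag-of-words count vector (an assumption for illustration only; `nomic-embed-text` produces dense learned vectors), ranks chunks by cosine similarity, and falls back to “I don’t know” when nothing is similar enough. The `min_score` threshold of 0.2 is arbitrary.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2, min_score: float = 0.2) -> list[str]:
    """Return up to k chunks most similar to the question, above min_score."""
    q = embed(question)
    scored = sorted(((cosine(q, embed(c)), c) for c in chunks), reverse=True)
    return [c for score, c in scored[:k] if score >= min_score]


def answer(question: str, chunks: list[str]) -> str:
    """Answer only from retrieved text; otherwise admit ignorance."""
    hits = retrieve(question, chunks)
    if not hits:
        return "I don't know"
    return " ".join(hits)  # a real system would pass these to the LLM as context


# The "vector database" here is just a list of text chunks.
docs = [
    "FastAPI is a Python web framework for building APIs.",
    "Ollama runs large language models locally.",
    "Chroma stores embeddings for semantic search.",
]
```

Here `answer("How does Ollama run language models?", docs)` returns the Ollama sentence, while an unrelated question such as “What is the capital of France?” gets “I don’t know”.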

Backend files explained

1) main.py – Application entry point

This is the starting point of the backend.

Responsibilities:

- Creates the FastAPI application

- Exposes the API endpoints

- Acts as a bridge between frontend and backend logic

Main things it handles:

- PDF upload requests

- Question-answer requests

- Request validation and response formatting

Think of main.py as a traffic controller.

It doesn’t do heavy processing; it just routes each request to the right place.

```python
import shutil
from pathlib import Path

from fastapi import FastAPI, File, HTTPException, UploadFile

from app.llm import llm, rag_answer
from app.rag import ingest_pdf
from app.schemas import QuestionRequest

app = FastAPI(title="AI Chatbot Backend")

# Base directory → backend/ (this file lives in backend/app/)
BASE_DIR = Path(__file__).resolve().parents[1]
UPLOAD_DIR = BASE_DIR / "data" / "uploads"
UPLOAD_DIR.mkdir(parents=True, exist_ok=True)


@app.get("/")
def home():
    # Simple health check
    return {"status": "Backend is running"}


@app.post("/ask")
def ask_question(payload: QuestionRequest):
    # Plain LLM call, no documents involved
    try:
        response = llm.invoke(payload.question)
        return {"answer": response}
    except Exception as e:
        raise HTTPException(status_code=500, detail=str(e))


@app.post("/upload-pdf")
def upload_pdf(file: UploadFile = File(...)):
    # Keep only the base name so a crafted filename can't escape UPLOAD_DIR
    file_path = UPLOAD_DIR / Path(file.filename).name
    with open(file_path, "wb") as buffer:
        shutil.copyfileobj(file.file, buffer)

    # Load, split, embed, and store the PDF in the vector database
    chunks = ingest_pdf(str(file_path))
    return {"message": "PDF ingested successfully", "chunks": chunks}


@app.post("/ask-pdf")
def ask_pdf(payload: QuestionRequest):
    # Answer grounded only in the uploaded documents
    return rag_answer(payload.question, payload.chat_history)
```
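Once the server is running (for example with `uvicorn app.main:app --reload` from the backend/ folder), any HTTP client can talk to it. As a stdlib-only sketch, assuming uvicorn’s default address `http://127.0.0.1:8000`, the code below builds the JSON request for `/ask-pdf`. Because of `QuestionRequest`, both `question` and `chat_history` must be sent. The function names are hypothetical.

```python
import json
import urllib.request

BASE_URL = "http://127.0.0.1:8000"  # uvicorn's default host and port (assumption)


def build_ask_pdf_request(question: str, chat_history: str = "") -> urllib.request.Request:
    """Build a POST /ask-pdf request matching the QuestionRequest schema."""
    payload = {"question": question, "chat_history": chat_history}
    return urllib.request.Request(
        f"{BASE_URL}/ask-pdf",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_ask_pdf(question: str, chat_history: str = "") -> dict:
    """Send the question and return the backend's {"answer", "sources"} dict."""
    request = build_ask_pdf_request(question, chat_history)
    with urllib.request.urlopen(request, timeout=300) as resp:  # local models can be slow
        return json.loads(resp.read())
```

With the backend running and a PDF uploaded, `call_ask_pdf("What is this document about?")` returns a dict with `answer` and `sources` keys.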

2) rag.py – Document ingestion and retrieval logic

This file handles everything related to documents.

Responsibilities:

- Loading PDF files

- Splitting large text into smaller chunks

- Creating embeddings from text

- Storing and retrieving vectors from the vector database

Important design choices here:

- Uses a dedicated embedding model, not the LLM

- Uses a persistent vector store, so the data survives restarts

- Uses one clear collection name, so ingestion and retrieval always read and write the same data and nothing gets lost

In simple terms, rag.py is the chatbot’s memory.

```python
from pathlib import Path

from langchain_chroma import Chroma
from langchain_community.document_loaders import PyPDFLoader
from langchain_ollama import OllamaEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Base directory → backend/ (this file lives in backend/app/)
BASE_DIR = Path(__file__).resolve().parents[1]
UPLOAD_DIR = BASE_DIR / "data" / "uploads"
VECTOR_DIR = BASE_DIR / "vectorstore"
COLLECTION_NAME = "pdf_documents"

UPLOAD_DIR.mkdir(parents=True, exist_ok=True)
VECTOR_DIR.mkdir(parents=True, exist_ok=True)

# Dedicated embedding model (not the chat LLM)
embeddings = OllamaEmbeddings(model="nomic-embed-text")


def ingest_pdf(file_path: str):
    # 1. Load the PDF (one Document per page)
    loader = PyPDFLoader(file_path)
    documents = loader.load()

    # 2. Split pages into overlapping chunks
    splitter = RecursiveCharacterTextSplitter(
        chunk_size=800,
        chunk_overlap=150,
    )
    chunks = splitter.split_documents(documents)

    # 3. Embed the chunks and store them in the persistent Chroma collection
    vectordb = Chroma(
        collection_name=COLLECTION_NAME,
        persist_directory=str(VECTOR_DIR),
        embedding_function=embeddings,
    )
    vectordb.add_documents(chunks)

    return {
        "pages": len({doc.metadata.get("page") for doc in documents}),
        "chunks": len(chunks),
    }


def get_retriever_with_sources():
    # Open the same collection and return a retriever for the top 4 chunks
    vectordb = Chroma(
        collection_name=COLLECTION_NAME,
        persist_directory=str(VECTOR_DIR),
        embedding_function=embeddings,
    )
    return vectordb.as_retriever(search_kwargs={"k": 4})
```
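To see what `chunk_size=800` and `chunk_overlap=150` mean, here is a simplified fixed-window splitter. It is not how `RecursiveCharacterTextSplitter` works internally (that splitter first tries to break on paragraphs, lines, and words), but it shows the core idea: each chunk starts `chunk_size - chunk_overlap` characters after the previous one, so neighbouring chunks share 150 characters of context. The function name `split_with_overlap` is hypothetical.

```python
def split_with_overlap(text: str, chunk_size: int = 800, chunk_overlap: int = 150) -> list[str]:
    """Split text into fixed-size character windows that overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new chunk moves forward
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


parts = split_with_overlap("x" * 2000)
print(len(parts), [len(p) for p in parts])  # prints: 3 [800, 800, 700]
```

The overlap is what keeps a sentence that straddles a chunk boundary retrievable from at least one chunk.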

3) llm.py – intelligence and guardrails

This is the most important file in the backend.

Responsibilities:

- Calling the language model

- Building prompts with context

- Enforcing strict rules on when to answer

- Deciding when to say “I don’t know”

Main ideas implemented here:

- The chatbot answers only if the document supports it

- Weak retrieval results are rejected

- Sources are shown only when an answer exists

This file keeps the chatbot honest and trustworthy, not just smart.

```python
from dotenv import load_dotenv
from langchain_core.chat_history import InMemoryChatMessageHistory
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_core.runnables.history import RunnableWithMessageHistory
from langchain_ollama import OllamaLLM

from app.rag import get_retriever_with_sources

load_dotenv()  # reads backend/.env if present (not required for the local setup)

# For the local version: Phi served by Ollama
llm = OllamaLLM(model="phi", temperature=0.2)
retriever = get_retriever_with_sources()

# General chat prompt (from langchain-core)
prompt = PromptTemplate(
    input_variables=["chat_history", "question"],
    template="""
You are a helpful assistant.

Conversation so far:
{chat_history}

User question:
{question}

Answer clearly and concisely:
""",
)

# Document-grounded (RAG) prompt
rag_prompt = PromptTemplate(
    input_variables=["chat_history", "context", "question"],
    template="""
Answer the question ONLY using the context below.
If the answer is not present, say "I don't know".

Conversation so far:
{chat_history}

Context:
{context}

Question:
{question}

Answer:
""",
)


def rag_answer(question: str, chat_history: str):
    docs = retriever.invoke(question)

    # 1. Weak retrieval (fewer than 2 chunks found) → no answer
    if not docs or len(docs) < 2:
        return {
            "answer": "I don't know. This is not mentioned in the document.",
            "sources": [],
        }

    context = "\n\n".join(d.page_content for d in docs)
    answer = llm.invoke(
        rag_prompt.format(
            context=context,
            question=question,
            chat_history=chat_history,
        )
    )
    answer_text = answer.strip() if isinstance(answer, str) else str(answer).strip()
    normalized = answer_text.lower()

    # 2. Empty or generic "can't answer" replies → reject
    if (
        not answer_text
        or "i don't know" in normalized
        or "not mentioned" in normalized
        or "cannot find" in normalized
        or "i'm sorry" in normalized  # lowercase, since we match against normalized text
    ):
        return {
            "answer": "I don't know. This is not mentioned in the document.",
            "sources": [],
        }

    # 3. Valid answer → attach sources
    sources = sorted({
        f"{doc.metadata.get('source')} (page {doc.metadata.get('page')})"
        for doc in docs
        if doc.metadata.get("source") is not None
    })
    return {"answer": answer_text, "sources": sources}


# The same RAG flow as an LCEL chain (not used by main.py)
rag_chain = (
    {
        "context": retriever | (lambda docs: "\n\n".join(d.page_content for d in docs)),
        "question": RunnablePassthrough(),
        "chat_history": lambda _: "",  # rag_prompt also expects chat_history
    }
    | rag_prompt
    | llm
)

# Base chain (RunnableSequence)
base_chain = prompt | llm

# Simple in-memory message store: session_id → history
_store = {}


def get_session_history(session_id: str):
    if session_id not in _store:
        _store[session_id] = InMemoryChatMessageHistory()
    return _store[session_id]


# Chain with memory
chat_chain = RunnableWithMessageHistory(
    base_chain,
    get_session_history,
    input_messages_key="question",
    history_messages_key="chat_history",
)
```
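The guardrails in `rag_answer` are plain Python, so they can be tested without running a model. Here is a standalone sketch of the same checks (too few retrieved chunks, or a reply that sounds like a refusal). The function `apply_guardrails` and its signature are hypothetical, and retrieved chunks are represented as simple dicts instead of LangChain `Document` objects.

```python
NO_ANSWER = "I don't know. This is not mentioned in the document."
REFUSAL_PHRASES = ("i don't know", "not mentioned", "cannot find", "i'm sorry")


def apply_guardrails(docs: list[dict], answer_text: str) -> dict:
    """Mirror rag_answer's checks: weak retrieval or refusal → no answer, no sources."""
    # 1. Fewer than 2 retrieved chunks counts as weak retrieval
    if len(docs) < 2:
        return {"answer": NO_ANSWER, "sources": []}

    # 2. Empty or refusal-like answers are rejected (compared in lowercase)
    text = answer_text.strip()
    if not text or any(phrase in text.lower() for phrase in REFUSAL_PHRASES):
        return {"answer": NO_ANSWER, "sources": []}

    # 3. Valid answer → unique, sorted "source (page N)" labels
    sources = sorted({
        f"{d.get('source')} (page {d.get('page')})" for d in docs if d.get("source") is not None
    })
    return {"answer": text, "sources": sources}


docs = [
    {"source": "guide.pdf", "page": 2},
    {"source": "guide.pdf", "page": 0},
]
```

Keeping these rules in one pure function also makes it easy to add new refusal phrases later without touching the model code.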

4) schemas.py – Request and response validation

This file defines the shape of the data flowing through the backend.

Responsibilities:

- Validating incoming requests

- Enforcing required fields

- Rejecting malformed input

Why it matters:

- Avoids silent bugs

- Makes the API predictable

- Helps with future scaling and debugging

Think of schemas.py as a contract between the frontend and the backend.

```python
from pydantic import BaseModel


class QuestionRequest(BaseModel):
    question: str      # the user's question
    chat_history: str  # previous conversation, flattened into one string
```
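Because `chat_history` is a plain string, the client decides how past turns are flattened. One possible convention (an assumption; the backend accepts any string) is one `Role: text` line per turn, keeping only the most recent turns so the prompt stays short. The helper `format_chat_history` is hypothetical.

```python
def format_chat_history(turns: list[tuple[str, str]], max_turns: int = 6) -> str:
    """Flatten (role, text) pairs into the string QuestionRequest.chat_history expects."""
    recent = turns[-max_turns:] if max_turns > 0 else []
    return "\n".join(f"{role.capitalize()}: {text.strip()}" for role, text in recent)


history = [
    ("user", "What is this PDF about?"),
    ("assistant", "It describes a local RAG chatbot."),
]
payload = {"question": "Which model does it use?", "chat_history": format_chat_history(history)}
```

For the very first question, `chat_history` can simply be an empty string.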

5) data/uploads/ – uploaded files

This folder stores:

- User uploaded PDF documents

Why it exists:

- Keeps raw documents separate from processed data

- It becomes easier to manage or delete files later

This folder is just temporary storage; it holds no intelligence.

6) vectorstore/ – implicit knowledge

This folder contains:

- Vector database files created by ChromaDB

Why it matters:

- This is where meaning is stored: each chunk’s embedding, kept alongside its text

- Enables semantic search

- Indexed documents persist across restarts

Deleting this folder resets the chatbot’s knowledge.
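If you reset often, a small helper can make that step explicit. This is a hypothetical utility, not one of the project files above. Run it with the backend stopped (on Windows, files that are still open can’t be deleted), then re-upload your PDFs.

```python
import shutil
from pathlib import Path


def reset_vectorstore(vector_dir: Path) -> None:
    """Delete the persisted vector store and recreate an empty folder."""
    if vector_dir.exists():
        shutil.rmtree(vector_dir)  # removes every Chroma file inside
    vector_dir.mkdir(parents=True, exist_ok=True)
```

For example, from the project root: `reset_vectorstore(Path("backend/vectorstore"))`.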

7) .env – environment configuration

Used to store:

- Configuration values

- Environment-specific settings

Even though we avoid paid APIs for now, having this file in place makes the project ready for:

- Future API keys

- Deployment environments

- Secure configuration handling
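As an example of what could live in .env, here is a sketch that reads model names from environment variables and falls back to the values used in this project. The variable names `LLM_MODEL` and `EMBED_MODEL` are hypothetical. `load_dotenv(".env")` from python-dotenv (already in requirements.txt) copies the file’s entries into `os.environ` without overriding variables that are already set; the path is relative to the working directory, which is backend/ if you start the app from there. The import is guarded so the sketch also runs without python-dotenv.

```python
# Example backend/.env (hypothetical variable names):
#   LLM_MODEL=phi
#   EMBED_MODEL=nomic-embed-text
import os

try:
    from dotenv import load_dotenv  # python-dotenv, listed in requirements.txt

    load_dotenv(".env")  # missing file is fine; existing env vars are not overridden
except ImportError:
    pass  # fall back to plain environment variables


def get_settings() -> dict:
    """Read model names from the environment, defaulting to this project's choices."""
    return {
        "llm_model": os.getenv("LLM_MODEL", "phi"),
        "embed_model": os.getenv("EMBED_MODEL", "nomic-embed-text"),
    }
```

`llm.py` could then use `OllamaLLM(model=get_settings()["llm_model"], temperature=0.2)` so switching models needs no code change.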

At this point, we have a fully functioning backend that runs locally and answers questions from your documents.

In Part 2, we will first validate this backend by testing it in the Swagger UI, and then build a clean, chat-based frontend using Streamlit.

Published via Towards AI